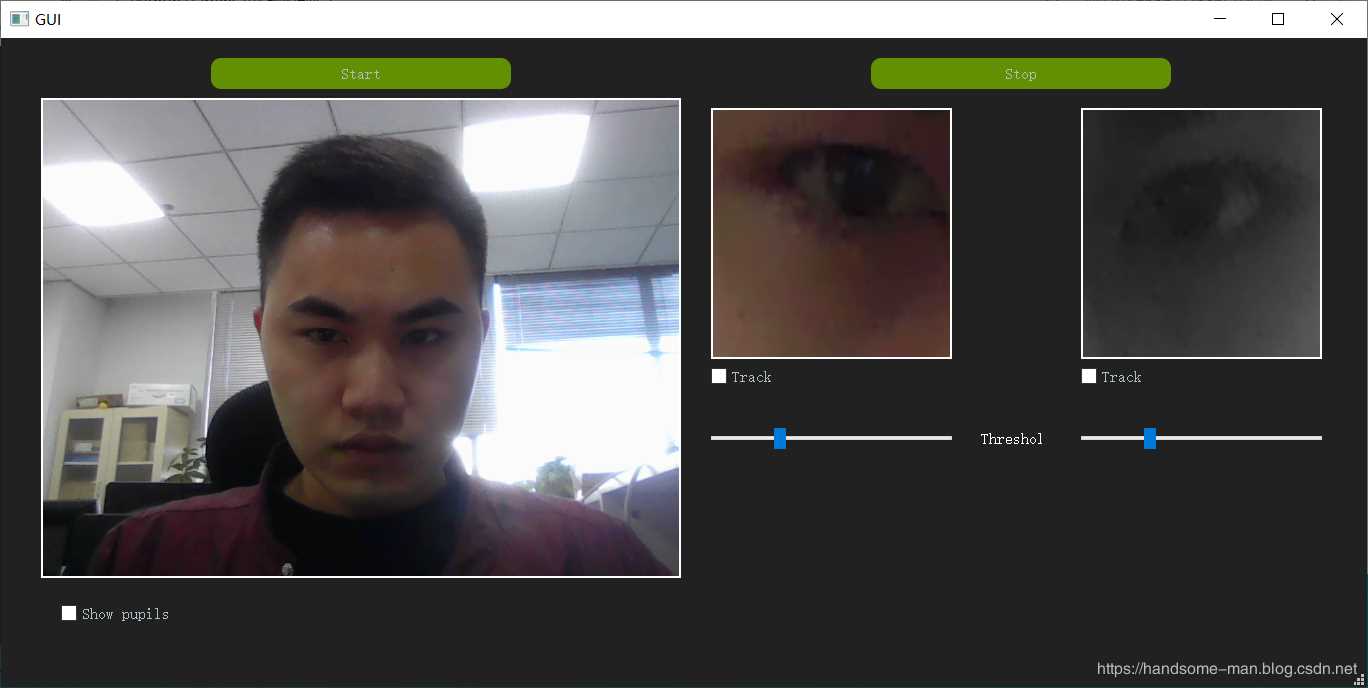

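This article implements real-time eye tracking with Python and OpenCV. No high-end hardware is required; an ordinary webcam is enough. The result is shown in the accompanying image.

Project demo: https://www.bilibili.com/video/av75181965/

The main program of the project is as follows: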

import sys

import cv2
import numpy as np
from PyQt5.QtCore import QTimer
from PyQt5.QtGui import QPixmap, QImage
from PyQt5.QtWidgets import QApplication, QMainWindow
from PyQt5.uic import loadUi

import process


class Window(QMainWindow):
    def __init__(self):
        super(Window, self).__init__()
        loadUi('GUImain.ui', self)
        with open("style.css", "r") as css:
            self.setStyleSheet(css.read())
        # Haar cascades for face and eyes, plus a blob detector for the pupils
        self.face_detector, self.eye_detector, self.detector = process.init_cv()
        self.startButton.clicked.connect(self.start_webcam)
        self.stopButton.clicked.connect(self.stop_webcam)
        self.camera_is_running = False
        # Last successful detections, reused when a frame yields no blob
        self.previous_right_keypoints = None
        self.previous_left_keypoints = None
        self.previous_right_blob_area = None
        self.previous_left_blob_area = None

    def start_webcam(self):
        if not self.camera_is_running:
            # Prefer DirectShow: VideoCapture(0) sometimes fails with error -1072875772
            self.capture = cv2.VideoCapture(0, cv2.CAP_DSHOW)
            if not self.capture.isOpened():
                self.capture = cv2.VideoCapture(0)  # fall back to the default backend
            self.camera_is_running = True
            self.timer = QTimer(self)
            self.timer.timeout.connect(self.update_frame)
            self.timer.start(2)

    def stop_webcam(self):
        if self.camera_is_running:
            self.capture.release()
            self.timer.stop()
            self.camera_is_running = False

    def update_frame(self):  # logic of the main loop
        ok, base_image = self.capture.read()
        if not ok:  # no frame available (camera busy or disconnected)
            return
        self.display_image(base_image)
        # OpenCV delivers frames in BGR order
        processed_image = cv2.cvtColor(base_image, cv2.COLOR_BGR2GRAY)
        (face_frame, face_frame_gray, left_eye_estimated_position,
         right_eye_estimated_position, _, _) = process.detect_face(
            base_image, processed_image, self.face_detector)

        if face_frame is not None:
            (left_eye_frame, right_eye_frame,
             left_eye_frame_gray, right_eye_frame_gray) = process.detect_eyes(
                face_frame, face_frame_gray,
                left_eye_estimated_position, right_eye_estimated_position,
                self.eye_detector)

            if right_eye_frame is not None:
                if self.rightEyeCheckbox.isChecked():
                    right_eye_threshold = self.rightEyeThreshold.value()
                    (right_keypoints, self.previous_right_keypoints,
                     self.previous_right_blob_area) = self.get_keypoints(
                        right_eye_frame, right_eye_frame_gray, right_eye_threshold,
                        previous_area=self.previous_right_blob_area,
                        previous_keypoint=self.previous_right_keypoints)
                    process.draw_blobs(right_eye_frame, right_keypoints)
                # QImage needs a C-contiguous uint8 buffer
                right_eye_frame = np.require(right_eye_frame, np.uint8, 'C')
                self.display_image(right_eye_frame, window='right')

            if left_eye_frame is not None:
                if self.leftEyeCheckbox.isChecked():
                    left_eye_threshold = self.leftEyeThreshold.value()
                    (left_keypoints, self.previous_left_keypoints,
                     self.previous_left_blob_area) = self.get_keypoints(
                        left_eye_frame, left_eye_frame_gray, left_eye_threshold,
                        previous_area=self.previous_left_blob_area,
                        previous_keypoint=self.previous_left_keypoints)
                    process.draw_blobs(left_eye_frame, left_keypoints)
                left_eye_frame = np.require(left_eye_frame, np.uint8, 'C')
                self.display_image(left_eye_frame, window='left')

        if self.pupilsCheckbox.isChecked():  # draws keypoints on pupils on main window
            self.display_image(base_image)

    def get_keypoints(self, frame, frame_gray, threshold, previous_keypoint, previous_area):
        keypoints = process.process_eye(frame_gray, threshold, self.detector,
                                        prevArea=previous_area)
        if keypoints:
            previous_keypoint = keypoints
            previous_area = keypoints[0].size
        else:
            # Nothing detected in this frame: reuse the last known keypoints
            keypoints = previous_keypoint
        return keypoints, previous_keypoint, previous_area

    def display_image(self, img, window='main'):
        # Makes OpenCV images displayable on PyQt, displays them
        qformat = QImage.Format_Indexed8
        if len(img.shape) == 3:
            if img.shape[2] == 4:  # RGBA
                qformat = QImage.Format_RGBA8888
            else:  # RGB
                qformat = QImage.Format_RGB888
        out_image = QImage(img, img.shape[1], img.shape[0], img.strides[0], qformat)
        out_image = out_image.rgbSwapped()  # BGR to RGB
        if window == 'main':  # main window
            self.baseImage.setPixmap(QPixmap.fromImage(out_image))
            self.baseImage.setScaledContents(True)
        elif window == 'left':  # left eye window
            self.leftEyeBox.setPixmap(QPixmap.fromImage(out_image))
            self.leftEyeBox.setScaledContents(True)
        elif window == 'right':  # right eye window
            self.rightEyeBox.setPixmap(QPixmap.fromImage(out_image))
            self.rightEyeBox.setScaledContents(True)


if __name__ == "__main__":
    app = QApplication(sys.argv)
    window = Window()
    window.setWindowTitle("GUI")
    window.show()
    sys.exit(app.exec_())
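
The get_keypoints method in the main program remembers the last successful pupil detection and falls back to it when a frame yields no blob, for example during a blink. The following is a minimal sketch of that idea only, written without OpenCV or PyQt. The KeypointMemory class and its update method are hypothetical names introduced for illustration, and plain floats stand in for the cv2.KeyPoint objects used by the real program.

```python
from typing import List, Optional


class KeypointMemory:
    """Hypothetical helper: remembers the last non-empty detection for one eye."""

    def __init__(self) -> None:
        self.previous_keypoints: Optional[List[float]] = None
        self.previous_area: Optional[float] = None

    def update(self, detected: List[float]) -> Optional[List[float]]:
        """Return what should be drawn for this frame.

        `detected` holds blob sizes (stand-ins for cv2.KeyPoint objects).
        """
        if detected:
            # Fresh detection: remember it for later frames
            self.previous_keypoints = detected
            self.previous_area = detected[0]
            return detected
        # Nothing found in this frame: reuse the last result (may be None)
        return self.previous_keypoints


memory = KeypointMemory()
first = memory.update([12.0])  # detection succeeds
second = memory.update([])     # detection fails, previous result is reused
```

Keeping one such record per eye, as the main program does with its previous_left_* and previous_right_* attributes, lets the two eyes lose and regain tracking independently.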

The eye detection program (the process module imported by the main program) is as follows:

import os

import cv2


def init_cv():
    """Loads all of the cv2 tools: face cascade, eye cascade and blob detector."""
    face_detector = cv2.CascadeClassifier(
        os.path.join("Classifiers", "haar", "haarcascade_frontalface_default.xml"))
    eye_detector = cv2.CascadeClassifier(
        os.path.join("Classifiers", "haar", "haarcascade_eye.xml"))
    detector_params = cv2.SimpleBlobDetector_Params()
    detector_params.filterByArea = True
    detector_params.maxArea = 1500
    detector = cv2.SimpleBlobDetector_create(detector_params)
    return face_detector, eye_detector, detector


def detect_face(img, img_gray, cascade):
    """
    Detects all faces, if multiple found, works with the biggest. Returns the following parameters:
    1. The face frame
    2. A gray version of the face frame
    3. Estimated left eye coordinates range
    4. Estimated right eye coordinates range
    5. X of the face frame
    6. Y of the face frame
    """
    coords = cascade.detectMultiScale(img, 1.3, 5)
    if len(coords) == 0:
        return None, None, None, None, None, None
    # Keep the tallest detection when several faces are found
    x, y, w, h = max(coords, key=lambda c: c[3])
    frame = img[y:y + h, x:x + w]
    frame_gray = img_gray[y:y + h, x:x + w]
    # Horizontal ranges (relative to the face frame) where each eye center is expected
    lest = (int(w * 0.1), int(w * 0.45))
    rest = (int(w * 0.55), int(w * 0.9))
    return frame, frame_gray, lest, rest, x, y


def detect_eyes(img, img_gray, lest, rest, cascade):
    """
    :param img: image frame
    :param img_gray: gray image frame
    :param lest: left eye estimated position, needed to filter out nostril, know what eye is found
    :param rest: right eye estimated position
    :param cascade: Haar cascade
    :return: colored and grayscale versions of eye frames
    """
    leftEye = None
    rightEye = None
    leftEyeG = None
    rightEyeG = None
    coords = cascade.detectMultiScale(img_gray, 1.3, 5)
    for (x, y, w, h) in coords:
        eyecenter = int(x + w / 2)
        if lest[0] < eyecenter < lest[1]:
            leftEye = img[y:y + h, x:x + w]
            leftEyeG = img_gray[y:y + h, x:x + w]
            leftEye, leftEyeG = cut_eyebrows(leftEye, leftEyeG)
        elif rest[0] < eyecenter < rest[1]:
            rightEye = img[y:y + h, x:x + w]
            rightEyeG = img_gray[y:y + h, x:x + w]
            rightEye, rightEyeG = cut_eyebrows(rightEye, rightEyeG)
        # anything else is a false positive, typically a nostril
    return leftEye, rightEye, leftEyeG, rightEyeG


def process_eye(img, threshold, detector, prevArea=None):
    """
    :param img: eye frame
    :param threshold: threshold value for threshold function
    :param detector: blob detector
    :param prevArea: area of the previous keypoint (used for filtering)
    :return: keypoints
    """
    _, img = cv2.threshold(img, threshold, 255, cv2.THRESH_BINARY)
    img = cv2.erode(img, None, iterations=2)
    img = cv2.dilate(img, None, iterations=4)
    img = cv2.medianBlur(img, 5)
    keypoints = detector.detect(img)
    if keypoints and prevArea and len(keypoints) > 1:
        # Filter out odd blobs: keep the one closest in size to the previous pupil
        closest = min(keypoints, key=lambda kp: abs(kp.size - prevArea))
        keypoints = [closest]
    return keypoints


def cut_eyebrows(img, imgG):
    height, width = img.shape[:2]
    img = img[15:height, 0:width]  # cut eyebrows out (15 px)
    imgG = imgG[15:height, 0:width]
    return img, imgG


def draw_blobs(img, keypoints):
    """Draws blobs in place; does nothing when there are no keypoints."""
    if keypoints:
        cv2.drawKeypoints(img, keypoints, img, (0, 0, 255),
                          cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
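
When the blob detector returns several candidates, process_eye keeps the one whose size is closest to the previously tracked pupil, on the assumption that the pupil does not change size abruptly between frames. The sketch below isolates that selection step so it can be run without a camera or OpenCV. Blob and pick_closest_blob are hypothetical names introduced here; Blob only mimics the size attribute of cv2.KeyPoint.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Blob:
    """Hypothetical stand-in for cv2.KeyPoint; only `size` is used for filtering."""
    x: float
    y: float
    size: float


def pick_closest_blob(blobs: List[Blob], previous_size: Optional[float]) -> List[Blob]:
    """Keep the blob whose size is nearest to the previously tracked one."""
    if previous_size is None or len(blobs) <= 1:
        # No history yet, or nothing to choose between: return the input unchanged
        return list(blobs)
    best = min(blobs, key=lambda b: abs(b.size - previous_size))
    return [best]


candidates = [Blob(10, 12, 4.0), Blob(30, 14, 11.5), Blob(8, 40, 25.0)]
chosen = pick_closest_blob(candidates, previous_size=12.0)
```

Here the middle candidate is kept because its size of 11.5 differs least from the previous size of 12.0. Returning a list even for a single result keeps the return type the same as in the unfiltered case, so callers can index the result in either situation.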

That is all for this article. We hope it helps with your learning, and we hope you will continue to support Yisu Cloud (亿速云).
