
This article shows how to use __slots__ in Python to make your code use less memory.

First, why does a dict in Python waste more memory than a list?

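Compared with a list, a dict offers very fast lookup and insertion (constant time on average) that does not slow down as the number of keys grows. The price is memory: a dict needs a lot of it and wastes a fair amount.

With a list, by contrast, searching for a value or inserting at an arbitrary position takes longer as the number of elements grows, but the list occupies little space and wastes very little memory.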
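The difference in lookup cost can be made visible without timing anything. The sketch below is only an illustration: `CountingKey` is a hypothetical helper class, not something from the article or the standard library, that counts how many equality comparisons a membership test triggers.

```python
class CountingKey:
    """Hypothetical helper: a hashable key that counts equality checks."""

    comparisons = 0  # shared counter, reset before each experiment

    def __init__(self, value):
        self.value = value

    def __hash__(self):
        return hash(self.value)

    def __eq__(self, other):
        CountingKey.comparisons += 1
        return isinstance(other, CountingKey) and self.value == other.value


keys = [CountingKey(i) for i in range(1000)]
as_list = keys
as_dict = {k: None for k in keys}

# Search for a key that is equal to the last element but is a different
# object, so the identity shortcut in the membership test does not apply.
target = CountingKey(999)

CountingKey.comparisons = 0
found_in_list = target in as_list   # scans the list element by element
list_comparisons = CountingKey.comparisons

CountingKey.comparisons = 0
found_in_dict = target in as_dict   # jumps to a slot via the hash
dict_comparisons = CountingKey.comparisons

print("list comparisons:", list_comparisons)
print("dict comparisons:", dict_comparisons)
```

The list has to compare against every element until it finds a match, while the dict uses the hash to go almost directly to the right slot.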

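The standard Python interpreter is CPython, where these two data structures correspond to a hash table and an array in C. A hash table needs extra memory to record the mapping between keys and values, whereas an array only needs an index to compute the position of the next element. A hash table therefore occupies more memory than an array, which is why a dict takes more memory than a list.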
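A rough way to check this on your own interpreter is `sys.getsizeof`. This is a minimal sketch, assuming CPython; the exact byte counts depend on the Python version and platform, so none are quoted here.

```python
import sys

n = 1000
as_list = list(range(n))
as_dict = {i: i for i in range(n)}

# sys.getsizeof reports only the container itself (its header plus the
# pointer array or hash table), not the integer objects it refers to.
list_bytes = sys.getsizeof(as_list)
dict_bytes = sys.getsizeof(as_dict)

print("list:", list_bytes, "bytes")
print("dict:", dict_bytes, "bytes")
```

Both containers hold references to the same 1000 integers, so the gap between the two numbers is the overhead of the hash table itself.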

For more detail, read the C source code, which is available from the official Python site: https://www.python.org/downloads/source/

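The following fragments are excerpts I took from the official Python source. They match the Python 2.x headers; the dict layout has changed in later releases.

List source: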

    typedef struct {
        PyObject_VAR_HEAD
        /* Vector of pointers to list elements.  list[0] is ob_item[0], etc. */
        PyObject **ob_item;

        /* ob_item contains space for 'allocated' elements.  The number
         * currently in use is ob_size.
         * Invariants:
         *     0 <= ob_size <= allocated
         *     len(list) == ob_size
         *     ob_item == NULL implies ob_size == allocated == 0
         * list.sort() temporarily sets allocated to -1 to detect mutations.
         *
         * Items must normally not be NULL, except during construction when
         * the list is not yet visible outside the function that builds it.
         */
        Py_ssize_t allocated;
    } PyListObject;

Dict source:

    /* PyDict_MINSIZE is the minimum size of a dictionary.  This many slots are
     * allocated directly in the dict object (in the ma_smalltable member).
     * It must be a power of 2, and at least 4.  8 allows dicts with no more
     * than 5 active entries to live in ma_smalltable (and so avoid an
     * additional malloc); instrumentation suggested this suffices for the
     * majority of dicts (consisting mostly of usually-small instance dicts and
     * usually-small dicts created to pass keyword arguments).
     */
    #define PyDict_MINSIZE 8

    typedef struct {
        /* Cached hash code of me_key.  Note that hash codes are C longs.
         * We have to use Py_ssize_t instead because dict_popitem() abuses
         * me_hash to hold a search finger.
         */
        Py_ssize_t me_hash;
        PyObject *me_key;
        PyObject *me_value;
    } PyDictEntry;

    /*
    To ensure the lookup algorithm terminates, there must be at least one Unused
    slot (NULL key) in the table.
    The value ma_fill is the number of non-NULL keys (sum of Active and Dummy);
    ma_used is the number of non-NULL, non-dummy keys (== the number of non-NULL
    values == the number of Active items).
    To avoid slowing down lookups on a near-full table, we resize the table when
    it's two-thirds full.
    */
    typedef struct _dictobject PyDictObject;
    struct _dictobject {
        PyObject_HEAD
        Py_ssize_t ma_fill;  /* # Active + # Dummy */
        Py_ssize_t ma_used;  /* # Active */

        /* The table contains ma_mask + 1 slots, and that's a power of 2.
         * We store the mask instead of the size because the mask is more
         * frequently needed.
         */
        Py_ssize_t ma_mask;

        /* ma_table points to ma_smalltable for small tables, else to
         * additional malloc'ed memory.  ma_table is never NULL!  This rule
         * saves repeated runtime null-tests in the workhorse getitem and
         * setitem calls.
         */
        PyDictEntry *ma_table;
        PyDictEntry *(*ma_lookup)(PyDictObject *mp, PyObject *key, long hash);
        PyDictEntry ma_smalltable[PyDict_MINSIZE];
    };

PyObject_HEAD source:

    #ifdef Py_TRACE_REFS
    /* Define pointers to support a doubly-linked list of all live heap objects. */
    #define _PyObject_HEAD_EXTRA            \
        struct _object *_ob_next;           \
        struct _object *_ob_prev;

    #define _PyObject_EXTRA_INIT 0, 0,

    #else
    #define _PyObject_HEAD_EXTRA
    #define _PyObject_EXTRA_INIT
    #endif

    /* PyObject_HEAD defines the initial segment of every PyObject. */
    #define PyObject_HEAD                   \
        _PyObject_HEAD_EXTRA                \
        Py_ssize_t ob_refcnt;               \
        struct _typeobject *ob_type;

PyObject_VAR_HEAD source:

    /* PyObject_VAR_HEAD defines the initial segment of all variable-size
     * container objects.  These end with a declaration of an array with 1
     * element, but enough space is malloc'ed so that the array actually
     * has room for ob_size elements.  Note that ob_size is an element count,
     * not necessarily a byte count.
     */
    #define PyObject_VAR_HEAD               \
        PyObject_HEAD                       \
        Py_ssize_t ob_size; /* Number of items in variable part */

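Now we know why a dict takes up more memory than a list. This matters for classes because, by default, every instance stores its attributes in its own __dict__. So how can you make your classes use less memory?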
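As a quick check that an ordinary instance really carries a dict of its own, here is a minimal sketch. The class mirrors the `Foobar` example used later in the article, and the extra attribute `y` is only there for illustration.

```python
class Foobar:
    def __init__(self, x):
        self.x = x


f = Foobar(42)

# Attributes set in __init__ live in the per-instance dictionary.
attrs_before = dict(f.__dict__)
print(attrs_before)

# Because the storage is a dict, new attributes can be added at any time.
f.y = 1
print(sorted(f.__dict__))
```

That flexibility is what costs memory when a program creates a very large number of small objects.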

There are two ways to do it:

The first is to use __slots__; the other is to use collections.namedtuple.

First, write a class the standard way:

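    #!/usr/bin/env python
    # Assumption: @profile comes from the third-party memory_profiler package.
    from memory_profiler import profile


    class Foobar(object):
        def __init__(self, x):
            self.x = x


    @profile
    def main():
        # Keep one million instances alive so their memory shows up in the report.
        f = [Foobar(42) for i in range(1000000)]


    if __name__ == "__main__":
        main()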

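This defines a class Foobar and creates one million instances of it. The @profile decorator reports the memory usage; it is presumably the one provided by the memory_profiler package.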

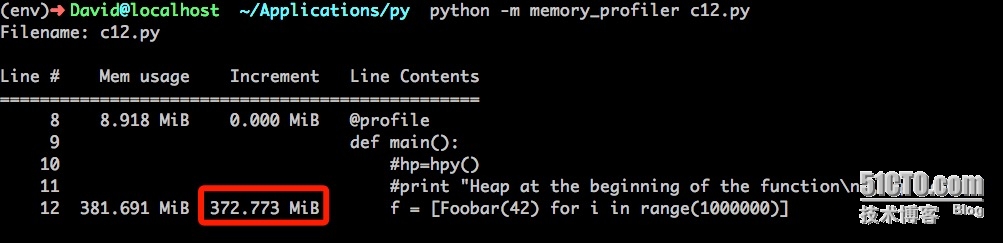

Result:

This code used 372 MB of memory in total.

Next, rewrite the same code using __slots__:

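    #!/usr/bin/env python
    # Assumption: @profile comes from the third-party memory_profiler package.
    from memory_profiler import profile


    class Foobar(object):
        # Declare the only allowed attribute; instances get no __dict__.
        __slots__ = ('x',)

        def __init__(self, x):
            self.x = x


    @profile
    def main():
        f = [Foobar(42) for i in range(1000000)]


    if __name__ == "__main__":
        main()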

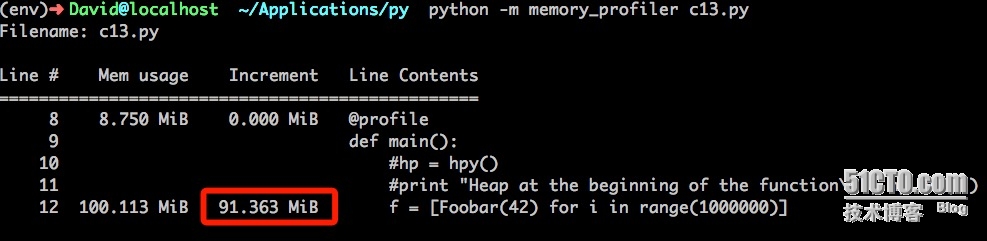

Result:

With __slots__ the program used 91 MB, roughly a quarter of the memory needed when the attribute values are stored in __dict__.
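If memory_profiler is not installed, a similar comparison can be sketched with the standard library's `tracemalloc` module. This is only an approximation of the measurement above, not the method used for the figures in this article: `PlainFoobar`, `SlottedFoobar` and `measure` are hypothetical names, the instance count is reduced to keep the run short, and the absolute numbers will differ between Python versions.

```python
import tracemalloc


class PlainFoobar:
    """Hypothetical class storing its attribute in a per-instance __dict__."""

    def __init__(self, x):
        self.x = x


class SlottedFoobar:
    """Hypothetical class storing its attribute in a fixed slot."""

    __slots__ = ("x",)

    def __init__(self, x):
        self.x = x


def measure(cls, count=100_000):
    """Return the bytes allocated while `count` instances of cls are alive."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    instances = [cls(42) for _ in range(count)]
    after, _ = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


plain_bytes = measure(PlainFoobar)
slotted_bytes = measure(SlottedFoobar)

print("with __dict__ :", plain_bytes, "bytes")
print("with __slots__:", slotted_bytes, "bytes")

# A slotted instance has no __dict__ and rejects undeclared attributes.
s = SlottedFoobar(42)
has_dict = hasattr(s, "__dict__")
try:
    s.y = 1
    rejected = False
except AttributeError:
    rejected = True
print("has __dict__:", has_dict, "| undeclared attribute rejected:", rejected)
```

The last few lines show the trade-off: the memory saving comes from giving up the ability to attach arbitrary attributes to an instance.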

The namedtuple factory in the collections module can achieve the same effect as __slots__. A namedtuple class inherits from tuple, and its __slots__ is set to an empty tuple so that no __dict__ is created.
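A minimal sketch of the namedtuple variant, reusing the `Foobar` name and the single field `x` from the earlier examples:

```python
from collections import namedtuple

# Build a tuple subclass with one named field.
Foobar = namedtuple("Foobar", ["x"])
f = Foobar(42)

print(issubclass(Foobar, tuple))   # the generated class derives from tuple
print(Foobar.__slots__)            # empty, so no per-instance __dict__
print(f.x, f[0])                   # access by name or by position

# Unlike a class with __slots__, a namedtuple instance is immutable.
try:
    f.x = 43
except AttributeError as exc:
    print("cannot reassign:", exc)
```

Note the design difference: a namedtuple cannot be modified after creation, so it is a fit only when the attribute values never change.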

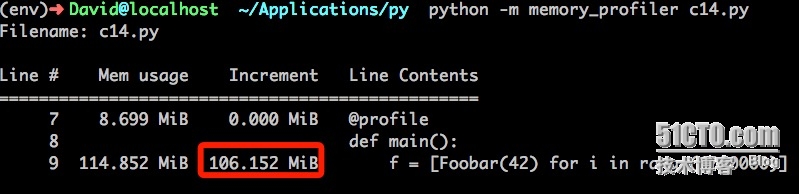

Here is how collections implements it:

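Compared with a class created the ordinary way, a namedtuple also saves a good deal of memory. So when the set of attributes of a class is known to be fixed, __slots__ can be used to optimize memory usage. This technique should not be abused, however, just to make classes static or for similar purposes; that is not in the spirit of Python programs.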
