您好,登录后才能下订单哦!

这篇文章主要讲解了python中如何使用Beautiful Soup,内容清晰明了,相信大家阅读完之后会有帮助。

Beautiful Soup就是Python的一个HTML或XML的解析库,可以用它来方便地从网页中提取数据。它有如下三个特点:

首先,我们要安装它:pip install bs4,然后安装 pip install beautifulsoup4.

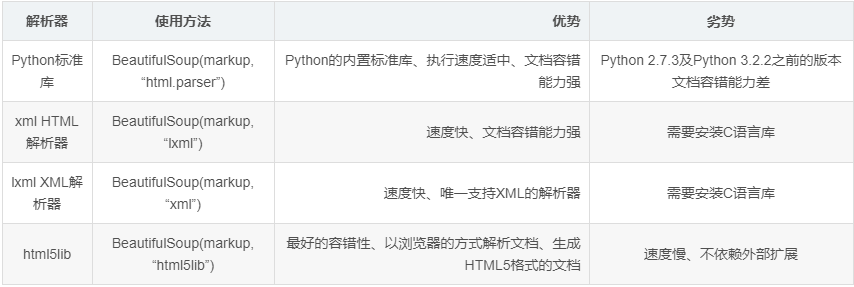

Beautiful Soup支持的解析器

下面我们以lxml解析器为例:

from bs4 import BeautifulSoup

soup = BeautifulSoup('<p>Hello</p>', 'lxml')

print(soup.p.string)

结果:

Hello

beautiful soup美化的效果实例:

html = """ <html><head><title>The Dormouse's story</title></head> <body> <p class="title" name="dromouse"><b>The Dormouse's story</b></p> <p class="story">Once upon a time there were three little sisters; and their names were <a href="http://example.com/elsie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link1"><!-- Elsie --></a>, <a href="http://example.com/lacie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link2">Lacie</a> and <a href="http://example.com/tillie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p> <p class="story">...</p> """ from bs4 import BeautifulSoup soup = BeautifulSoup(html, 'lxml')#调用prettify()方法。这个方法可以把要解析的字符串以标准的缩进格式输出 print(soup.prettify()) print(soup.title.string)

结果:

<html> <head> <title> The Dormouse's story </title> </head> <body> <p class="title" name="dromouse"> <b> The Dormouse's story </b> </p> <p class="story"> Once upon a time there were three little sisters; and their names were <a class="sister" href="http://example.com/elsie" rel="external nofollow" rel="external nofollow" rel="external nofollow" id="link1"> <!-- Elsie --> </a> , <a class="sister" href="http://example.com/lacie" rel="external nofollow" rel="external nofollow" rel="external nofollow" id="link2"> Lacie </a> and <a class="sister" href="http://example.com/tillie" rel="external nofollow" rel="external nofollow" rel="external nofollow" id="link3"> Tillie </a> ; and they lived at the bottom of a well. </p> <p class="story"> ... </p> </body> </html> The Dormouse's story

下面举例说明选择元素、属性、名称的方法

html = """

<html><head><title>The Dormouse's story</title></head>

<body>

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters; and their names were

<a href="http://example.com/elsie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link1"><!-- Elsie --></a>,

<a href="http://example.com/lacie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link2">Lacie</a> and

<a href="http://example.com/tillie" rel="external nofollow" rel="external nofollow" rel="external nofollow" class="sister" id="link3">Tillie</a>;

and they lived at the bottom of a well.</p>

<p class="story">...</p>

"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print('输出结果为title节点加里面的文字内容:\n',soup.title)

print('输出它的类型:\n',type(soup.title))

print('输出节点的文本内容:\n',soup.title.string)

print('结果是节点加其内部的所有内容:\n',soup.head)

print('结果是第一个p节点的内容:\n',soup.p)

print('利用name属性获取节点的名称:\n',soup.title.name)

#这里需要注意的是,有的返回结果是字符串,有的返回结果是字符串组成的列表。

# 比如,name属性的值是唯一的,返回的结果就是单个字符串。

# 而对于class,一个节点元素可能有多个class,所以返回的是列表。

print('每个节点可能有多个属性,比如id和class等:\n',soup.p.attrs)

print('选择这个节点元素后,可以调用attrs获取所有属性:\n',soup.p.attrs['name'])

print('获取p标签的name属性值:\n',soup.p['name'])

print('获取p标签的class属性值:\n',soup.p['class'])

print('获取第一个p节点的文本:\n',soup.p.string)结果:

输出结果为title节点加里面的文字内容:

<title>The Dormouse's story</title>

输出它的类型:

<class 'bs4.element.Tag'>

输出节点的文本内容:

The Dormouse's story

结果是节点加其内部的所有内容:

<head><title>The Dormouse's story</title></head>

结果是第一个p节点的内容:

<p class="title" name="dromouse"><b>The Dormouse's story</b></p>

利用name属性获取节点的名称:

title

每个节点可能有多个属性,比如id和class等:

{'class': ['title'], 'name': 'dromouse'}

选择这个节点元素后,可以调用attrs获取所有属性:

dromouse

获取p标签的name属性值:

dromouse

获取p标签的class属性值:

['title']

获取第一个p节点的文本:

The Dormouse's story在上面的例子中,我们知道每一个返回结果都是bs4.element.Tag类型,它同样可以继续调用节点进行下一步的选择。

html = """

<html><head><title>The Dormouse's story</title></head>

<body>

"""

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print('获取了head节点元素,继续调用head来选取其内部的head节点元素:\n',soup.head.title)

print('继续调用输出类型:\n',type(soup.head.title))

print('继续调用输出内容:\n',soup.head.title.string)结果:

获取了head节点元素,继续调用head来选取其内部的head节点元素: <title>The Dormouse's story</title> 继续调用输出类型: <class 'bs4.element.Tag'> 继续调用输出内容: The Dormouse's story

(1)find_all()

find_all,顾名思义,就是查询所有符合条件的元素。给它传入一些属性或文本,就可以得到符合条件的元素,它的功能十分强大。

find_all(name , attrs , recursive , text , **kwargs)

他的用法:

html='''

<div class="panel">

<div class="panel-heading">

<h5>Hello</h5>

</div>

<div class="panel-body">

<ul class="list" id="list-1">

<li class="element">Foo</li>

<li class="element">Bar</li>

<li class="element">Jay</li>

</ul>

<ul class="list list-small" id="list-2">

<li class="element">Foo</li>

<li class="element">Bar</li>

</ul>

</div>

</div>

'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print('查询所有ul节点,返回结果是列表类型,长度为2:\n',soup.find_all(name='ul'))

print('每个元素依然都是bs4.element.Tag类型:\n',type(soup.find_all(name='ul')[0]))

#将以上步骤换一种方式,遍历出来

for ul in soup.find_all(name='ul'):

print('输出每个u1:',ul.find_all(name='li'))

#遍历两层

for ul in soup.find_all(name='ul'):

print('输出每个u1:',ul.find_all(name='li'))

for li in ul.find_all(name='li'):

print('输出每个元素:',li.string)结果:

查询所有ul节点,返回结果是列表类型,长度为2: [<ul class="list" id="list-1"> <li class="element">Foo</li> <li class="element">Bar</li> <li class="element">Jay</li> </ul>, <ul class="list list-small" id="list-2"> <li class="element">Foo</li> <li class="element">Bar</li> </ul>] 每个元素依然都是bs4.element.Tag类型: <class 'bs4.element.Tag'> 输出每个u1: [<li class="element">Foo</li>, <li class="element">Bar</li>, <li class="element">Jay</li>] 输出每个u1: [<li class="element">Foo</li>, <li class="element">Bar</li>] 输出每个u1: [<li class="element">Foo</li>, <li class="element">Bar</li>, <li class="element">Jay</li>] 输出每个元素: Foo 输出每个元素: Bar 输出每个元素: Jay 输出每个u1: [<li class="element">Foo</li>, <li class="element">Bar</li>] 输出每个元素: Foo 输出每个元素: Bar

看完上述内容,有没有对python中如何使用Beautiful Soup有进一步的了解,如果还想学习更多内容,欢迎关注亿速云行业资讯频道。

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。