
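Kubernetes Deployment (1): Architecture and Features

Kubernetes Deployment (2): System Environment Initialization

Kubernetes Deployment (3): Creating CA Certificates

Kubernetes Deployment (4): ETCD Cluster Deployment

Kubernetes Deployment (5): Haproxy and Keepalived Deployment

Kubernetes Deployment (6): Master Node Deployment

Kubernetes Deployment (7): Node Deployment

Kubernetes Deployment (8): Flannel Network Deployment

Kubernetes Deployment (9): CoreDNS, Dashboard and Ingress Deployment

Kubernetes Deployment (10): Storage with glusterfs and heketi

Kubernetes Deployment (11): Management with Helm and Rancher

Kubernetes Deployment (12): Deploying the Harbor Enterprise Image Registry with Helm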

Harbor on GitHub: https://github.com/goharbor

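Harbor is an enterprise-class registry server for storing and distributing Docker images. It extends the open-source Docker Distribution with features that users commonly need, such as security, identity and management. Keeping the registry close to the build and run environments improves image transfer efficiency. Harbor can replicate images between registries. It also provides advanced security features such as user management, access control and activity auditing.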

Point the A records of h.cnlinux.club and n.cnlinux.club to my load balancer IP, 10.31.90.200. These hostnames are used by the ingress.
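If the A records are not in place yet, you can map the hostnames in /etc/hosts on the machine you test from as a stopgap. The sketch below only prints the lines. It assumes the two hostnames and the VIP from this article, and the function name `harbor_hosts_entries` is hypothetical. DNS is still the proper fix, because every client (including the Docker daemon on each node) has to resolve h.cnlinux.club.

```shell
#!/usr/bin/env bash
# Sketch: print /etc/hosts lines for the Harbor ingress hostnames.
# Testing stopgap only; the real setup uses DNS A records.
set -eu

LB_IP="${LB_IP:-10.31.90.200}"

# Hypothetical helper: one "IP hostname" line per Harbor ingress host.
harbor_hosts_entries() {
  local ip="$1" host
  for host in h.cnlinux.club n.cnlinux.club; do
    printf '%s %s\n' "$ip" "$host"
  done
}

harbor_hosts_entries "$LB_IP"
# To apply (as root):  harbor_hosts_entries "$LB_IP" >> /etc/hosts
```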

```shell
[root@node-01 harbor]# wget https://github.com/goharbor/harbor-helm/archive/1.0.0.tar.gz -O harbor-helm-v1.0.0.tar.gz
```

Extract values.yaml from harbor-helm-v1.0.0.tar.gz and put it in the same directory as harbor-helm-v1.0.0.tar.gz. Then edit values.yaml. In my configuration I changed the fields listed below.

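If the cluster already has a StorageClass, you can use it directly. Set its name in the persistence.persistentVolumeClaim.XXX.storageClass fields and the chart creates several PVCs automatically. Multiple PVCs are harder to manage, so I created a single PVC before deploying. All Harbor services share it, each under its own subPath. For what each field does, see the official documentation at https://github.com/goharbor/harbor-helm.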
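You can also express the single-PVC layout on the command line instead of in values.yaml. This is a minimal sketch. It assumes the chart honors `persistence.persistentVolumeClaim.<component>.existingClaim` and `.subPath`, the keys that appear in the chart's values.yaml shown here. The function name `harbor_pvc_set_flags` is hypothetical, and the script only prints the Helm 2 command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: print `--set` flags that point every Harbor component at one
# pre-created PVC, each in its own subPath (same layout as values.yaml here).
set -eu

CLAIM="pvc-harbor"
COMPONENTS="registry chartmuseum jobservice database redis"

# Hypothetical helper: two flags (existingClaim, subPath) per component.
harbor_pvc_set_flags() {
  local claim="$1" c
  for c in $COMPONENTS; do
    printf '%s\n' "--set persistence.persistentVolumeClaim.$c.existingClaim=$claim"
    printf '%s\n' "--set persistence.persistentVolumeClaim.$c.subPath=$c"
  done
}

# Print the Helm 2 install command instead of executing it.
flags="$(harbor_pvc_set_flags "$CLAIM" | tr '\n' ' ')"
echo "helm install --name harbor harbor-helm-v1.0.0.tar.gz ${flags% }"
```

This article keeps the settings in values.yaml, which is easier to review and to re-apply on upgrade. The flag form is handy for a one-off test.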

The fields I changed:

- `expose.ingress.hosts.core`
- `expose.ingress.hosts.notary`
- `externalURL`
- `persistence.persistentVolumeClaim.registry.existingClaim`
- `persistence.persistentVolumeClaim.registry.subPath`
- `persistence.persistentVolumeClaim.chartmuseum.existingClaim`
- `persistence.persistentVolumeClaim.chartmuseum.subPath`
- `persistence.persistentVolumeClaim.jobservice.existingClaim`
- `persistence.persistentVolumeClaim.jobservice.subPath`
- `persistence.persistentVolumeClaim.database.existingClaim`
- `persistence.persistentVolumeClaim.database.subPath`
- `persistence.persistentVolumeClaim.redis.existingClaim`
- `persistence.persistentVolumeClaim.redis.subPath`

The complete values.yaml:

```yaml
expose:
  type: ingress
  tls:
    enabled: true
    # Empty: the chart generates a self-signed certificate
    secretName: ""
    notarySecretName: ""
    commonName: ""
  ingress:
    hosts:
      core: h.cnlinux.club
      notary: n.cnlinux.club
    annotations:
      ingress.kubernetes.io/ssl-redirect: "true"
      nginx.ingress.kubernetes.io/ssl-redirect: "true"
      # "0" disables the request body size limit (large image layers)
      ingress.kubernetes.io/proxy-body-size: "0"
      nginx.ingress.kubernetes.io/proxy-body-size: "0"
  clusterIP:
    name: harbor
    ports:
      httpPort: 80
      httpsPort: 443
      notaryPort: 4443
  nodePort:
    name: harbor
    ports:
      http:
        port: 80
        nodePort: 30002
      https:
        port: 443
        nodePort: 30003
      notary:
        port: 4443
        nodePort: 30004
externalURL: https://h.cnlinux.club
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    # Every component reuses the pre-created PVC "pvc-harbor",
    # each in its own subPath.
    registry:
      existingClaim: "pvc-harbor"
      storageClass: ""
      subPath: "registry"
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: "pvc-harbor"
      storageClass: ""
      subPath: "chartmuseum"
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: "pvc-harbor"
      storageClass: ""
      subPath: "jobservice"
      accessMode: ReadWriteOnce
      size: 1Gi
    database:
      existingClaim: "pvc-harbor"
      storageClass: ""
      subPath: "database"
      accessMode: ReadWriteOnce
      size: 1Gi
    redis:
      existingClaim: "pvc-harbor"
      storageClass: ""
      subPath: "redis"
      accessMode: ReadWriteOnce
      size: 1Gi
  imageChartStorage:
    type: filesystem
    filesystem:
      rootdirectory: /storage
imagePullPolicy: IfNotPresent
logLevel: debug
# Initial password of the Harbor "admin" user
harborAdminPassword: "Harbor12345"
# Encryption key; must be a 16-character string. Change it for real deployments.
secretKey: "not-a-secure-key"
nginx:
  image:
    repository: goharbor/nginx-photon
    tag: v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
portal:
  image:
    repository: goharbor/harbor-portal
    tag: v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
core:
  image:
    repository: goharbor/harbor-core
    tag: v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
adminserver:
  image:
    repository: goharbor/harbor-adminserver
    tag: v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
jobservice:
  image:
    repository: goharbor/harbor-jobservice
    tag: v1.7.0
  replicas: 1
  maxJobWorkers: 10
  jobLogger: file
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
registry:
  registry:
    image:
      repository: goharbor/registry-photon
      tag: v2.6.2-v1.7.0
  controller:
    image:
      repository: goharbor/harbor-registryctl
      tag: v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
chartmuseum:
  enabled: true
  image:
    repository: goharbor/chartmuseum-photon
    tag: v0.7.1-v1.7.0
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
clair:
  enabled: true
  image:
    repository: goharbor/clair-photon
    tag: v2.0.7-v1.7.0
  replicas: 1
  httpProxy:
  httpsProxy:
  updatersInterval: 12
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
notary:
  enabled: true
  server:
    image:
      repository: goharbor/notary-server-photon
      tag: v0.6.1-v1.7.0
    replicas: 1
  signer:
    image:
      repository: goharbor/notary-signer-photon
      tag: v0.6.1-v1.7.0
    replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
database:
  type: internal
  internal:
    image:
      repository: goharbor/harbor-db
      tag: v1.7.0
    password: "changeit"
    nodeSelector: {}
    tolerations: []
    affinity: {}
  podAnnotations: {}
redis:
  type: internal
  internal:
    image:
      repository: goharbor/redis-photon
      tag: v1.7.0
    nodeSelector: {}
    tolerations: []
    affinity: {}
  podAnnotations: {}
```

Harbor needs a database: the internal harbor-db, which is PostgreSQL in Harbor 1.7. We store its data on a GlusterFS volume so that it is not lost when the pod is rescheduled to another node.

```shell
[root@node-01 harbor]# vim pvc-harbor.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-harbor
spec:
  storageClassName: gluster-heketi
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 50Gi
```

```shell
[root@node-01 harbor]# kubectl apply -f pvc-harbor.yaml
[root@node-01 harbor]# helm install --name harbor harbor-helm-v1.0.0.tar.gz -f values.yaml
```

If the installation fails, delete the release with `helm del --purge harbor` and install it again (Helm 2 syntax).

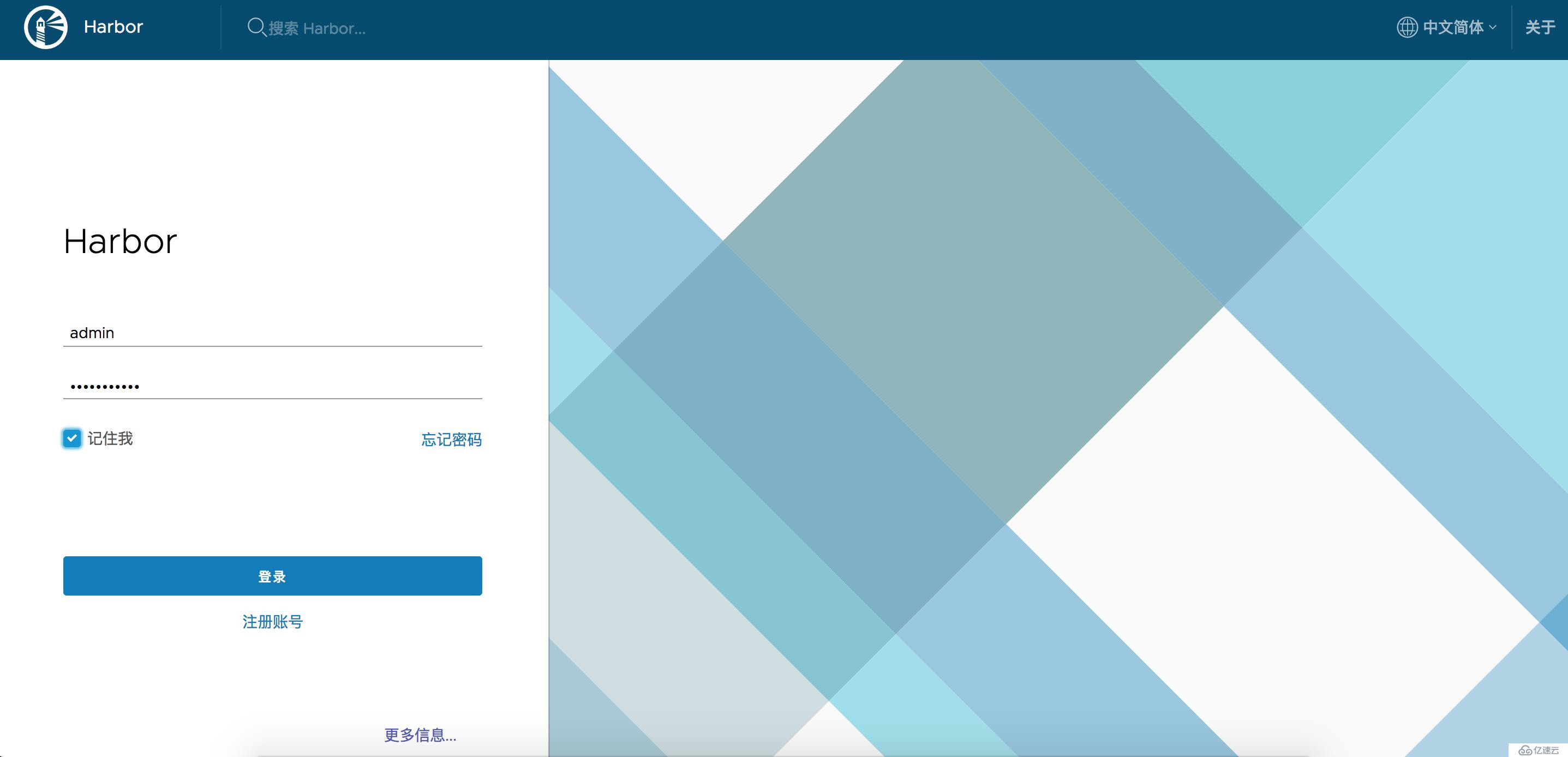

After a while, all Harbor pods are running and Harbor can be accessed. The default login is admin/Harbor12345. The initial admin password is set by harborAdminPassword in values.yaml.

```shell
[root@node-01 ~]# kubectl get pod
NAME                                           READY   STATUS    RESTARTS   AGE
harbor-harbor-adminserver-7fffc7bf4d-vj845     1/1     Running   1          15d
harbor-harbor-chartmuseum-bdf64f899-brnww      1/1     Running   0          15d
harbor-harbor-clair-8457c45dd8-9rgq8           1/1     Running   1          15d
harbor-harbor-core-7fc454c6d8-b6kvs            1/1     Running   1          15d
harbor-harbor-database-0                       1/1     Running   0          15d
harbor-harbor-jobservice-7895949d6b-zbwkf      1/1     Running   1          15d
harbor-harbor-notary-server-57dd94bf56-txdkl   1/1     Running   0          15d
harbor-harbor-notary-signer-5d64c5bf8d-kppts   1/1     Running   0          15d
harbor-harbor-portal-648c56499f-g28rz          1/1     Running   0          15d
harbor-harbor-redis-0                          1/1     Running   0          15d
harbor-harbor-registry-5cd9c49489-r92ph        2/2     Running   0          15d
```
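To check the listing above without reading every row, a small awk filter can report pods whose READY count is incomplete or whose STATUS is not Running. This sketch assumes the default `kubectl get pod` column order (NAME, READY, STATUS, ...). The function name and the unhealthy sample row are hypothetical. Pods of finished Jobs (STATUS Completed) would also be reported, but the Harbor release here has none.

```shell
#!/usr/bin/env bash
# Sketch: report pods that are not fully ready in `kubectl get pod` output.
# Expects the default columns NAME READY STATUS ... on stdin.
set -eu

not_ready_pods() {
  # NR > 1 skips the header. READY is "ready/total"; a pod is reported if the
  # two numbers differ or if STATUS is anything other than Running.
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") print $1
       }'
}

# Sample input: two rows from the listing above plus one hypothetical
# unhealthy pod; only the hypothetical pod is printed.
not_ready_pods <<'EOF'
NAME                                      READY   STATUS             RESTARTS   AGE
harbor-harbor-core-7fc454c6d8-b6kvs       1/1     Running            1          15d
harbor-harbor-registry-5cd9c49489-r92ph   2/2     Running            0          15d
harbor-harbor-example-0                   0/1     CrashLoopBackOff   3          5m
EOF
```

On the cluster, run `kubectl get pod | not_ready_pods`. Empty output means every pod is up.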

Next, create a private project named test in Harbor for testing.

```shell
# Create the certificate directory for the registry on node-01 .. node-06
for n in $(seq -w 01 06); do ssh node-$n "mkdir -p /etc/docker/certs.d/h.cnlinux.club"; done
# Copy the Harbor CA certificate downloaded from Harbor to /etc/docker/certs.d/h.cnlinux.club/ on every node
for n in $(seq -w 01 06); do scp ca.crt node-$n:/etc/docker/certs.d/h.cnlinux.club/; done
```

Log in to Harbor on node-06. After a successful login, the credentials are saved in ~/.docker/config.json.

```shell
[root@node-06 ~]# docker login h.cnlinux.club
Username: admin
Password:
Login Succeeded
[root@node-06 ~]# cat .docker/config.json
{
    "auths": {
        "h.cnlinux.club": {
            "auth": "YWRtaW46SGFyYm9yMTIzNDU="
        }
    }
}
```

Then pull an nginx image, retag it into the test project and push it:

```shell
[root@node-06 ~]# docker pull nginx:latest
[root@node-06 ~]# docker tag nginx:latest h.cnlinux.club/test/nginx:latest
[root@node-06 ~]# docker push h.cnlinux.club/test/nginx:latest
```
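A dry-run helper makes the two ca.crt loops repeatable: it prints the commands first so you can check them. Docker reads a registry's CA from `/etc/docker/certs.d/<registry-host>/ca.crt`, so the directory name must match the hostname used with `docker login` and `docker push` exactly. This sketch assumes the node-01 .. node-06 naming from this article and SSH access from the admin host. `ca_distribution_commands` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) the commands that copy the Harbor CA certificate to
# each node. Docker trusts a registry's CA from
# /etc/docker/certs.d/<registry-host>/ca.crt.
set -eu

REGISTRY_HOST="h.cnlinux.club"

# Arguments: registry host, first and last node number (plain integers,
# without leading zeros). Node names are zero-padded: node-01, node-02, ...
ca_distribution_commands() {
  local host="$1" i="$2" last="$3" n
  while [ "$i" -le "$last" ]; do
    n="$(printf '%02d' "$i")"
    echo "ssh node-$n 'mkdir -p /etc/docker/certs.d/$host'"
    echo "scp ca.crt node-$n:/etc/docker/certs.d/$host/ca.crt"
    i=$((i + 1))
  done
}

ca_distribution_commands "$REGISTRY_HOST" 1 6
```

Once the printed commands look right, pipe them into `sh` to execute them.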

Question: if my Kubernetes cluster has many nodes, do I have to log in on every node before it can pull images from Harbor? Wouldn't that be very tedious?

kubernetes.io/dockerconfigjson就是用来解决这种问题的。[root@node-06 ~]# cat .docker/config.json |base64

ewoJImF1dGhzIjogewoJCSJoLmNubGludXguY2x1YiI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOC4wNi4xLWNlIChsaW51eCkiCgl9Cn0=apiVersion: v1

kind: Secret

metadata:

name: harbor-registry-secret

namespace: default

data:

.dockerconfigjson: ewoJImF1dGhzIjogewoJCSJoLmNubGludXguY2x1YiI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOC4wNi4xLWNlIChsaW51eCkiCgl9Cn0=

type: kubernetes.io/dockerconfigjson[root@node-01 ~]# kubectl create -f harbor-registry-secret.yaml

secret/harbor-registry-secret created10.31.90.200。apiVersion: apps/v1

kind: Deployment

metadata:

name: deploy-nginx

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: h.cnlinux.club/test/nginx:latest

ports:

- containerPort: 80

imagePullSecrets:

- name: harbor-registry-secret

---

apiVersion: v1

kind: Service

metadata:

name: nginx

spec:

selector:

app: nginx

ports:

- name: nginx

protocol: TCP

port: 80

targetPort: 80

type: ClusterIP

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: nginx

annotations:

# nginx.ingress.kubernetes.io/rewrite-target: /

kubernetes.io/ingress.class: nginx

spec:

rules:

- host: nginx.cnlinux.club

http:

paths:

- path:

backend:

serviceName: nginx

servicePort: 80[root@node-01 ~]# kubectl get pod -o wide|grep nginx

deploy-nginx-647f9649f5-88mkt 1/1 Running 0 2m41s 10.34.0.5 node-06 <none> <none>

deploy-nginx-647f9649f5-9z842 1/1 Running 0 2m41s 10.40.0.5 node-04 <none> <none>

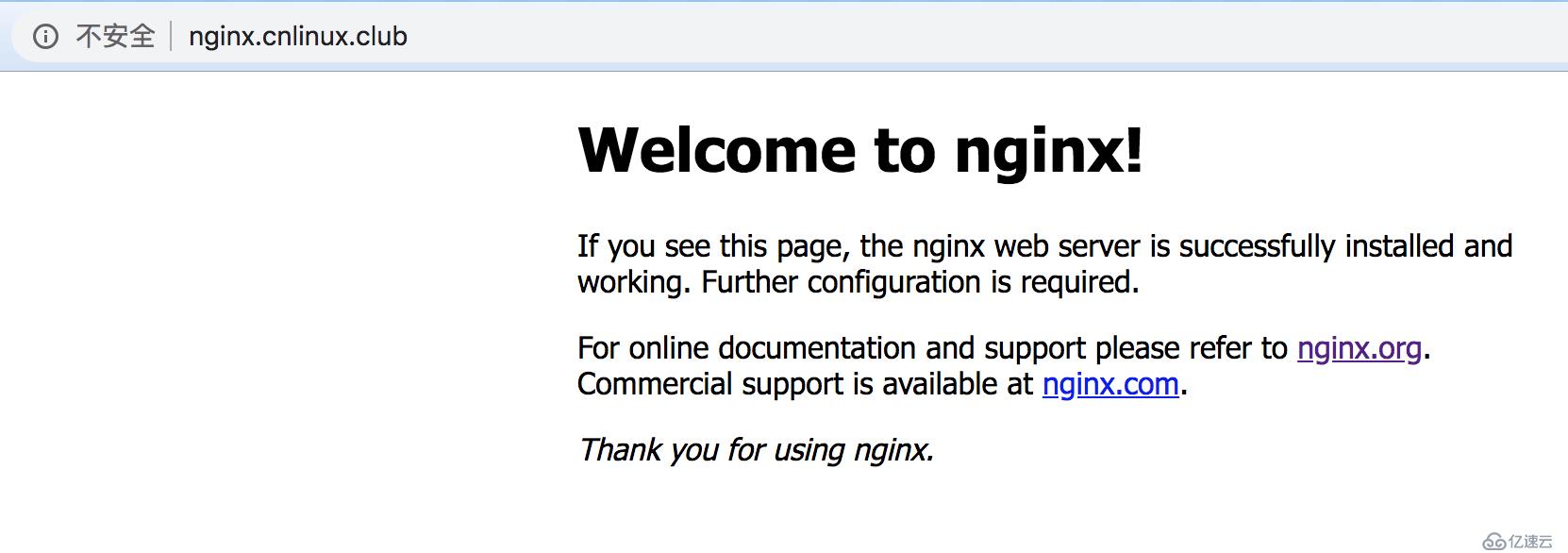

deploy-nginx-647f9649f5-w44ck 1/1 Running 0 2m41s 10.46.0.6 node-05 <none> <none>最后我们访问http://nginx.cnlinux.club,至此所有的都已完成。
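The `.dockerconfigjson` value in the Secret above was copied from a node where `docker login` had already been run. Secret data is only base64-encoded, not encrypted, so you can build the same kind of value straight from a username and password. The sketch below works on that basis and reuses the Secret name, registry host and Harbor's default password from this article. The function names are hypothetical. The built-in `kubectl create secret docker-registry harbor-registry-secret --docker-server=h.cnlinux.club --docker-username=admin --docker-password=...` creates an equivalent Secret without a hand-written manifest.

```shell
#!/usr/bin/env bash
# Sketch: build a kubernetes.io/dockerconfigjson Secret without `docker login`.
# Assumes the registry host, Secret name and default admin password used in
# this article; replace the password with a real one.
set -eu

# Single-line base64 (GNU base64 wraps long output at 76 columns by default).
b64() { base64 | tr -d '\n'; }

# Minimal Docker config: only the "auths" section is needed for image pulls.
# "auth" is base64("user:password").
docker_config_json() {
  local registry="$1" user="$2" pass="$3" auth
  auth="$(printf '%s:%s' "$user" "$pass" | b64)"
  printf '{"auths":{"%s":{"auth":"%s"}}}' "$registry" "$auth"
}

# Print the Secret manifest; pipe it to `kubectl apply -f -` to create it.
registry_secret_manifest() {
  local name="$1" registry="$2" user="$3" pass="$4"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: default
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: $(docker_config_json "$registry" "$user" "$pass" | b64)
EOF
}

registry_secret_manifest harbor-registry-secret h.cnlinux.club admin Harbor12345
```

For admin/Harbor12345 the inner `auth` value matches the `YWRtaW46SGFyYm9yMTIzNDU=` shown in config.json earlier, which confirms it is plain base64 of `user:password`.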

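I will keep publishing the remaining Kubernetes articles. If you found this useful, please follow and like, and if you have any questions, leave me a comment below. Thank you!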
