您好,登录后才能下订单哦!

在Oracle中,对于数据库的修改操作都会记录redo,那么不同的操作会产生多少redo呢?可以通过以下一些方式来查询来统计产生的redo日志量。

(1)SQL*Plus中使用AUTOTRACE的使用。

当在SQL*Plus中启用autotrace跟踪后,在执行了特定的DML语句时,Oracle会显示该语句的统计信息,其中,redo Size一栏表示的就是该操作产生的redo的数量,其单位为Bytes:

SCOTT@seiang11g>set autotrace traceonly statistics

注意:如果在启动autotrace跟踪的时候,出现如下报错:SP2-0618:

Cannot find the Session Identifier.

Check PLUSTRACE role is enabled.

解决方法请参考另一篇博文:SP2-0618: Cannot find the Session Identifier.Check PLUSTRACE role is enabled

SCOTT@seiang11g>create table emp1 as select * from emp;

Table created.

SCOTT@seiang11g>

SCOTT@seiang11g>insert into emp1 select * from emp1;

14 rows created.

Statistics

----------------------------------------------------------

15 recursive calls

22 db block gets

33 consistent gets

5 physical reads

1872 redo size

834 bytes sent via SQL*Net to client

791 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

2 sorts (memory)

0 sorts (disk)

14 rows processed

(2)通过v$mystat查询。

Oracle通过v$mystat视图记录当前session的统计信息,我们也可以从该视图中查询得到session的redo生成情况:

SCOTT@seiang11g>set autot off

SCOTT@seiang11g>

SCOTT@seiang11g>select a.name,b.value from v$statname a,v$mystat b

2 where a.statistic# = b.statistic# and a.name='redo size';

NAME VALUE

---------------------------------------------------------------- ----------

redo size 29140

SCOTT@seiang11g>

SCOTT@seiang11g>insert into emp1 select * from emp1;

28 rows created.

SCOTT@seiang11g>

SCOTT@seiang11g>select a.name,b.value from v$statname a,v$mystat b

2 where a.statistic# = b.statistic# and a.name='redo size';

NAME VALUE

---------------------------------------------------------------- ----------

redo size 30708

SCOTT@seiang11g>

SCOTT@seiang11g>select 30708-29140 from dual;

30708-29140

-----------

1568

(3)通过v$sysstat查询。

对于数据库全局Redo的生成量,可以通过v$sysstat视图来查询得到:

SYS@seiang11g>select name,value from v$sysstat where name='redo size';

NAME VALUE

---------------------------------------------------------------- ----------

redo size 548518160

从v$sysstat视图中得到的是自数据库实例启动以来的累积日志生成量,可以根据实例启动时间大致估算每天数据库的日志生成量:

SYS@seiang11g>alter session set nls_date_format='yyyy-mm-dd hh34:mi:ss';

Session altered.

SYS@seiang11g>

SYS@seiang11g>select

2 (select value/1024/1024/1024 from v$sysstat where name='redo size'

3 )/

4 (select round(sysdate-

5 (select startup_time from v$instance

6 )) from dual

7 ) redo_gb_per_day

8 from dual;

REDO_GB_PER_DAY

---------------

.102173401

如果数据库运行在归档模式下,由于其他因素的影响,以上Redo生成量并不代表归档日志的大小,但是可以通过一定的加权提供参考。

至于归档日志的生成量,可以通过v$archived_log视图,根据一段时间的归档日志量进行估算得到。该视图中记录了归档日志的主要信息:

SYS@seiang11g>select

name,completion_time,blocks*block_size/1024/1024 MB

2 from v$archived_log where status = 'A';

NAME COMPLETION_TIME MB

-------------------------------------------------- ------------------- ----------

/u01/app/oracle/arch/arch_1_949237404_8.log 2017-07-13 13:37:10 1.74072266

/u01/app/oracle/arch/arch_1_949237404_9.log 2017-09-13 17:09:40 35.9506836

/u01/app/oracle/arch/arch_1_949237404_10.log 2017-09-13 22:00:47 42.2592773

/u01/app/oracle/arch/arch_1_949237404_11.log 2017-09-14 05:00:33 36.9936523

/u01/app/oracle/arch/arch_1_949237404_12.log 2017-09-14 19:00:36 36.9335938

/u01/app/oracle/arch/arch_1_949237404_13.log 2017-09-15 01:06:21 35.8876953

/u01/app/oracle/arch/arch_1_949237404_14.log 2017-09-15 15:00:10 35.8935547

/u01/app/oracle/arch/arch_1_949237404_15.log 2017-09-15 22:00:37 37.5634766

/u01/app/oracle/arch/arch_1_949237404_16.log 2017-09-16 06:00:28 42.2397461

/u01/app/oracle/arch/arch_1_949237404_17.log 2017-09-16 14:00:16 43.9946289

/u01/app/oracle/arch/arch_1_949237404_18.log 2017-09-16 22:00:25 44.0483398

/u01/app/oracle/arch/arch_1_949237404_19.log 2017-09-17 06:00:25 40.4213867

/u01/app/oracle/arch/arch_1_949237404_20.log 2017-09-17 14:00:25 42.0063477

/u01/app/oracle/arch/arch_1_949237404_21.log 2017-09-17 22:00:28 42.7241211

/u01/app/oracle/arch/arch_1_949237404_22.log 2017-09-18 11:00:07 36.0229492

某日全天的日志生成可以通过如下查询计算:

SYS@seiang11g>select trunc(completion_time),

2 sum(Mb)/1024 DAY_GB

3 from

4 (select name,

5 completion_time,

6 blocks*block_size/1024/1024 Mb

7 from v$archived_log

8 where completion_time between trunc(sysdate)-2 and trunc(sysdate)-1

9 )

10 group by trunc(completion_time);

TRUNC(COMPLETION_TI DAY_GB

------------------- ----------

2017-09-16 00:00:00 .127229214

最近日期的日志生成统计:

SYS@seiang11g>select trunc(completion_time),

2 sum(mb)/1024 day_gb

3 from

4 (select name,

5 completion_time,

6 blocks*block_size/1024/1024 mb

7 from v$archived_log

8 )

9 group by trunc(completion_time);

TRUNC(COMPLETION_TI DAY_GB

------------------- ----------

2017-09-15 00:00:00 .10678196

2017-09-18 00:00:00 .035178661

2017-09-13 00:00:00 .076376915

2017-09-17 00:00:00 .122218609

2017-07-13 00:00:00 .065961361

2017-09-16 00:00:00 .127229214

2017-09-14 00:00:00 .072194576

根据每日归档的生成量,我们也可以反过来估计每日的数据库活动性及周期性,并决定空间分配等问题。

拓展:

(一)以下脚本可以用于列出最近Oracle数据库每小时估算的redo重做日志产生量,因为估算数据来源于archivelog的产生量和大小,所以数据是近似值,可供参考:

WITH times AS

(SELECT /*+ MATERIALIZE */

hour_end_time

FROM (SELECT (TRUNC(SYSDATE, 'HH') + (2 / 24)) - (ROWNUM / 24) hour_end_time

FROM DUAL

CONNECT BY ROWNUM <= (1 * 24) + 3),

v$database

WHERE log_mode = 'ARCHIVELOG')

SELECT hour_end_time, NVL(ROUND(SUM(size_mb), 3), 0) size_mb, i.instance_name

FROM(

SELECT hour_end_time, CASE WHEN(hour_end_time - (1 / 24)) > lag_next_time THEN(next_time + (1 / 24) - hour_end_time) * (size_mb / (next_time - lag_next_time)) ELSE 0 END + CASE WHEN hour_end_time < lead_next_time THEN(hour_end_time - next_time) * (lead_size_mb / (lead_next_time - next_time)) ELSE 0 END + CASE WHEN lag_next_time > (hour_end_time - (1 / 24)) THEN size_mb ELSE 0 END + CASE WHEN next_time IS NULL THEN(1 / 24) * LAST_VALUE(CASE WHEN next_time IS NOT NULL AND lag_next_time IS NULL THEN 0 ELSE(size_mb / (next_time - lag_next_time)) END IGNORE NULLS) OVER(

ORDER BY hour_end_time DESC, next_time DESC) ELSE 0 END size_mb

FROM(

SELECT t.hour_end_time, arc.next_time, arc.lag_next_time, LEAD(arc.next_time) OVER(

ORDER BY arc.next_time ASC) lead_next_time, arc.size_mb, LEAD(arc.size_mb) OVER(

ORDER BY arc.next_time ASC) lead_size_mb

FROM times t,(

SELECT next_time, size_mb, LAG(next_time) OVER(

ORDER BY next_time) lag_next_time

FROM(

SELECT next_time, SUM(size_mb) size_mb

FROM(

SELECT DISTINCT a.sequence#, a.next_time, ROUND(a.blocks * a.block_size / 1024 / 1024) size_mb

FROM v$archived_log a,(

SELECT /*+ no_merge */

CASE WHEN TO_NUMBER(pt.VALUE) = 0 THEN 1 ELSE TO_NUMBER(pt.VALUE) END VALUE

FROM v$parameter pt

WHERE pt.name = 'thread') pt

WHERE a.next_time > SYSDATE - 3 AND a.thread# = pt.VALUE AND ROUND(a.blocks * a.block_size / 1024 / 1024) > 0)

GROUP BY next_time)) arc

WHERE t.hour_end_time = (TRUNC(arc.next_time(+), 'HH') + (1 / 24)))

WHERE hour_end_time > TRUNC(SYSDATE, 'HH') - 1 - (1 / 24)), v$instance i

WHERE hour_end_time <= TRUNC(SYSDATE, 'HH')

GROUP BY hour_end_time, i.instance_name

ORDER BY hour_end_time

/

执行结果:

HOUR_END_TIME SIZE_MB INSTANCE_NAME

------------------- ---------- ----------------

2017-09-17 14:00:00 5.25 seiang11g

2017-09-17 15:00:00 5.374 seiang11g

2017-09-17 16:00:00 5.374 seiang11g

2017-09-17 17:00:00 5.374 seiang11g

2017-09-17 18:00:00 5.374 seiang11g

2017-09-17 19:00:00 5.374 seiang11g

2017-09-17 20:00:00 5.374 seiang11g

2017-09-17 21:00:00 5.374 seiang11g

2017-09-17 22:00:00 5.374 seiang11g

2017-09-17 23:00:00 2.79 seiang11g

2017-09-18 00:00:00 2.77 seiang11g

2017-09-18 01:00:00 2.77 seiang11g

2017-09-18 02:00:00 2.77 seiang11g

2017-09-18 03:00:00 2.77 seiang11g

2017-09-18 04:00:00 2.77 seiang11g

2017-09-18 05:00:00 2.77 seiang11g

2017-09-18 06:00:00 2.77 seiang11g

2017-09-18 07:00:00 2.77 seiang11g

2017-09-18 08:00:00 2.77 seiang11g

2017-09-18 09:00:00 2.77 seiang11g

2017-09-18 10:00:00 2.77 seiang11g

2017-09-18 11:00:00 2.77 seiang11g

2017-09-18 12:00:00 .005 seiang11g

2017-09-18 13:00:00 0 seiang11g

2017-09-18 14:00:00 0 seiang11g

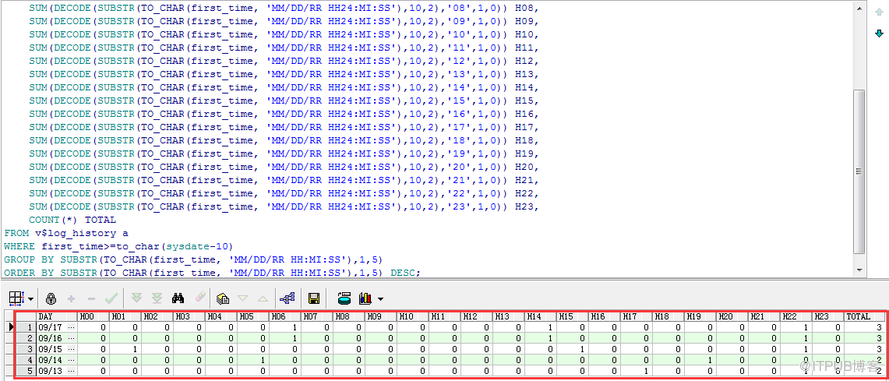

(二)Oracle查询最近几天每小时归档日志产生数量的脚本,脚本内容如下所示:

SELECT SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH:MI:SS'),1,5) Day,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'00',1,0)) H00,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'01',1,0)) H01,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'02',1,0)) H02,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'03',1,0)) H03,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'04',1,0)) H04,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'05',1,0)) H05,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'06',1,0)) H06,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'07',1,0)) H07,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'08',1,0)) H08,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'09',1,0)) H09,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'10',1,0)) H10,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'11',1,0)) H11,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'12',1,0)) H12,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'13',1,0)) H13,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'14',1,0)) H14,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'15',1,0)) H15,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'16',1,0)) H16,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'17',1,0)) H17,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'18',1,0)) H18,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'19',1,0)) H19,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'20',1,0)) H20,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'21',1,0)) H21,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'22',1,0)) H22,

SUM(DECODE(SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH24:MI:SS'),10,2),'23',1,0)) H23,

COUNT(*) TOTAL

FROM v$log_history a

WHERE first_time>=to_char(sysdate-10)

GROUP BY SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH:MI:SS'),1,5)

ORDER BY SUBSTR(TO_CHAR(first_time, 'MM/DD/RR HH:MI:SS'),1,5) DESC;

修改天数,可以修改WHERE first_time>=to_char(sysdate-11)

执行结果:

参考链接:

http://www.dbtan.com/2009/12/how-many-redo-has-produced.html

http://www.askmaclean.com/archives/script%E5%88%97%E5%87%BAoracle%E6%AF%8F%E5%B0%8F%E6%97%B6%E7%9A%84redo%E9%87%8D%E5%81%9A%E6%97%A5%E5%BF%97%E4%BA%A7%E7%94%9F%E9%87%8F.html

http://www.jb51.net/article/119200.htm

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。