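How do you scrape JD.com (京东) with a Python crawler? Many beginners get stuck on this, so this article walks through one working approach: request a search-results page with requests, parse the HTML with lxml, and pull each product's name, price, comment field, and link out with XPath.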

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# @File : HtmlParser.py

# @Author: 赵路仓

# @Date : 2020/3/17

# @Desc :

# @Contact : 398333404@qq.com

import json

from lxml import etree

import requests

from bs4 import BeautifulSoup

url="https://search.jd.com/Search?keyword=ps4&enc=utf-8&wq=ps4&pvid=cf0158c8664442799c1146a461478c9c"

head={

'authority': 'search.jd.com',

'method': 'GET',

'path': '/s_new.php?keyword=%E6%89%8B%E6%9C%BA&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&wq=%E6%89%8B%E6%9C%BA&cid2=653&cid3=655&page=4&s=84&scrolling=y&log_id=1529828108.22071&tpl=3_M&show_items=7651927,7367120,7056868,7419252,6001239,5934182,4554969,3893501,7421462,6577495,26480543553,7345757,4483120,6176077,6932795,7336429,5963066,5283387,25722468892,7425622,4768461',

'scheme': 'https',

'referer': 'https://search.jd.com/Search?keyword=%E6%89%8B%E6%9C%BA&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&wq=%E6%89%8B%E6%9C%BA&cid2=653&cid3=655&page=3&s=58&click=0',

'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36',

'x-requested-with': 'XMLHttpRequest',

}

def page(page):

print("开始")

url = "https://search.jd.com/Search?keyword=ps4&enc=utf-8&qrst=1&rt=1&stop=1&vt=1&wq=ps4&page="+page+"&s=181&click=0"

r=requests.get(url,timeout=3,headers=head)

r.encoding=r.apparent_encoding

# print(r.text)

b=BeautifulSoup(r.text,"html.parser")

#print(b.prettify())

_element = etree.HTML(r.text)

datas = _element.xpath('//li[contains(@class,"gl-item")]')

print(datas)

for data in datas:

p_price = data.xpath('div/div[@class="p-price"]/strong/i/text()')

p_comment = data.xpath('div/div[5]/strong/a/text()')

p_name = data.xpath('div/div[@class="p-name p-name-type-2"]/a/em/text()')

p_href = data.xpath('div/div[@class="p-name p-name-type-2"]/a/@href')

comment=' '.join(p_comment)

name = ' '.join(p_name)

price = ' '.join(p_price)

href = ' '.join(p_href)

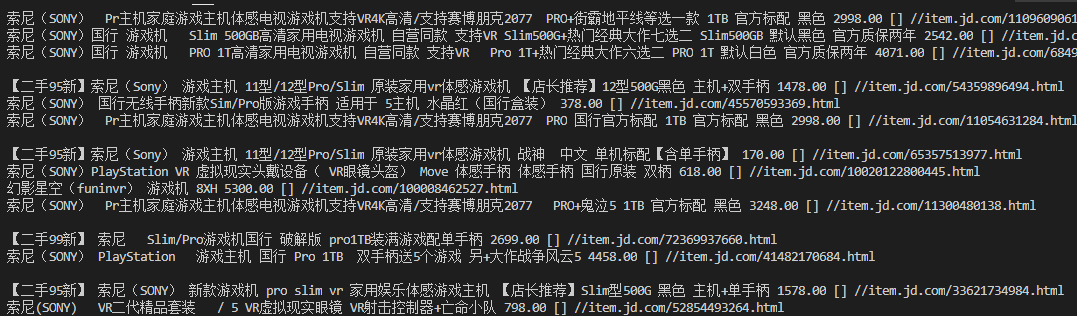

print(name,price,p_comment,href)

if __name__=="__main__":

page("5")
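The script above assembles its search URL by string concatenation, which only works while the keyword is plain ASCII. As a rough sketch (not part of the original script), the same URL can be built with the standard library's `urlencode`, which percent-encodes keywords such as 手机. The helper names `build_search_url` and `absolute_href` are hypothetical, the query parameters are copied unchanged from the script, and the example item link is made up for illustration. The second helper assumes the scraped `href` values may be protocol-relative (starting with `//`), in which case `urljoin` can turn them into full URLs.

```python
from urllib.parse import urlencode, urljoin

# Same endpoint as the script above.
SEARCH_URL = "https://search.jd.com/Search"


def build_search_url(keyword, page_no):
    """Build the search URL, percent-encoding the keyword (hypothetical helper)."""
    params = {
        "keyword": keyword,
        "enc": "utf-8",
        "qrst": 1,
        "rt": 1,
        "stop": 1,
        "vt": 1,
        "wq": keyword,
        "page": page_no,
        "s": 181,      # copied unchanged from the original URL
        "click": 0,
    }
    return SEARCH_URL + "?" + urlencode(params)


def absolute_href(href, base="https://search.jd.com/"):
    """Resolve a relative or protocol-relative link against the search page."""
    return urljoin(base, href)


if __name__ == "__main__":
    print(build_search_url("ps4", 5))
    print(build_search_url("手机", 3))
    # Made-up item id, for illustration only.
    print(absolute_href("//item.jd.com/100000000000.html"))
```

For the keyword `ps4` and page `5`, this yields the same URL string that the original concatenation produces, so it could be dropped into `page()` without changing the request.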

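After reading the above, you should have a working approach for scraping JD.com with a Python crawler. If you want to learn more or explore related topics, follow the 亿速云 industry news channel. Thanks for reading!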
