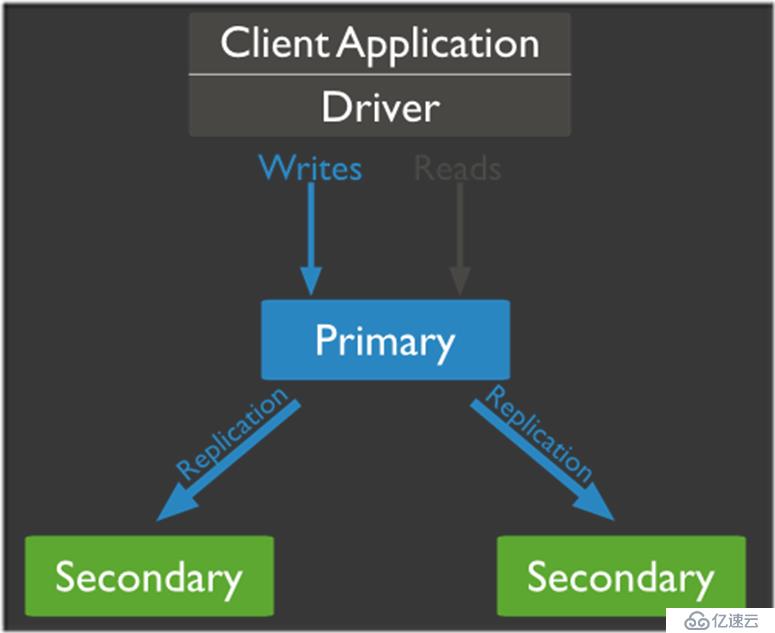

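A MongoDB replica set is a group of mongod instances (processes) made up of one Primary node and several Secondary nodes. The MongoDB driver (client) sends all writes to the Primary, and the Secondaries sync the written data from the Primary so that every member of the set stores the same data set, which provides high availability.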

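Ensures data safety

High data availability (24*7)

Disaster recovery

Maintenance without downtime (backups, index rebuilds, compaction)

Distributed reads

A cluster of N nodes

Any node can serve as the primary

All write operations go to the primary

Automatic failover

Automatic recovery

Replica set diagram

Test environment

OS: CentOS 7

MongoDB version: v3.6.7

Procedure

Add three instances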

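mkdir -p /data/mongodb/mongodb{2,3,4}   # create the data directories first

mkdir -p /data/mongodb/logs   # directory for the log files

touch /data/mongodb/logs/mongodb{2,3,4}.log   # the log files

chmod 777 /data/mongodb/logs/*.log   # make the log files writable

cd /data/mongodb/   # remember to check that the commands took effect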

[root@cent mongodb]# ls

logs mongodb2 mongodb3 mongodb4

[root@cent mongodb]# cd logs/

[root@cent logs]# ll

total 0

-rwxrwxrwx. 1 root root 0 Sep 12 09:48 mongodb2.log

-rwxrwxrwx. 1 root root 0 Sep 12 09:48 mongodb3.log

-rwxrwxrwx. 1 root root 0 Sep 12 09:48 mongodb4.log

Edit the configuration files for instances 2, 3, and 4

vim /etc/mongod2.conf

Change it as follows:

systemLog:
  destination: file
  logAppend: true
  path:    # for instances 3 and 4, use mongodb3.log and mongodb4.log
storage:
  dbPath:    # for instances 3 and 4, use mongodb3 and mongodb4
  journal:
    enabled: true
net:
  port:    # for instances 3 and 4, use 27019 and 27020
  bindIp: 0.0.0.0
replication:
  replSetName: yang
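The three configuration files differ only in the log path, the data path, and the port. As a rough sketch in plain JavaScript (runnable with Node.js), the hypothetical helper `renderMongodConf` below generates the text for instance n. It assumes the directory layout created with mkdir and touch earlier, and it assumes that instance n listens on port 27016 + n (27018, 27019, 27020); those values are inferred from the commands in this walkthrough, not copied from the original file.

```javascript
// Hypothetical helper: build the YAML text of /etc/mongod<n>.conf.
// Assumptions: paths match the mkdir/touch commands above, port = 27016 + n.
function renderMongodConf(n, replSetName) {
  return [
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: /data/mongodb/logs/mongodb${n}.log`,
    "storage:",
    `  dbPath: /data/mongodb/mongodb${n}`,
    "  journal:",
    "    enabled: true",
    "net:",
    `  port: ${27016 + n}`,
    "  bindIp: 0.0.0.0",
    "replication:",
    `  replSetName: ${replSetName}`,
  ].join("\n") + "\n";
}

// Print the configuration text for instances 2, 3 and 4.
for (const n of [2, 3, 4]) {
  console.log(`# /etc/mongod${n}.conf`);
  console.log(renderMongodConf(n, "yang"));
}
```

The sketch only prints the text; writing it to /etc is left to the reader so that existing files are not overwritten by accident.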

Start and verify

Start the services

[root@cent logs]# mongod -f /etc/mongod2.conf

about to fork child process, waiting until server is ready for connections.

forked process: 83795

child process started successfully, parent exiting

[root@cent logs]# mongod -f /etc/mongod3.conf

about to fork child process, waiting until server is ready for connections.

forked process: 83823

child process started successfully, parent exiting

[root@cent logs]# mongod -f /etc/mongod4.conf

about to fork child process, waiting until server is ready for connections.

forked process: 83851

child process started successfully, parent exiting

[root@cent logs]# netstat -ntap   # check the ports; 27017, 27018, 27019, and 27020 should all be listening

Login test

[root@cent logs]# mongo --port 27019   # log in on a specific port

MongoDB shell version v3.6.7

connecting to: mongodb://127.0.0.1:27019/

MongoDB server version: 3.6.7

>

Replica set operations

Define the replica set

[root@cent logs]# mongo   # connects to port 27017

> cfg={"_id":"yang","members":[{"_id":0,"host":"192.168.137.11:27017"},{"_id":1,"host":"192.168.137.11:27018"},{"_id":2,"host":"192.168.137.11:27019"}]}   // define the replica set

{ "_id" : "yang", "members" : [ { "_id" : 0, "host" : "192.168.137.11:27017" }, { "_id" : 1, "host" : "192.168.137.11:27018" }, { "_id" : 2, "host" : "192.168.137.11:27019" } ] }

Initiate it

> rs.initiate(cfg)

{ "ok" : 1, "operationTime" : Timestamp(1536848891, 1), "$clusterTime" : { "clusterTime" : Timestamp(1536848891, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } }
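Typing the configuration document by hand is error-prone once there are more than a few members. The sketch below builds the same document from a host and a list of ports. `buildReplSetConfig` is a hypothetical helper, not part of the mongo shell; the result is a plain object of the shape that rs.initiate() takes.

```javascript
// Hypothetical helper: build a replica set config document for members
// that all run on one host and differ only by port.
function buildReplSetConfig(setName, host, ports) {
  return {
    _id: setName,
    members: ports.map((port, index) => ({
      _id: index,
      host: `${host}:${port}`,
    })),
  };
}

// Same set name, address and ports as in the walkthrough.
const cfg = buildReplSetConfig("yang", "192.168.137.11", [27017, 27018, 27019]);
console.log(JSON.stringify(cfg, null, 2));
```

Each member gets an `_id` equal to its position in the port list, which matches the numbering used above.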

View replica set information

yang:OTHER> db.stats()

{

"db" : "test",

"collections" : 0,

"views" : 0,

"objects" : 0,

"avgObjSize" : 0,

"dataSize" : 0,

"storageSize" : 0,

"numExtents" : 0,

"indexes" : 0,

"indexSize" : 0,

"fileSize" : 0,

"fsUsedSize" : 0,

"fsTotalSize" : 0,

"ok" : 1,

"operationTime" : Timestamp(1536741495, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536741495, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

View the replica set status

yang:SECONDARY> rs.status()

{

"set" : "yang",

"date" : ISODate("2018-09-12T08:58:56.358Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

}

},

"members" : [{

"_id" : 0,

"name" : "192.168.137.11:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 24741,

"optime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-12T08:58:48Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1536741506, 1),

"electionDate" : ISODate("2018-09-12T08:38:26Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.137.11:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1240,

"optime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-12T08:58:48Z"),

"optimeDurableDate" : ISODate("2018-09-12T08:58:48Z"),

"lastHeartbeat" : ISODate("2018-09-12T08:58:54.947Z"),

"lastHeartbeatRecv" : ISODate("2018-09-12T08:58:55.699Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.137.11:27017",

"syncSourceHost" : "192.168.137.11:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.137.11:27019",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1240,

"optime" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1536742728, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-09-12T08:58:48Z"),

"optimeDurableDate" : ISODate("2018-09-12T08:58:48Z"),

"lastHeartbeat" : ISODate("2018-09-12T08:58:54.947Z"),

"lastHeartbeatRecv" : ISODate("2018-09-12T08:58:55.760Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.137.11:27017",

"syncSourceHost" : "192.168.137.11:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}],

"ok" : 1,

"operationTime" : Timestamp(1536742728, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536742728, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Adding and removing members

The node being added should not hold any data; otherwise that data may be lost.

Add a node on port 27020

yang:PRIMARY> rs.add("192.168.137.11:27020")

{

"ok" : 1,

"operationTime" : Timestamp(1536849195, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536849195, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Verify

yang:PRIMARY> rs.status()

{

"_id" : 3,

"name" : "192.168.137.11:27020", // 27020 now appears at the end of the member list

Remove 27020

yang:PRIMARY> rs.remove("192.168.137.11:27020")

{

"ok" : 1,

"operationTime" : Timestamp(1536849620, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536849620, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

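Failover

Note: each MongoDB instance corresponds to one process, so killing the process stops the node. This is how a failure is simulated here.

List the processes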

[root@cent mongodb]# ps aux | grep mongod

root 74970 0.4 3.1 1580728 59392 ? Sl 21:47 0:15 mongod -f /etc/mongod.conf

root 75510 0.4 2.8 1465952 53984 ? Sl 22:16 0:07 mongod -f /etc/mongod2.conf

root 75538 0.4 2.9 1501348 54496 ? Sl 22:17 0:07 mongod -f /etc/mongod3.conf

root 75566 0.3 2.7 1444652 52144 ? Sl 22:17 0:06 mongod -f /etc/mongod4.conf

Kill the primary (27017)

[root@cent mongodb]# kill -9 74970

[root@cent mongodb]# ps aux | grep mongod

root 75510 0.4 2.9 1465952 55016 ? Sl 22:16 0:10 mongod -f /etc/mongod2.conf

root 75538 0.4 2.9 1493152 55340 ? Sl 22:17 0:10 mongod -f /etc/mongod3.conf

root 75566 0.3 2.7 1444652 52168 ? Sl 22:17 0:08 mongod -f /etc/mongod4.conf

Log in to 27018 and check

yang:SECONDARY> rs.status()

"_id" : 0,

"name" : "192.168.137.11:27017",

"health" : 0, // health is 0: the original primary is down

"_id" : 2,

"name" : "192.168.137.11:27019",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", // and this node has taken over as primary
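Scanning a long rs.status() document by eye is tedious. As a small sketch, the hypothetical helper `summarizeMembers` below picks out the current primary and the unreachable members from an object shaped like that output. The sample data is trimmed to the two members shown in the excerpt above.

```javascript
// Hypothetical helper: summarize the members array of an rs.status() result.
function summarizeMembers(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  const down = status.members
    .filter((m) => m.health === 0)
    .map((m) => m.name);
  return { primary: primary ? primary.name : null, down };
}

// Trimmed sample containing only the fields shown in the excerpt above.
const statusSample = {
  members: [
    { _id: 0, name: "192.168.137.11:27017", health: 0 },
    { _id: 2, name: "192.168.137.11:27019", health: 1, state: 1, stateStr: "PRIMARY" },
  ],
};

console.log(summarizeMembers(statusSample));
```

In the mongo shell the same function could presumably be called as `summarizeMembers(rs.status())`, since rs.status() returns a document with a `members` array.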

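Manual switchover

A manual switchover must be performed on the primary, which is now 27019.

Freeze the node so that it does not stand for election for 30 seconds. Note that rs.freeze() only works on a secondary; run on the primary, it returns the error below.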

[root@cent mongodb]# mongo --port 27019

yang:PRIMARY> rs.freeze(30)

{

"ok" : 0,

"errmsg" : "cannot freeze node when primary or running for election. state: Primary",

"code" : 95,

"codeName" : "NotSecondary",

"operationTime" : Timestamp(1536851239, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536851239, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

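Step down as primary: the node stays a secondary for at least 60 seconds, and it waits up to 30 seconds for a secondary to catch up with the primary's oplog. The shell loses its connection during the step-down, so the network error below is expected.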

yang:PRIMARY> rs.stepDown(60,30)

2018-09-13T23:07:48.655+0800 E QUERY [thread1] Error: error doing query: failed: network error while attempting to run command 'replSetStepDown' on host '127.0.0.1:27019' :

DB.prototype.runCommand@src/mongo/shell/db.js:168:1

DB.prototype.adminCommand@src/mongo/shell/db.js:186:16

rs.stepDown@src/mongo/shell/utils.js:1341:12

@(shell):1:1

2018-09-13T23:07:48.658+0800 I NETWORK [thread1] trying reconnect to 127.0.0.1:27019 (127.0.0.1) failed

2018-09-13T23:07:48.659+0800 I NETWORK [thread1] reconnect 127.0.0.1:27019 (127.0.0.1) ok

yang:SECONDARY> // the node has become a secondary

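Node elections

A replica set is initialized with the replSetInitiate command (or rs.initiate() in the mongo shell). After initialization, the members start exchanging heartbeats and hold an election for the Primary. The node that wins the votes of a majority of the members becomes the Primary, and the remaining nodes become Secondaries.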
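The "majority" rule is easy to check with a little arithmetic. The sketch below uses two hypothetical helpers and deliberately ignores priorities and other eligibility rules; it only counts votes, under the assumption that a majority means more than half of the configured voting members.

```javascript
// Votes needed to win an election: more than half of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// A primary can be elected only if the reachable voters form a majority
// of all configured voters (not just of the ones still running).
function canElectPrimary(votingMembers, reachableVoters) {
  return reachableVoters >= majority(votingMembers);
}

console.log(majority(3));            // 2
console.log(canElectPrimary(3, 2));  // true: one of three nodes is down
console.log(canElectPrimary(4, 2));  // false: two of four voters are down
```

The last line shows why a set with four voting members cannot elect a primary once two of them are down: two votes are short of the three required.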

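Going back to the step where the replica set was defined, the statement is changed slightly here to add priorities and an arbiter node.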

> cfg={"_id":"yang","members":[{"_id":0,"host":"192.168.137.11:27017","priority":100},{"_id":1,"host":"192.168.137.11:27018","priority":100},{"_id":2,"host":"192.168.137.11:27019","priority":0},{"_id":3,"host":"192.168.137.11:27020","arbiterOnly":true}]}

{

"_id" : "yang",

"members" : [

{

"_id" : 0,

"host" : "192.168.137.11:27017",

"priority" : 100 // priority

},

{

"_id" : 1,

"host" : "192.168.137.11:27018",

"priority" : 100 // priority

},

{

"_id" : 2,

"host" : "192.168.137.11:27019",

"priority" : 0 // priority

},

{

"_id" : 3,

"host" : "192.168.137.11:27020",

"arbiterOnly" : true

}

]

}

Initiate with cfg

> rs.initiate(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1536852325, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536852325, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

View the member roles and the oplog

yang:OTHER> rs.isMaster()

{

"hosts" : [ // standard nodes

"192.168.137.11:27017",

"192.168.137.11:27018"

],

"passives" : [ // passive nodes

"192.168.137.11:27019"

],

"arbiters" : [ // arbiter nodes

"192.168.137.11:27020"

],

"setName" : "yang",

"setVersion" : 1,

"ismaster" : false,

"secondary" : true,

"me" : "192.168.137.11:27017",

"lastWrite" : {

"opTime" : {

"ts" : Timestamp(1536852325, 1),

"t" : NumberLong(-1)

},

"lastWriteDate" : ISODate("2018-09-13T15:25:25Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 100000,

"localTime" : ISODate("2018-09-13T15:25:29.008Z"),

"logicalSessionTimeoutMinutes" : 30,

"minWireVersion" : 0,

"maxWireVersion" : 6,

"readOnly" : false,

"ok" : 1,

"operationTime" : Timestamp(1536852325, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1536852325, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Add a collection and run a few basic operations so that the oplog is updated.

yang:SECONDARY> use mood

switched to db mood

yang:PRIMARY> db.info.insert({"id":1,"name":"mark"})

WriteResult({ "nInserted" : 1 })

yang:PRIMARY> db.info.find()

{ "_id" : ObjectId("5b9a8244b4360d88324a69fc"), "id" : 1, "name" : "mark" }

yang:PRIMARY> db.info.update({"id":1},{$set:{"name":"zhangsan"}})

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

yang:PRIMARY> db.info.find()

{ "_id" : ObjectId("5b9a8244b4360d88324a69fc"), "id" : 1, "name" : "zhangsan" }

View the oplog

yang:PRIMARY> use local

switched to db local

yang:PRIMARY> show collections

me

oplog.rs

replset.election

replset.minvalid

startup_log

system.replset

system.rollback.id

yang:PRIMARY> db.oplog.rs.find() // returns many entries

Preemption test

First shut down the primary

[root@cent ~]# mongod -f /etc/mongod.conf --shutdown

killing process with pid: 74970

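Log in to the next node, 27018, and you will find that it has become the primary. Shut down 27018 as well so that both standard nodes are gone, then test whether the passive node becomes the primary.

Log in to the passive node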

yang:SECONDARY> rs.status()

"_id" : 2,

"name" : "192.168.137.11:27019",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

It does not. Now restart the two nodes that were shut down.

[root@cent ~]# mongod -f /etc/mongod2.conf

about to fork child process, waiting until server is ready for connections.

forked process: 77132

child process started successfully, parent exiting

[root@cent ~]# mongod -f /etc/mongod.conf

about to fork child process, waiting until server is ready for connections.

forked process: 77216

child process started successfully, parent exiting

27018 becomes the primary again.

[root@cent ~]# mongo --port 27018

yang:PRIMARY>

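So only the standard nodes compete with each other for the primary role.

Data access

The Primary and the Secondaries sync data through the oplog. After a write completes on the Primary, an oplog entry is written to the special collection local.oplog.rs, and each Secondary continuously fetches new oplog entries from the Primary and applies them.

Because the oplog keeps growing, local.oplog.rs is set up as a capped collection: once it reaches its configured size limit, the oldest entries are deleted. In addition, because an oplog entry may be applied more than once on a Secondary, oplog entries must be idempotent, meaning that applying one repeatedly gives the same result.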
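Idempotence is easier to see with a toy example. The sketch below is not the real oplog format; `applySet` and `applyInc` are hypothetical stand-ins that contrast an entry recording the resulting value (safe to replay) with one recording a relative change (not safe to replay).

```javascript
// Toy oplog entry that records the resulting field values.
function applySet(doc, entry) {
  return Object.assign({}, doc, entry.set);
}

// Toy entry that records a relative change instead.
function applyInc(doc, field, by) {
  return Object.assign({}, doc, { [field]: doc[field] + by });
}

const original = { id: 1, name: "mark", visits: 10 };
const entry = { set: { name: "zhangsan" } };

// Replaying the $set-style entry leaves the document unchanged.
const once = applySet(original, entry);
const twice = applySet(once, entry);
console.log(JSON.stringify(once) === JSON.stringify(twice)); // true

// Replaying an increment changes the result each time.
const incOnce = applyInc(original, "visits", 1);
const incTwice = applyInc(incOnce, "visits", 1);
console.log(incOnce.visits, incTwice.visits); // 11 12
```

This is why a replicated log is generally designed to record outcomes rather than relative operations.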

By default only the primary serves reads. A secondary rejects them and returns the following error.

yang:SECONDARY> show dbs

2018-09-13T23:58:34.112+0800 E QUERY [thread1] Error: listDatabases failed:{

"operationTime" : Timestamp(1536854312, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotMasterNoSlaveOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1536854312, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

} :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:65:1

shellHelper.show@src/mongo/shell/utils.js:849:19

shellHelper@src/mongo/shell/utils.js:739:15

@(shellhelp2):1:1

Run the following command on the secondary to allow reads

yang:SECONDARY> rs.slaveOk()

yang:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

mood 0.000GB

The arbiter does not replicate data from the primary

Only two nodes report replication information; the arbiter on 27020 has none

yang:SECONDARY> rs.printSlaveReplicationInfo()

source: 192.168.137.11:27017

syncedTo: Fri Sep 14 2018 00:03:52 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

source: 192.168.137.11:27019

syncedTo: Fri Sep 14 2018 00:03:52 GMT+0800 (CST)

0 secs (0 hrs) behind the primary
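The "behind the primary" figure can be approximated from the optimeDate fields that rs.status() reports for each member. The sketch below is an approximation for illustration, not the shell's own implementation; `replicationLag` is a hypothetical helper and the sample dates are made up.

```javascript
// Hypothetical helper: lag of each secondary behind the primary, in seconds,
// computed from the optimeDate fields of an rs.status()-shaped object.
function replicationLag(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) return [];
  return status.members
    .filter((m) => m.stateStr === "SECONDARY")
    .map((m) => ({
      name: m.name,
      lagSecs: (primary.optimeDate - m.optimeDate) / 1000,
    }));
}

// Made-up sample: the secondary's last applied operation is 5 seconds older.
const lagSample = {
  members: [
    { name: "192.168.137.11:27018", stateStr: "PRIMARY",
      optimeDate: new Date("2018-09-13T16:03:52Z") },
    { name: "192.168.137.11:27019", stateStr: "SECONDARY",
      optimeDate: new Date("2018-09-13T16:03:47Z") },
  ],
};

console.log(replicationLag(lagSample));
```

An arbiter never appears in the result because it is neither PRIMARY nor SECONDARY, which matches the output above.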

yang:SECONDARY> rs.help() // list the replica set helper commands

rs.status() { replSetGetStatus : 1 } checks repl set status

rs.initiate() { replSetInitiate : null } initiates set with default settings

rs.initiate(cfg) { replSetInitiate : cfg } initiates set with configuration cfg

rs.conf() get the current configuration object from local.system.replset

rs.reconfig(cfg) updates the configuration of a running replica set with cfg (disconnects)

rs.add(hostportstr) add a new member to the set with default attributes (disconnects)

rs.add(membercfgobj) add a new member to the set with extra attributes (disconnects)

rs.addArb(hostportstr) add a new member which is arbiterOnly:true (disconnects)

rs.stepDown([stepdownSecs, catchUpSecs]) step down as primary (disconnects)

rs.syncFrom(hostportstr) make a secondary sync from the given member

rs.freeze(secs) make a node ineligible to become primary for the time specified

rs.remove(hostportstr) remove a host from the replica set (disconnects)

rs.slaveOk() allow queries on secondary nodes

rs.printReplicationInfo() check oplog size and time range

rs.printSlaveReplicationInfo() check replica set members and replication lag

db.isMaster() check who is primary

reconfiguration helpers disconnect from the database so the shell will display

an error, even if the command succeeds.

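To summarize the properties of each node type:

An Arbiter node only votes in elections. It cannot be elected Primary and does not sync data from the Primary.

For example, suppose you deploy a two-node replica set with one Primary and one Secondary. If either node goes down, the replica set can no longer provide service because no Primary can be elected. Adding an Arbiter node lets the set still elect a Primary when one node is down.

An Arbiter stores no data and is a very lightweight service. When a replica set has an even number of members, it is best to add an Arbiter to improve availability.

A Priority0 node has an election priority of 0 and is never elected Primary.

For example, suppose you deploy a replica set across data centers A and B and want the Primary to always be in A. You can set the priority of the members in B to 0 so that the Primary is always a member in A. (Note: with this layout it is best to place the majority of the nodes in A; otherwise a network partition may leave the set unable to elect a Primary.)

In MongoDB 3.0, a replica set can have at most 50 members, of which at most 7 can vote in Primary elections. The remaining members (Vote0) must have their votes property set to 0, meaning they do not vote.

A Hidden node cannot be elected Primary (its priority is 0) and is invisible to the Driver.

Because a Hidden node does not receive requests from the Driver, it can be used for tasks such as backups and offline computation without affecting the service of the replica set.

A Delayed node must have priority 0 and should also be a Hidden node. Its data lags behind the Primary by a configurable interval (for example, 1 hour).

Because the data on a Delayed node lags behind the Primary, when wrong or invalid data is written to the Primary, the Delayed node's data can be used to recover to an earlier point in time.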
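The node types above differ only in a few fields of the member document. The sketch below shows one way to express them, using the field names of the MongoDB 3.6 replica set configuration (`arbiterOnly`, `priority`, `votes`, `hidden`, `slaveDelay`). The builder functions, `checkMemberLimits`, and the `node0`-style host names are hypothetical and exist only for illustration.

```javascript
// Hypothetical builders for the member documents described above.
const arbiter = (id, host) => ({ _id: id, host, arbiterOnly: true });
const priority0 = (id, host) => ({ _id: id, host, priority: 0 });
const nonVoting = (id, host) => ({ _id: id, host, priority: 0, votes: 0 });
const hidden = (id, host) => ({ _id: id, host, priority: 0, hidden: true });
const delayed = (id, host, seconds) =>
  ({ _id: id, host, priority: 0, hidden: true, slaveDelay: seconds });

// Check the limits quoted above: at most 50 members, at most 7 voters.
// A member counts as a voter unless its "votes" field is explicitly 0.
function checkMemberLimits(members) {
  const voters = members.filter((m) => m.votes !== 0).length;
  const problems = [];
  if (members.length > 50) problems.push("more than 50 members");
  if (voters > 7) problems.push("more than 7 voting members");
  return problems;
}

// Eight ordinary members exceed the voter limit ...
const eightMembers = Array.from({ length: 8 }, (_, i) =>
  ({ _id: i, host: `node${i}:27017` }));
console.log(checkMemberLimits(eightMembers));

// ... until one of them is made non-voting.
eightMembers[7] = nonVoting(7, "node7:27017");
console.log(checkMemberLimits(eightMembers));

// A member delayed by one hour.
console.log(delayed(8, "node8:27017", 3600));
```

The first check reports one problem and the second reports none, which mirrors the rule that members beyond the seventh voter must have votes set to 0.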
