1) Start the environment

start-all.sh

2) Check the status

jps

0613 NameNode

10733 DataNode

3455 NodeManager

15423 Jps

11082 ResourceManager

10913 SecondaryNameNode

3) Write the job in Eclipse

1. Write the WordMap class

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordMap extends Mapper<Object, Text, Text, IntWritable> {

    @Override
    protected void map(Object key, Text value, Context context)
            throws IOException, InterruptedException {
        // Split the input line on single spaces and emit (word, 1) for each token.
        String line = value.toString();
        String[] words = line.split(" ");
        for (String str : words) {
            context.write(new Text(str), new IntWritable(1));
        }
    }
}

2. Write the WordReduce class

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text text, Iterable<IntWritable> itrs, Context context)
            throws IOException, InterruptedException {
        // Sum the counts emitted by the mapper for this word.
        int sum = 0;
        for (IntWritable itr : itrs) {
            sum += itr.get();
        }
        context.write(text, new IntWritable(sum));
    }
}
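
To see what the mapper and reducer compute together, the following is a minimal sketch that imitates the same logic in plain Java, with no Hadoop dependency. The class name LocalWordCount and the sample lines are hypothetical and only serve as illustration; the grouping step stands in for the shuffle that Hadoop performs between the two phases.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: a local imitation of the word-count job, not part of Hadoop.
class LocalWordCount {

    static Map<String, Integer> count(List<String> lines) {
        // "Map" phase plus shuffle: emit a 1 for every space-separated token
        // and group the emitted values by word.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
            }
        }

        // "Reduce" phase: sum the grouped values for each word.
        Map<String, Integer> totals = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            int sum = 0;
            for (int one : entry.getValue()) {
                sum += one;
            }
            totals.put(entry.getKey(), sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        // Illustrative input only.
        System.out.println(count(Arrays.asList("hello world", "hello hadoop")));
        // prints {hadoop=1, hello=2, world=1}
    }
}
```

The sorted map mirrors the fact that reducer keys arrive in sorted order, which is why the words in the result file appear alphabetically.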

3. Write the WordCount class

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(conf);

        Job job = Job.getInstance(conf);
        job.setJobName("wc");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(WordMap.class);
        job.setReducerClass(WordReduce.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Input and output paths are hardcoded; command-line arguments are not read.
        Path input = new Path("/word.txt");
        Path output = new Path("/out");
        FileInputFormat.addInputPath(job, input);

        // The job fails if the output directory already exists, so remove it first.
        if (fs.exists(output)) {
            fs.delete(output, true);
        }
        FileOutputFormat.setOutputPath(job, output);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

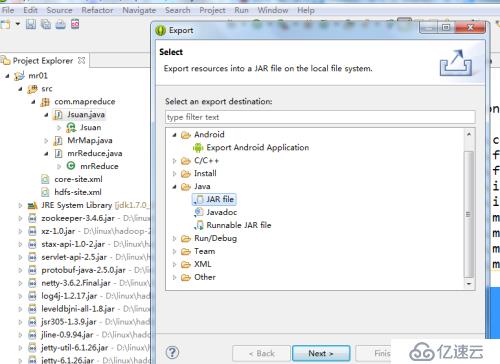

4) Export the jar

5) Upload the jar to a directory on the Linux machine via FTP

6) Run the jar

hadoop jar wc.jar com.mc.WordCount / /out

Note that the WordCount driver above hardcodes /word.txt as its input and /out as its output, so the two trailing arguments on this command line are not read.

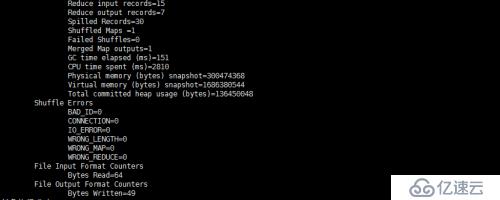

7) If both map and reduce reach 100%, the job has run successfully.

8) View the result

hadoop fs -tail /out/part-r-00000
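
If the counts need further processing, the result lines can be parsed back into a map. The sketch below assumes the job writes with Hadoop's default text output format, where each line holds the word, a tab character, and the count. The class name ResultLines and the sample lines are hypothetical and are not the actual output of this job.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: parses "word<TAB>count" lines such as those in part-r-00000.
class ResultLines {

    static Map<String, Integer> parse(List<String> lines) {
        // LinkedHashMap keeps the words in the order they appear in the file.
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue; // skip blank lines or lines without a tab separator
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Illustrative sample only, assumed to resemble the job's output format.
        List<String> sample = Arrays.asList("hadoop\t1", "hello\t2", "world\t1");
        System.out.println(parse(sample));
        // prints {hadoop=1, hello=2, world=1}
    }
}
```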
