
This document has four parts: installing and deploying Hadoop + HBase, basic HBase commands, removing a Hadoop+HBase node, and adding a Hadoop+HBase node.

1. Install and configure Hadoop 1.0.3 + HBase 0.92.1

Environment overview

| Hostname | Role |
| --- | --- |
| sht-sgmhadoopcm-01 (172.16.101.54) | NameNode, SecondaryNameNode, JobTracker, ZK, HMaster |
| sht-sgmhadoopdn-01 (172.16.101.58) | DataNode, TaskTracker, ZK, HRegionServer |
| sht-sgmhadoopdn-02 (172.16.101.59) | DataNode, TaskTracker, ZK, HRegionServer |
| sht-sgmhadoopdn-03 (172.16.101.60) | DataNode, TaskTracker, HRegionServer |
| sht-sgmhadoopdn-04 (172.16.101.66) | DataNode, TaskTracker, HRegionServer |

All steps run as the tnuser user. Passwordless SSH (mutual trust) must be set up between all nodes.

Every node needs JDK 1.6.0_12 installed, with its environment variables configured:

[tnuser@sht-sgmhadoopcm-01 ~]$ cat .bash_profile

export JAVA_HOME=/usr/java/jdk1.6.0_12

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export HADOOP_HOME=/usr/local/contentplatform/hadoop-1.0.3

export HBASE_HOME=/usr/local/contentplatform/hbase-0.92.1

export PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HBASE_HOME/bin

[tnuser@sht-sgmhadoopcm-01 ~]$ rsync -avz --progress ~/.bash_profile sht-sgmhadoopdn-01:~/

[tnuser@sht-sgmhadoopcm-01 ~]$ rsync -avz --progress ~/.bash_profile sht-sgmhadoopdn-02:~/

[tnuser@sht-sgmhadoopcm-01 ~]$ rsync -avz --progress ~/.bash_profile sht-sgmhadoopdn-03:~/

[tnuser@sht-sgmhadoopcm-01 ~]$ rsync -avz --progress ~/.bash_profile sht-sgmhadoopdn-04:~/
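The same rsync is repeated once per node here, and again later for the contentplatform directory and the regionservers, slaves and backup-masters files. A small bash loop can do this instead. This is a sketch rather than part of the original procedure: the function name `sync_to_hosts` and the `DRY_RUN` switch are hypothetical, and it assumes passwordless SSH is already working.

```bash
# sync_to_hosts SRC DEST HOST...  (hypothetical helper, not from the original setup)
# Runs "rsync -avz --progress SRC HOST:DEST" for every HOST in turn.
# With DRY_RUN=1 it only prints the commands it would run.
sync_to_hosts() {
  local src=$1 dest=$2 host
  shift 2
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo rsync -avz --progress "$src" "${host}:${dest}"
    else
      # Stop at the first host that fails so the error is not scrolled away.
      rsync -avz --progress "$src" "${host}:${dest}" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 sync_to_hosts /usr/local/contentplatform /usr/local/ sht-sgmhadoopdn-0{1..4}` prints the four directory-copy commands used later in this section; drop `DRY_RUN=1` to actually run them.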

Create the directories

[tnuser@sht-sgmhadoopcm-01 contentplatform]$ mkdir -p /usr/local/contentplatform/data/dfs/{name,data}

[tnuser@sht-sgmhadoopcm-01 contentplatform]$ mkdir -p /usr/local/contentplatform/temp

[tnuser@sht-sgmhadoopcm-01 contentplatform]$ mkdir -p /usr/local/contentplatform/logs/{hadoop,hbase}

Edit the Hadoop configuration files

[tnuser@sht-sgmhadoopcm-01 conf]$ vim hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.6.0_12

export HADOOP_HEAPSIZE=3072

export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

export HADOOP_LOG_DIR=/usr/local/contentplatform/logs/hadoop

[tnuser@sht-sgmhadoopcm-01 conf]$ cat core-site.xml

<configuration>

<property> <name>hadoop.tmp.dir</name> <value>/usr/local/contentplatform/temp</value> </property>

<property> <name>fs.default.name</name> <value>hdfs://sht-sgmhadoopcm-01:9000</value> </property>

<property> <name>hadoop.proxyuser.tnuser.hosts</name> <value>sht-sgmhadoopdn-01.telenav.cn</value> </property>

<property> <name>hadoop.proxyuser.tnuser.groups</name> <value>appuser</value> </property>

</configuration>

[tnuser@sht-sgmhadoopcm-01 conf]$ cat hdfs-site.xml

<configuration>

<property> <name>dfs.replication</name> <value>3</value> </property>

<property> <name>dfs.name.dir</name> <value>/usr/local/contentplatform/data/dfs/name</value> </property>

<property> <name>dfs.data.dir</name> <value>/usr/local/contentplatform/data/dfs/data</value> </property>

<property> <name>dfs.permissions</name> <value>false</value> </property>

<property> <name>dfs.support.append</name> <value>true</value> </property>

<property> <name>dfs.datanode.max.xcievers</name> <value>4096</value> </property>

<property> <name>dfs.datanode.dns.nameserver</name> <value>10.224.0.102</value> </property>

<property> <name>mapred.min.split.size</name> <value>100663296</value> </property>

<property> <name>dfs.datanode.socket.write.timeout</name> <value>3000000</value> </property>

<property> <name>dfs.socket.timeout</name> <value>3000000</value> </property>

<property> <name>dfs.http.address</name> <value>0.0.0.0:50070</value> </property>

</configuration>

[tnuser@sht-sgmhadoopcm-01 conf]$ cat mapred-site.xml

<configuration>

<property> <name>mapred.job.tracker</name> <value>sht-sgmhadoopcm-01:9001</value> </property>

<property> <name>mapred.system.dir</name> <value>/usr/local/contentplatform/data/mapred/system/</value> </property>

<property> <name>mapred.local.dir</name> <value>/usr/local/contentplatform/data/mapred/local/</value> </property>

<property> <name>mapred.tasktracker.map.tasks.maximum</name> <value>4</value> </property>

<property> <name>mapred.tasktracker.reduce.tasks.maximum</name> <value>1</value> </property>

<property> <name>io.sort.mb</name> <value>200</value> <final>true</final> </property> <!-- plain integer, in MB -->

<property> <name>io.sort.factor</name> <value>20</value> <final>true</final> </property>

<property> <name>mapred.task.timeout</name> <value>7200000</value> </property>

<property> <name>mapred.child.java.opts</name> <value>-Xmx2048m</value> </property>

</configuration>
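Hadoop loads each *-site.xml in order, and when the same property name is defined twice the later definition wins, unless an earlier one was marked `<final>`. A duplicated name is easy to miss when configurations are edited by hand. The hypothetical `dup_props` helper below is a rough check that lists property names defined more than once in one file. It is not a real XML parser: it assumes one `<name>` per property and only skips `<!-- ... -->` comments written in the simple style used in this document.

```bash
# dup_props FILE  (hypothetical helper)
# Prints each <name> that appears in more than one <property> of a *-site.xml.
# Splitting on "<" puts every tag on its own line, e.g. "name>dfs.replication".
dup_props() {
  tr '<' '\n' < "$1" | awk '
    /^!--/                  { in_comment = 1 }               # start of a comment
    !in_comment && /^name>/ { print substr($0, 6) }          # text after "name>"
    /-->/                   { in_comment = 0 }               # end of the comment
  ' | sort | uniq -d
}
```

Running `dup_props $HADOOP_HOME/conf/hdfs-site.xml` prints nothing when every property is defined only once.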

Edit the HBase configuration files

[tnuser@sht-sgmhadoopcm-01 conf]$ cat hbase-env.sh

export JAVA_HOME=/usr/java/jdk1.6.0_12

export HBASE_HEAPSIZE=5120

export HBASE_LOG_DIR=/usr/local/contentplatform/logs/hbase

export HBASE_OPTS="-XX:+UseConcMarkSweepGC" # overwritten by the next HBASE_OPTS line, so this setting has no effect

export HBASE_OPTS="-server -Djava.net.preferIPv4Stack=true -XX:+UseParallelGC -XX:ParallelGCThreads=4 -XX:+AggressiveHeap -XX:+HeapDumpOnOutOfMemoryError"

export HBASE_MANAGES_ZK=true # true means HBase runs its bundled ZooKeeper, so no separate ZooKeeper installation is needed

[tnuser@sht-sgmhadoopcm-01 conf]$ cat regionservers

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

sht-sgmhadoopdn-04

[tnuser@sht-sgmhadoopcm-01 conf]$ cat hbase-site.xml

<configuration>

<property> <name>hbase.zookeeper.quorum</name> <value>sht-sgmhadoopcm-01,sht-sgmhadoopdn-01,sht-sgmhadoopdn-02</value> </property>

<property> <name>hbase.zookeeper.dns.nameserver</name> <value>10.224.0.102</value> </property>

<property> <name>hbase.regionserver.dns.nameserver</name> <value>10.224.0.102</value> </property>

<property> <name>hbase.zookeeper.property.dataDir</name> <value>/usr/local/contentplatform/data/zookeeper</value> </property>

<property> <name>hbase.rootdir</name> <value>hdfs://sht-sgmhadoopcm-01:9000/hbase</value> </property>

<property> <name>hbase.cluster.distributed</name> <value>true</value> <description>The mode the cluster will be in. Possible values are false: standalone and pseudo-distributed setups with managed Zookeeper true: fully-distributed with unmanaged Zookeeper Quorum (see hbase-env.sh) </description> </property>

<property> <name>hbase.hregion.max.filesize</name> <value>536870912</value> </property>

<property> <name>hbase.regionserver.global.memstore.upperLimit</name> <value>0.2</value> </property>

<property> <name>hbase.regionserver.global.memstore.lowerLimit</name> <value>0.1</value> </property>

<property> <name>hfile.block.cache.size</name> <value>0.5</value> </property>

<property> <name>dfs.support.append</name> <value>true</value> </property>

<property> <name>hbase.regionserver.lease.period</name> <value>1800000</value> </property>

<property> <name>hbase.rpc.timeout</name> <value>1800000</value> </property>

<property> <name>hbase.hstore.blockingStoreFiles</name> <value>40</value> </property>

<property> <name>zookeeper.session.timeout</name> <value>900000</value> </property>

<property> <name>hbase.hregion.memstore.flush.size</name> <value>134217728</value> </property>

<property> <name>hbase.hstore.compaction.max</name> <value>30</value> </property>

<property> <name>hbase.regionserver.handler.count</name> <value>10</value> </property>

</configuration>
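To confirm which value a node actually has on disk, for example after copying the directory to the other nodes, a single property can be read out of hbase-site.xml or any Hadoop *-site.xml. The `get_prop` function below is a hypothetical sketch using a tag-splitting trick; it prints every matching value in file order, so with duplicates the last line is the one Hadoop uses. A proper XML tool would be more robust.

```bash
# get_prop FILE NAME  (hypothetical helper)
# Prints the <value> of every non-commented <property> whose <name> equals NAME.
get_prop() {
  tr '<' '\n' < "$1" | awk -v want="$2" '
    /^!--/                   { in_comment = 1 }
    !in_comment && /^name>/  { cur = substr($0, 6) }                  # remember the current name
    !in_comment && /^value>/ { if (cur == want) print substr($0, 7) } # text after "value>"
    /-->/                    { in_comment = 0 }
  '
}
```

On this cluster, `get_prop $HBASE_HOME/conf/hbase-site.xml hbase.rootdir` should print `hdfs://sht-sgmhadoopcm-01:9000/hbase` on every node.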

Copy the whole directory to the other nodes

[tnuser@sht-sgmhadoopcm-01 contentplatform]$ ll /usr/local/contentplatform/

total 103572

drwxr-xr-x 4 tnuser appuser 34 Apr 6 21:41 data

drwxr-xr-x 14 tnuser appuser 4096 May 9 2012 hadoop-1.0.3

-rw-r--r-- 1 tnuser appuser 62428860 Apr 5 14:59 hadoop-1.0.3.tar.gz

drwxr-xr-x 10 tnuser appuser 255 Apr 6 21:36 hbase-0.92.1

-rw-r--r-- 1 tnuser appuser 43621631 Apr 5 15:00 hbase-0.92.1.tar.gz

drwxr-xr-x 4 tnuser appuser 33 Apr 6 22:43 logs

drwxr-xr-x 3 tnuser appuser 17 Apr 6 20:44 temp

[root@sht-sgmhadoopcm-01 local]# rsync -avz --progress /usr/local/contentplatform sht-sgmhadoopdn-01:/usr/local/

[root@sht-sgmhadoopcm-01 local]# rsync -avz --progress /usr/local/contentplatform sht-sgmhadoopdn-02:/usr/local/

[root@sht-sgmhadoopcm-01 local]# rsync -avz --progress /usr/local/contentplatform sht-sgmhadoopdn-03:/usr/local/

[root@sht-sgmhadoopcm-01 local]# rsync -avz --progress /usr/local/contentplatform sht-sgmhadoopdn-04:/usr/local/

Start Hadoop

[tnuser@sht-sgmhadoopcm-01 data]$ hadoop namenode -format

[tnuser@sht-sgmhadoopcm-01 bin]$ start-all.sh

[tnuser@sht-sgmhadoopcm-01 conf]$ jps

6008 NameNode

6392 Jps

6191 SecondaryNameNode

6279 JobTracker

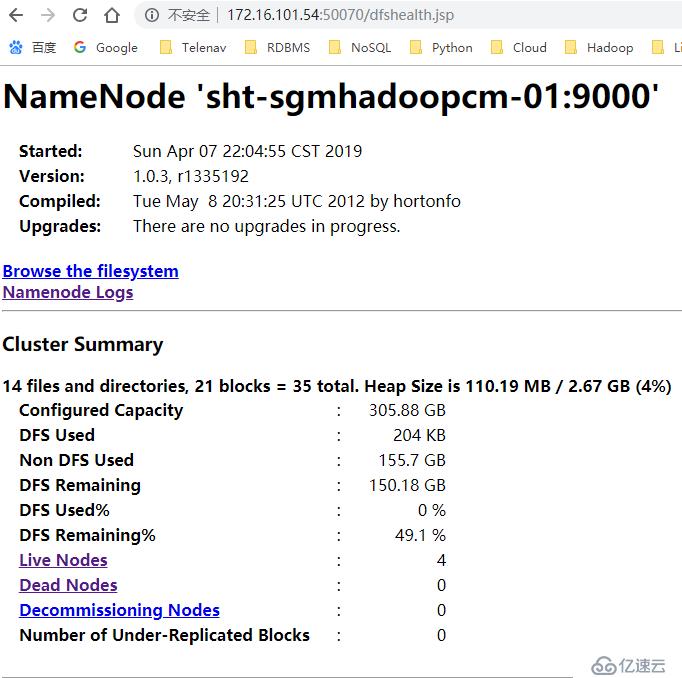

Browse the HDFS file system:

http://172.16.101.54:50070

http://172.16.101.59:50075/browseDirectory.jsp?namenodeInfoPort=50070&dir=/

Start HBase

[tnuser@sht-sgmhadoopcm-01 ~]$ start-hbase.sh

[tnuser@sht-sgmhadoopcm-01 ~]$ jps

3792 HQuorumPeer

4103 Jps

3876 HMaster

3142 NameNode

3323 SecondaryNameNode

3408 JobTracker

HBase Master web UI: http://172.16.101.54:60010
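Instead of reading the jps listings by eye, the check can be scripted. The hypothetical `missing_daemons` function below reads `jps` output on stdin and prints each expected daemon that is not running; the daemon names in the usage line are taken from the listings above.

```bash
# missing_daemons NAME...  (hypothetical helper)
# Reads jps output ("<pid> <MainClass>" per line) on stdin and prints every
# NAME that does not appear as a main class.
missing_daemons() {
  local listing name
  listing=$(cat)
  for name in "$@"; do
    if ! printf '%s\n' "$listing" | awk -v d="$name" '$2 == d { found = 1 } END { exit(found ? 0 : 1) }'; then
      echo "$name"
    fi
  done
}
```

On sht-sgmhadoopcm-01, `jps | missing_daemons NameNode SecondaryNameNode JobTracker HMaster HQuorumPeer` should print nothing once both start-all.sh and start-hbase.sh have succeeded.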

2. Basic HBase commands

Check the HBase status

hbase(main):001:0> status

4 servers, 0 dead, 0.7500 average load
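The `status` summary can also be checked from a script by piping commands into `hbase shell`, the same way this document later runs `echo "status" | hbase shell`. The `dead_servers` function below is a hypothetical sketch that extracts the dead-server count; it assumes the one-line summary format shown above.

```bash
# dead_servers  (hypothetical helper)
# Reads HBase shell output on stdin and prints N from a summary line such as
# "4 servers, N dead, 0.7500 average load"; all other lines are ignored.
dead_servers() {
  sed -n 's/^[0-9][0-9]* servers*, \([0-9][0-9]*\) dead, .*/\1/p'
}
```

For example: `[ "$(echo status | hbase shell 2>/dev/null | dead_servers)" = 0 ] || echo "some RegionServers are dead"`.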

Create table t1

hbase(main):008:0> create 't1','info'

List all tables

hbase(main):009:0> list

TABLE

t1

View the table's files in HDFS:

[tnuser@sht-sgmhadoopcm-01 hbase-0.92.1]$ hadoop dfs -ls /hbase/

Found 7 items

drwxr-xr-x - tnuser supergroup 0 2019-04-06 22:41 /hbase/-ROOT-

drwxr-xr-x - tnuser supergroup 0 2019-04-06 22:41 /hbase/.META.

drwxr-xr-x - tnuser supergroup 0 2019-04-06 23:14 /hbase/.logs

drwxr-xr-x - tnuser supergroup 0 2019-04-06 22:41 /hbase/.oldlogs

-rw-r--r-- 3 tnuser supergroup 38 2019-04-06 22:41 /hbase/hbase.id

-rw-r--r-- 3 tnuser supergroup 3 2019-04-06 22:41 /hbase/hbase.version

drwxr-xr-x - tnuser supergroup 0 2019-04-07 16:53 /hbase/t1

Describe the table

hbase(main):017:0> describe 't1'

DESCRIPTION ENABLED

{NAME => 't1', FAMILIES => [{NAME => 'info', BLOOMFILTER => 'NONE', REPLICATION_SCOPE => '0', VERSIONS => '3', COMPRESSION => 'NONE', MIN_VERSIONS => '0', TTL => '2147483647', BLOCKSIZE => '65536', IN_MEMORY => 'false', BLOCKCACHE => 'true'}]} true

Check whether a table exists

hbase(main):018:0> exists 't1'

Table t1 does exist

Check whether a table is disabled or enabled, and change its state

hbase(main):019:0> is_disabled 't1'

false

To disable the table: disable 't1'

hbase(main):020:0> is_enabled 't1'

true

To enable the table: enable 't1'

Insert data: put <table>,<rowkey>,<family:column>,<value>

hbase(main):010:0> put 't1','row1','info:name','xiaoming'

hbase(main):011:0> put 't1','row2','info:age','18'

hbase(main):012:0> put 't1','row3','info:sex','male'

Query a row: get <table>,<rowkey>,[<family:column>, ...]

hbase(main):014:0> get 't1','row1'

COLUMN CELL

info:name timestamp=1554621994538, value=xiaoming

hbase(main):015:0> get 't1','row2','info:age'

COLUMN CELL

info:age timestamp=1554623754957, value=18

hbase(main):017:0> get 't1','row2',{COLUMN=> 'info:age'}

COLUMN CELL

info:age timestamp=1554623754957, value=18

Scan the table, optionally with a row limit or a row range:

hbase(main):026:0* scan 't1'

ROW COLUMN+CELL

row1 column=info:name, timestamp=1554621994538, value=xiaoming

row2 column=info:age, timestamp=1554625223482, value=18

row3 column=info:sex, timestamp=1554625229782, value=male

hbase(main):027:0> scan 't1',{LIMIT => 2}

ROW COLUMN+CELL

row1 column=info:name, timestamp=1554621994538, value=xiaoming

row2 column=info:age, timestamp=1554625223482, value=18

hbase(main):034:0> scan 't1',{STARTROW => 'row2'}

ROW COLUMN+CELL

row2 column=info:age, timestamp=1554625223482, value=18

row3 column=info:sex, timestamp=1554625229782, value=male

hbase(main):038:0> scan 't1',{STARTROW => 'row2',ENDROW => 'row3'}

ROW COLUMN+CELL

row2 column=info:age, timestamp=1554625223482, value=18
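The STARTROW/ENDROW scan above returns row2 but not row3: the start row is inclusive and the end row exclusive. HBase also orders row keys byte by byte, so a key such as `row10` would sort between `row1` and `row2`. The shell function below is only an illustration that emulates this selection on a list of keys to make the ordering visible; it does not query HBase.

```bash
# rows_in_range START END  (illustration only)
# Reads row keys on stdin, sorts them by raw bytes, and prints every key k with
# START <= k < END, i.e. what a scan from START up to END would return.
# An empty END means "until the last row".
rows_in_range() {
  LC_ALL=C sort | LC_ALL=C awk -v s="$1" -v e="$2" '
    # Appending "" forces string comparison, even for numeric-looking keys such as "10".
    ($0 "") >= (s "") && (e == "" || ($0 "") < (e ""))
  '
}
```

`printf 'row1\nrow2\nrow3\nrow10\n' | rows_in_range row1 row2` prints `row1` and `row10`, which is why fixed-width keys (for example `row01`, `row02`) are often used when range scans matter.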

Count the rows in a table

hbase(main):042:0> count 't1'

3 row(s) in 0.0200 seconds

Delete a specific column from a row

hbase(main):013:0> delete 't1','row3','info:sex'

Delete an entire row

hbase(main):047:0> deleteall 't1','row2'

Truncate (empty) a table

hbase(main):049:0> truncate 't1'

Truncating 't1' table (it may take a while):

- Disabling table...

- Dropping table...

- Creating table...

0 row(s) in 4.8050 seconds

Drop a table (the table must be disabled first)

hbase(main):058:0> disable 't1'

hbase(main):059:0> drop 't1'

Create HBase test data

create 't1','info'

put 't1','row1','info:name','xiaoming'

put 't1','row2','info:age','18'

put 't1','row3','info:sex','male'

create 'emp','personal','professional'

put 'emp','1','personal:name','raju'

put 'emp','1','personal:city','hyderabad'

put 'emp','1','professional:designation','manager'

put 'emp','1','professional:salary','50000'

put 'emp','2','personal:name','ravi'

put 'emp','2','personal:city','chennai'

put 'emp','2','professional:designation','sr.engineer'

put 'emp','2','professional:salary','30000'

put 'emp','3','personal:name','rajesh'

put 'emp','3','personal:city','delhi'

put 'emp','3','professional:designation','jr.engineer'

put 'emp','3','professional:salary','25000'

hbase(main):040:0> scan 't1'

ROW COLUMN+CELL

row1 column=info:name, timestamp=1554634306493, value=xiaoming

row2 column=info:age, timestamp=1554634306540, value=18

row3 column=info:sex, timestamp=1554634307409, value=male

3 row(s) in 0.0290 seconds

hbase(main):041:0> scan 'emp'

ROW COLUMN+CELL

1 column=personal:city, timestamp=1554634236024, value=hyderabad

1 column=personal:name, timestamp=1554634235959, value=raju

1 column=professional:designation, timestamp=1554634236063, value=manager

1 column=professional:salary, timestamp=1554634237419, value=50000

2 column=personal:city, timestamp=1554634241879, value=chennai

2 column=personal:name, timestamp=1554634241782, value=ravi

2 column=professional:designation, timestamp=1554634241920, value=sr.engineer

2 column=professional:salary, timestamp=1554634242923, value=30000

3 column=personal:city, timestamp=1554634246842, value=delhi

3 column=personal:name, timestamp=1554634246784, value=rajesh

3 column=professional:designation, timestamp=1554634246879, value=jr.engineer

3 column=professional:salary, timestamp=1554634247692, value=25000

3 row(s) in 0.0330 seconds

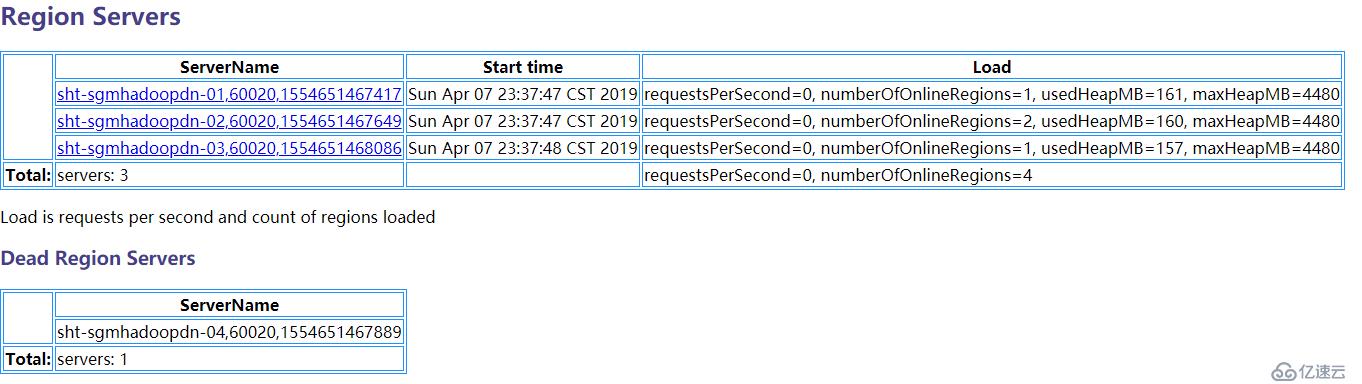
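Typing one `put` per cell gets tedious even for the small `emp` table. Because `hbase shell` reads commands from stdin (this document later runs `echo "status" | hbase shell`), the statements can be generated from a simple text file instead. The `csv_to_puts` helper below is hypothetical and assumes lines of the form `rowkey,family:qualifier,value` with no commas or single quotes inside a field.

```bash
# csv_to_puts TABLE  (hypothetical helper)
# Reads "rowkey,family:qualifier,value" lines on stdin and prints one HBase
# shell put per line, e.g. put 'emp','1','personal:name','raju'.
# Lines that do not have exactly three fields are skipped.
csv_to_puts() {
  awk -F',' -v t="$1" -v q="'" 'NF == 3 { print "put " q t q "," q $1 q "," q $2 q "," q $3 q }'
}
```

With a file such as `emp.csv` (a hypothetical name) holding lines like `1,personal:name,raju`, run `csv_to_puts emp < emp.csv | hbase shell`.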

3. Remove a Hadoop+HBase node

First remove the HBase RegionServer on sht-sgmhadoopdn-04

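The graceful_stop.sh script automatically turns off the balancer and moves the node's regions to the other RegionServers. With a large amount of data this step can take a long time.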

[tnuser@sht-sgmhadoopcm-01 bin]$ graceful_stop.sh sht-sgmhadoopdn-04

Disabling balancer!

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.92.1, r1298924, Fri Mar 9 16:58:34 UTC 2012

balance_switch false

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/contentplatform/hbase-0.92.1/lib/slf4j-log4j12-1.5.8.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/contentplatform/hadoop-1.0.3/lib/slf4j-log4j12-1.4.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

true

0 row(s) in 0.7580 seconds

Unloading sht-sgmhadoopdn-04 region(s)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/contentplatform/hbase-0.92.1/lib/slf4j-log4j12-1.5.8.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/contentplatform/hadoop-1.0.3/lib/slf4j-log4j12-1.4.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.3-1240972, built on 02/06/2012 10:48 GMT

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:host.name=sht-sgmhadoopcm-01

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.version=1.6.0_12

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Sun Microsystems Inc.

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/java/jdk1.6.0_12/jre

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/contentplatform/hadoop-1.0.3/libexec/../lib/native/Linux-amd64-64:/usr/local/contentplatform/hbase-0.92.1/lib/native/Linux-amd64-64

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:os.version=3.10.0-514.el7.x86_64

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:user.name=tnuser

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/tnuser

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Client environment:user.dir=/usr/local/contentplatform/hbase-0.92.1/bin

19/04/07 20:11:14 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=sht-sgmhadoopdn-01:2181,sht-sgmhadoopcm-01:2181,sht-sgmhadoopdn-02:2181 sessionTimeout=900000 watcher=hconnection

19/04/07 20:11:14 INFO zookeeper.ClientCnxn: Opening socket connection to server /172.16.101.58:2181

19/04/07 20:11:14 INFO zookeeper.RecoverableZooKeeper: The identifier of this process is 24569@sht-sgmhadoopcm-01.telenav.cn

19/04/07 20:11:14 WARN client.ZooKeeperSaslClient: SecurityException: java.lang.SecurityException: Unable to locate a login configuration occurred when trying to find JAAS configuration.

19/04/07 20:11:14 INFO client.ZooKeeperSaslClient: Client will not SASL-authenticate because the default JAAS configuration section 'Client' could not be found. If you are not using SASL, you may ignore this. On the other hand, if you expected SASL to work, please fix your JAAS configuration.

19/04/07 20:11:14 INFO zookeeper.ClientCnxn: Socket connection established to sht-sgmhadoopdn-01/172.16.101.58:2181, initiating session

19/04/07 20:11:14 INFO zookeeper.ClientCnxn: Session establishment complete on server sht-sgmhadoopdn-01/172.16.101.58:2181, sessionid = 0x169f7b052050003, negotiated timeout = 900000

19/04/07 20:11:15 INFO region_mover: Moving 2 region(s) from sht-sgmhadoopdn-04,60020,1554638724252 during this cycle

19/04/07 20:11:15 INFO region_mover: Moving region 1028785192 (0 of 2) to server=sht-sgmhadoopdn-01,60020,1554638723581

19/04/07 20:11:16 INFO region_mover: Moving region d3a10ae012afde8e1e401a2e400accc8 (1 of 2) to server=sht-sgmhadoopdn-01,60020,1554638723581

19/04/07 20:11:17 INFO region_mover: Wrote list of moved regions to /tmp/sht-sgmhadoopdn-04

Unloaded sht-sgmhadoopdn-04 region(s)

sht-sgmhadoopdn-04: ********************************************************************

sht-sgmhadoopdn-04: * *

sht-sgmhadoopdn-04: * This system is for the use of authorized users only. Usage of *

sht-sgmhadoopdn-04: * this system may be monitored and recorded by system personnel. *

sht-sgmhadoopdn-04: * *

sht-sgmhadoopdn-04: * Anyone using this system expressly consents to such monitoring *

sht-sgmhadoopdn-04: * and they are advised that if such monitoring reveals possible *

sht-sgmhadoopdn-04: * evidence of criminal activity, system personnel may provide the *

sht-sgmhadoopdn-04: * evidence from such monitoring to law enforcement officials. *

sht-sgmhadoopdn-04: * *

sht-sgmhadoopdn-04: ********************************************************************

sht-sgmhadoopdn-04: stopping regionserver....

Then turn the balancer back on:

[tnuser@sht-sgmhadoopcm-01 hbase]$ echo "balance_switch true" | hbase shell

Check the HBase node status:

[tnuser@sht-sgmhadoopcm-01 hbase]$ echo "status" | hbase shell

3 servers, 1 dead, 1.3333 average load

The DataNode and TaskTracker are still running on sht-sgmhadoopdn-04:

[tnuser@sht-sgmhadoopdn-04 hbase]$ jps

23940 Jps

23375 DataNode

23487 TaskTracker

http://172.16.101.54:60010

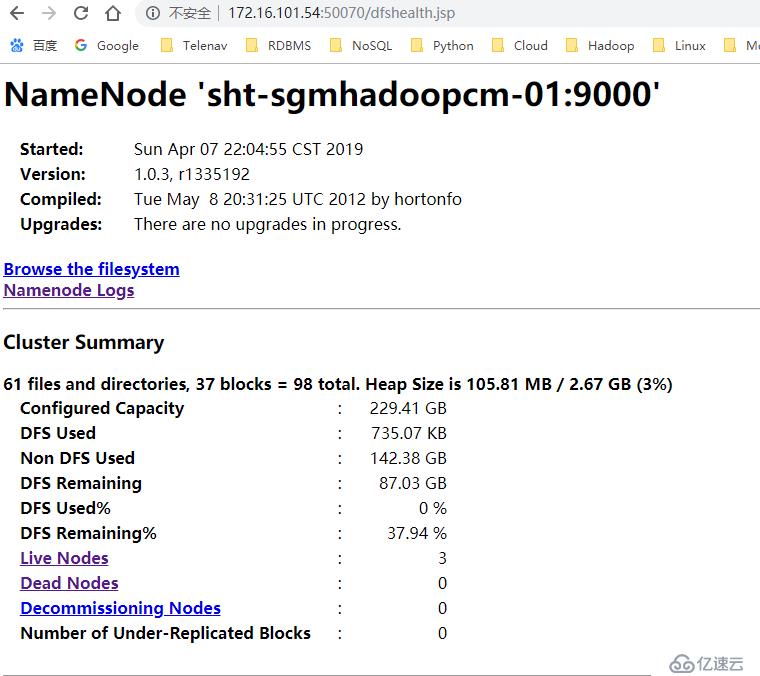

Next, remove the DataNode and TaskTracker on sht-sgmhadoopdn-04

[tnuser@sht-sgmhadoopcm-01 hadoop-1.0.3]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/include

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

[tnuser@sht-sgmhadoopcm-01 hadoop-1.0.3]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/excludes

sht-sgmhadoopdn-04

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/hdfs-site.xml

<property> <name>dfs.hosts</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/include</value> <final>true</final> </property>

<property> <name>dfs.hosts.exclude</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/excludes</value> <final>true</final> </property>

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/mapred-site.xml

<property> <name>mapred.hosts</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/include</value> <final>true</final> </property>

<property> <name>mapred.hosts.exclude</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/excludes</value> <final>true</final> </property>

Reload the host lists. The NameNode then copies the blocks held by sht-sgmhadoopdn-04 to the other nodes to restore the replication factor, but it does not delete the original data on sht-sgmhadoopdn-04. With a large amount of data this can take a long time.

-refreshNodes: Re-read the hosts and exclude files to update the set of Datanodes that are allowed to connect to the Namenode and those that should be decommissioned or recommissioned.

[tnuser@sht-sgmhadoopcm-01 hadoop-1.0.3]$ hadoop dfsadmin -refreshNodes

[tnuser@sht-sgmhadoopcm-01 hadoop-1.0.3]$ hadoop mradmin -refreshNodes

If the DataNode and TaskTracker processes on sht-sgmhadoopdn-04 are still alive, stop them with the following commands (normally the previous step has already shut them down):

[tnuser@sht-sgmhadoopdn-04 hbase]$ hadoop-daemon.sh stop datanode

[tnuser@sht-sgmhadoopdn-04 hbase]$ hadoop-daemon.sh stop tasktracker

[tnuser@sht-sgmhadoopcm-01 hadoop-1.0.3]$ hadoop dfsadmin -report

Warning: $HADOOP_HOME is deprecated.

Configured Capacity: 246328578048 (229.41 GB)

Present Capacity: 93446351917 (87.03 GB)

DFS Remaining: 93445607424 (87.03 GB)

DFS Used: 744493 (727.04 KB)

DFS Used%: 0%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 3 (4 total, 1 dead)

Name: 172.16.101.58:50010

Decommission Status : Normal

Configured Capacity: 82109526016 (76.47 GB)

DFS Used: 259087 (253.01 KB)

Non DFS Used: 57951808497 (53.97 GB)

DFS Remaining: 24157458432(22.5 GB)

DFS Used%: 0%

DFS Remaining%: 29.42%

Last contact: Sun Apr 07 20:45:42 CST 2019

Name: 172.16.101.60:50010

Decommission Status : Normal

Configured Capacity: 82109526016 (76.47 GB)

DFS Used: 246799 (241.01 KB)

Non DFS Used: 45172382705 (42.07 GB)

DFS Remaining: 36936896512(34.4 GB)

DFS Used%: 0%

DFS Remaining%: 44.98%

Last contact: Sun Apr 07 20:45:43 CST 2019

Name: 172.16.101.59:50010

Decommission Status : Normal

Configured Capacity: 82109526016 (76.47 GB)

DFS Used: 238607 (233.01 KB)

Non DFS Used: 49758034929 (46.34 GB)

DFS Remaining: 32351252480(30.13 GB)

DFS Used%: 0%

DFS Remaining%: 39.4%

Last contact: Sun Apr 07 20:45:42 CST 2019

Name: 172.16.101.66:50010

Decommission Status : Decommissioned

Configured Capacity: 0 (0 KB)

DFS Used: 0 (0 KB)

Non DFS Used: 0 (0 KB)

DFS Remaining: 0(0 KB)

DFS Used%: 100%

DFS Remaining%: 0%

Last contact: Thu Jan 01 08:00:00 CST 1970

At this point no Hadoop processes remain on sht-sgmhadoopdn-04:

[tnuser@sht-sgmhadoopdn-04 hbase]$ jps

23973 Jps

The HBase data in HDFS is still intact:

[tnuser@sht-sgmhadoopdn-04 hbase]$ hadoop dfs -ls /hbase

Warning: $HADOOP_HOME is deprecated.

Found 8 items

drwxr-xr-x - tnuser supergroup 0 2019-04-07 17:46 /hbase/-ROOT-

drwxr-xr-x - tnuser supergroup 0 2019-04-07 18:23 /hbase/.META.

drwxr-xr-x - tnuser supergroup 0 2019-04-07 20:11 /hbase/.logs

drwxr-xr-x - tnuser supergroup 0 2019-04-07 20:45 /hbase/.oldlogs

drwxr-xr-x - tnuser supergroup 0 2019-04-07 18:50 /hbase/emp

-rw-r--r-- 3 tnuser supergroup 38 2019-04-06 22:41 /hbase/hbase.id

-rw-r--r-- 3 tnuser supergroup 3 2019-04-06 22:41 /hbase/hbase.version

drwxr-xr-x - tnuser supergroup 0 2019-04-07 18:51 /hbase/t1

Rebalance the data blocks across the remaining DataNodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ start-balancer.sh -threshold 10
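Rather than rereading the whole report while the decommission runs, the per-node status can be pulled out of `hadoop dfsadmin -report`. The `decom_status` function below is a hypothetical sketch; it assumes the report prints `Name:` and `Decommission Status :` on separate lines, as in the output above.

```bash
# decom_status  (hypothetical helper)
# Reads "hadoop dfsadmin -report" output on stdin and prints
# "<host:port> <decommission status>" for every DataNode section.
decom_status() {
  awk '
    /^Name: /                { node = $2 }
    /^Decommission Status :/ { sub(/^Decommission Status : */, ""); print node, $0 }
  '
}
```

A simple wait loop before moving on could then be: `until hadoop dfsadmin -report 2>/dev/null | decom_status | grep -qx '172.16.101.66:50010 Decommissioned'; do sleep 60; done`.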

Finally, update the configuration files

Remove the sht-sgmhadoopdn-04 line from the regionservers file and sync it to the other nodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hbase-0.92.1/conf/regionservers

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-01:/usr/local/contentplatform/hbase-0.92.1/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-02:/usr/local/contentplatform/hbase-0.92.1/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-03:/usr/local/contentplatform/hbase-0.92.1/conf/

Remove the sht-sgmhadoopdn-04 line from the slaves file and sync it to the other nodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/slaves

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-01:/usr/local/contentplatform/hadoop-1.0.3/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-02:/usr/local/contentplatform/hadoop-1.0.3/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-03:/usr/local/contentplatform/hadoop-1.0.3/conf/

Delete the excludes file and comment out the include/exclude settings:

[tnuser@sht-sgmhadoopcm-01 conf]$ rm -rf /usr/local/contentplatform/hadoop-1.0.3/conf/excludes

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/hdfs-site.xml

<!-- property> <name>dfs.hosts</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/include</value> </property -->

<!-- property> <name>dfs.hosts.exclude</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/excludes</value> </property -->

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/mapred-site.xml

<!-- property> <name>mapred.hosts</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/include</value> </property -->

<!-- property> <name>mapred.hosts.exclude</name> <value>/usr/local/contentplatform/hadoop-1.0.3/conf/excludes</value> </property -->
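The regionservers and slaves edits above are the same one-line change applied to two files. A hypothetical helper that drops a host from such a one-host-per-line file, so the change can be scripted before the rsync step, might look like this. It matches whole lines exactly, so an entry with trailing spaces would not be removed.

```bash
# remove_host FILE HOST  (hypothetical helper)
# Deletes every line of FILE that is exactly HOST (e.g. conf/slaves, conf/regionservers).
# The file is rewritten in place with cat, so its owner and permissions stay the same.
remove_host() {
  local file=$1 host=$2 tmp rc
  [ -f "$file" ] || return 1
  tmp=$(mktemp) || return 1
  # grep -v exits 1 when no lines are left, which is fine here; 2 means a real error.
  grep -vxF -- "$host" "$file" > "$tmp" && rc=0 || rc=$?
  if [ "$rc" -gt 1 ]; then
    rm -f "$tmp"
    return 1
  fi
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example: `remove_host $HBASE_HOME/conf/regionservers sht-sgmhadoopdn-04` followed by `remove_host $HADOOP_HOME/conf/slaves sht-sgmhadoopdn-04`.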

Restart Hadoop and HBase

[tnuser@sht-sgmhadoopcm-01 conf]$ stop-hbase.sh

[tnuser@sht-sgmhadoopcm-01 hbase]$ stop-all.sh

[tnuser@sht-sgmhadoopcm-01 hbase]$ start-all.sh

[tnuser@sht-sgmhadoopcm-01 hbase]$ start-hbase.sh

Check the data:

hbase(main):040:0> scan 't1'

ROW COLUMN+CELL

row1 column=info:name, timestamp=1554634306493, value=xiaoming

row2 column=info:age, timestamp=1554634306540, value=18

row3 column=info:sex, timestamp=1554634307409, value=male

3 row(s) in 0.0290 seconds

hbase(main):041:0> scan 'emp'

ROW COLUMN+CELL

1 column=personal:city, timestamp=1554634236024, value=hyderabad

1 column=personal:name, timestamp=1554634235959, value=raju

1 column=professional:designation, timestamp=1554634236063, value=manager

1 column=professional:salary, timestamp=1554634237419, value=50000

2 column=personal:city, timestamp=1554634241879, value=chennai

2 column=personal:name, timestamp=1554634241782, value=ravi

2 column=professional:designation, timestamp=1554634241920, value=sr.engineer

2 column=professional:salary, timestamp=1554634242923, value=30000

3 column=personal:city, timestamp=1554634246842, value=delhi

3 column=personal:name, timestamp=1554634246784, value=rajesh

3 column=professional:designation, timestamp=1554634246879, value=jr.engineer

3 column=professional:salary, timestamp=1554634247692, value=25000

3 row(s) in 0.0330 seconds

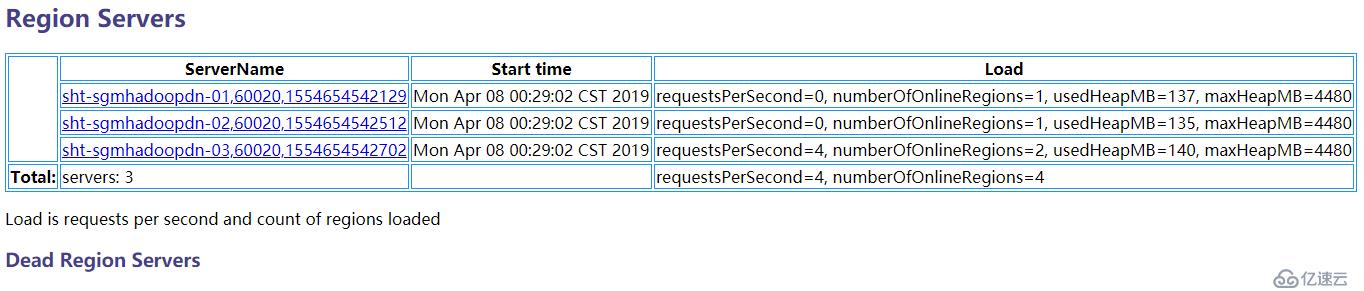

4. Add a Hadoop+HBase node

First add sht-sgmhadoopdn-04 back as a Hadoop node

Prerequisites:

Java environment, passwordless SSH (mutual trust), and the /etc/hosts file

Add sht-sgmhadoopdn-04 back to the slaves file and sync it to the other nodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hadoop-1.0.3/conf/slaves

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

sht-sgmhadoopdn-04

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-01:/usr/local/contentplatform/hadoop-1.0.3/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-02:/usr/local/contentplatform/hadoop-1.0.3/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hadoop-1.0.3/conf/slaves sht-sgmhadoopdn-03:/usr/local/contentplatform/hadoop-1.0.3/conf/

Add sht-sgmhadoopdn-04 back to the regionservers file and sync it to the other nodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hbase-0.92.1/conf/regionservers

sht-sgmhadoopdn-01

sht-sgmhadoopdn-02

sht-sgmhadoopdn-03

sht-sgmhadoopdn-04

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-01:/usr/local/contentplatform/hbase-0.92.1/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-02:/usr/local/contentplatform/hbase-0.92.1/conf/

[tnuser@sht-sgmhadoopcm-01 conf]$ rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/regionservers sht-sgmhadoopdn-03:/usr/local/contentplatform/hbase-0.92.1/conf/

On sht-sgmhadoopdn-04, delete the existing data files:

rm -rf /usr/local/contentplatform/data/dfs/name/*

rm -rf /usr/local/contentplatform/data/dfs/data/*

rm -rf /usr/local/contentplatform/data/mapred/local/*

rm -rf /usr/local/contentplatform/data/zookeeper/*

rm -rf /usr/local/contentplatform/logs/hadoop/*

rm -rf /usr/local/contentplatform/logs/hbase/*

Start the DataNode and TaskTracker on sht-sgmhadoopdn-04:

[tnuser@sht-sgmhadoopdn-04 conf]$ hadoop-daemon.sh start datanode

[tnuser@sht-sgmhadoopdn-04 conf]$ hadoop-daemon.sh start tasktracker

Check the live nodes:

[tnuser@sht-sgmhadoopcm-01 contentplatform]$ hadoop dfsadmin -report

http://172.16.101.54:50070

Run the balancer on the NameNode:

[tnuser@sht-sgmhadoopcm-01 conf]$ start-balancer.sh -threshold 10
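Adding the node back is the reverse edit of the slaves and regionservers files. A hypothetical `add_host` helper that appends a host only if it is not listed yet, and so is safe to run twice, could look like this:

```bash
# add_host FILE HOST  (hypothetical helper)
# Appends HOST as a new line unless FILE already has a line that is exactly HOST.
add_host() {
  local file=$1 host=$2
  if grep -qxF -- "$host" "$file" 2>/dev/null; then
    return 0   # already listed, nothing to do
  fi
  # If the last line lacks a trailing newline, add one so HOST starts a new line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  printf '%s\n' "$host" >> "$file"
}
```

For example: `add_host $HADOOP_HOME/conf/slaves sht-sgmhadoopdn-04` and `add_host $HBASE_HOME/conf/regionservers sht-sgmhadoopdn-04`, then sync both files as shown above.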

Then add sht-sgmhadoopdn-04 back as an HBase node

Start the RegionServer on sht-sgmhadoopdn-04:

[tnuser@sht-sgmhadoopdn-04 conf]$ hbase-daemon.sh start regionserver

Check the HBase node status:

http://172.16.101.54:60010

Add a backup master

Create the backup-masters file and sync it to the other nodes:

[tnuser@sht-sgmhadoopcm-01 conf]$ vim /usr/local/contentplatform/hbase-0.92.1/conf/backup-masters

sht-sgmhadoopdn-01

rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/backup-masters sht-sgmhadoopdn-01:/usr/local/contentplatform/hbase-0.92.1/conf/

rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/backup-masters sht-sgmhadoopdn-02:/usr/local/contentplatform/hbase-0.92.1/conf/

rsync -avz --progress /usr/local/contentplatform/hbase-0.92.1/conf/backup-masters sht-sgmhadoopdn-03:/usr/local/contentplatform/hbase-0.92.1/conf/

Start HBase; if the cluster is already running, restart it:

[tnuser@sht-sgmhadoopcm-01 conf]$ stop-hbase.sh

[tnuser@sht-sgmhadoopcm-01 conf]$ start-hbase.sh

The master log on sht-sgmhadoopdn-01 shows that it started in backup mode and is waiting for the active master:

[tnuser@sht-sgmhadoopdn-01 conf]$ vim /usr/local/contentplatform/logs/hbase/hbase-tnuser-master-sht-sgmhadoopdn-01.log

2019-04-12 13:58:50,893 INFO org.apache.hadoop.hbase.master.metrics.MasterMetrics: Initialized

2019-04-12 13:58:50,899 DEBUG org.apache.hadoop.hbase.master.HMaster: HMaster started in backup mode. Stalling until master znode is written.

2019-04-12 13:58:50,924 ERROR org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper: Node /hbase/master already exists and this is not a retry

2019-04-12 13:58:50,925 INFO org.apache.hadoop.hbase.master.ActiveMasterManager: Adding ZNode for /hbase/backup-masters/sht-sgmhadoopdn-01,60000,1555048730644 in backup master directory

2019-04-12 13:58:50,941 INFO org.apache.hadoop.hbase.master.ActiveMasterManager: Another master is the active master, sht-sgmhadoopcm-01,60000,1555048728172; waiting to become the next active master

[tnuser@sht-sgmhadoopcm-01 hbase-0.92.1]$ jps

2913 JobTracker

2823 SecondaryNameNode

3667 Jps

3410 HMaster

3332 HQuorumPeer

2639 NameNode

[tnuser@sht-sgmhadoopdn-01 conf]$ jps

7539 HQuorumPeer

7140 DataNode

7893 HMaster

8054 Jps

7719 HRegionServer

7337 TaskTracker

Failover test: kill the active master on sht-sgmhadoopcm-01, then open the web UI of the new active master on sht-sgmhadoopdn-01:

[tnuser@sht-sgmhadoopcm-01 hbase]$ cat /tmp/hbase-tnuser-master.pid|xargs kill -9

http://172.16.101.58:60010
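A backup master logs the active master as an HBase server name of the form `host,port,startcode` (above: `sht-sgmhadoopcm-01,60000,1555048728172`). The hypothetical `active_master_from_log` function below extracts host and port from the last such line, a quick way to see which master a standby is waiting on. It relies on the exact 0.92.1 log wording shown above, which may differ in other versions.

```bash
# active_master_from_log  (hypothetical helper)
# Reads an HBase master log on stdin and prints "host:port" from the last
# "Another master is the active master, host,port,startcode;" line.
active_master_from_log() {
  sed -n 's/.*Another master is the active master, \([^,]*\),\([0-9]*\),[0-9]*;.*/\1:\2/p' | tail -n 1
}
```

For example: `active_master_from_log < /usr/local/contentplatform/logs/hbase/hbase-tnuser-master-sht-sgmhadoopdn-01.log` should print `sht-sgmhadoopcm-01:60000` while sht-sgmhadoopcm-01 is still active.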
