
2019/3/14 Thursday

Linux initialization script (for both CentOS 6 and CentOS 7)

Setting up ZooKeeper for production

Before installing Kafka, make sure ZooKeeper is already installed; see the two links above.

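Kafka tuning:

Sometimes the system has to absorb sudden bursts of data that can slow the disks down (for example, batch jobs that run at the start of every hour).

In that case, allow more dirty data to be held in memory and let background processes flush it to disk asynchronously.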

vm.dirty_background_ratio = 5

vm.dirty_ratio = 80

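With these settings, background writeback starts flushing asynchronously once dirty pages reach 5% of available memory, but the system does not force processes to write synchronously until dirty pages reach 80%. This smooths out disk I/O.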
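As a rough worked example of what these ratios mean in absolute terms, the sketch below converts a ratio into megabytes. It assumes the kernel's "dirtyable" memory can be approximated by MemAvailable from /proc/meminfo (the kernel's real calculation differs in detail), and the function name dirty_threshold_mb is hypothetical.

```shell
# Hypothetical helper: approximate a vm.dirty_* threshold in MB.
# Assumption: dirtyable memory ~= MemAvailable (in kB); the kernel's
# own calculation is more detailed than this.
dirty_threshold_mb() {
    avail_kb=$1   # available memory in kB
    ratio=$2      # vm.dirty_background_ratio or vm.dirty_ratio, in percent
    echo $(( avail_kb * ratio / 100 / 1024 ))
}

# Example: pretend 8 GB (8388608 kB) is available.
echo "background writeback starts at ~$(dirty_threshold_mb 8388608 5) MB"
echo "synchronous flushing is forced at ~$(dirty_threshold_mb 8388608 80) MB"

# On a real host, read the current value instead:
# avail_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
```

On an 8 GB host this works out to roughly 409 MB before background writeback starts and roughly 6.5 GB before writers are forced to flush synchronously.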

In practice:

echo "vm.dirty_background_ratio=5" >> /etc/sysctl.conf

echo "vm.dirty_ratio=80" >> /etc/sysctl.conf

sysctl -p
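Appending with >> adds another copy of each line every time the commands are re-run (the tuning section later in this article appends the same keys again). A minimal sketch of an idempotent alternative, assuming GNU sed (sed -i) as shipped with CentOS; the helper name set_sysctl is hypothetical:

```shell
# Hypothetical helper: set "key=value" in a sysctl config file without
# creating duplicates. An existing "key=value" or "key = value" line is
# rewritten in place; otherwise the setting is appended.
set_sysctl() {
    key=$1 value=$2 file=${3:-/etc/sysctl.conf}
    # Escape the dots so e.g. "vm.dirty_ratio" is matched literally.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^[[:space:]]*${key_re}[[:space:]]*=" "$file"; then
        sed -i "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key}=${value}|" "$file"
    else
        echo "${key}=${value}" >> "$file"
    fi
}

# Usage (as root), then apply with: sysctl -p
# set_sysctl vm.dirty_background_ratio 5
# set_sysctl vm.dirty_ratio 80
```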

Setting up Kafka for production

[root@emm-kafka01-10--174 ~]# cd /opt/ins/

[root@emm-kafka01-10--174 ins]# ll

total 233044

-rwxr-xr-x 1 root root 166044032 Mar 13 15:58 jdk-8u102-linux-x64.rpm

-rw-r--r-- 1 root root 50326212 Mar 13 16:14 kafka_2.12-1.1.0.tgz

-rw-r--r-- 1 root root 22261552 Mar 13 16:14 zookeeper-3.4.8.tar.gz

[root@emm-kafka01-10--174 ins]# tar -zxvf kafka_2.12-1.1.0.tgz -C /usr/local/

[root@emm-kafka01-10--174 ins]# cd /usr/local/

[root@emm-kafka01-10--174 local]# ln -s kafka_2.12-1.1.0/ kafka

[root@emm-kafka01-10--174 local]# ll

total 4

drwxr-xr-x. 2 root root 6 Apr 11 2018 bin

drwxr-xr-x. 2 root root 6 Apr 11 2018 etc

drwxr-xr-x. 2 root root 6 Apr 11 2018 games

drwxr-xr-x. 2 root root 6 Apr 11 2018 include

lrwxrwxrwx 1 root root 17 Mar 14 09:51 kafka -> kafka_2.12-1.1.0/

drwxr-xr-x 6 root root 83 Mar 24 2018 kafka_2.12-1.1.0

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib

drwxr-xr-x. 2 root root 6 Apr 11 2018 lib64

drwxr-xr-x. 2 root root 6 Apr 11 2018 libexec

drwxr-xr-x. 2 root root 6 Apr 11 2018 sbin

drwxr-xr-x. 5 root root 46 Apr 11 2018 share

drwxr-xr-x. 2 root root 6 Nov 12 13:03 src

lrwxrwxrwx 1 root root 15 Mar 13 18:20 zookeeper -> zookeeper-3.4.8

drwxr-xr-x 11 root root 4096 Mar 13 18:22 zookeeper-3.4.8

Edit the configuration file

We don't make any special changes to zookeeper.properties, producer.properties or consumer.properties.

We only modify server.properties.

[root@emm-kafka01-10--174 config]# pwd

/usr/local/kafka/config

[root@emm-kafka01-10--174 config]# ll

total 64

-rw-r--r-- 1 root root 906 Mar 24 2018 connect-console-sink.properties

-rw-r--r-- 1 root root 909 Mar 24 2018 connect-console-source.properties

-rw-r--r-- 1 root root 5807 Mar 24 2018 connect-distributed.properties

-rw-r--r-- 1 root root 883 Mar 24 2018 connect-file-sink.properties

-rw-r--r-- 1 root root 881 Mar 24 2018 connect-file-source.properties

-rw-r--r-- 1 root root 1111 Mar 24 2018 connect-log4j.properties

-rw-r--r-- 1 root root 2730 Mar 24 2018 connect-standalone.properties

-rw-r--r-- 1 root root 1221 Mar 24 2018 consumer.properties

-rw-r--r-- 1 root root 4727 Mar 24 2018 log4j.properties

-rw-r--r-- 1 root root 1919 Mar 24 2018 producer.properties

-rw-r--r-- 1 root root 6851 Mar 24 2018 server.properties

-rw-r--r-- 1 root root 1032 Mar 24 2018 tools-log4j.properties

-rw-r--r-- 1 root root 1023 Mar 24 2018 zookeeper.properties

[root@emm-kafka01-10--174 config]# ls -l server.properties

-rw-r--r-- 1 root root 6851 Mar 24 2018 server.properties

[root@emm-kafka01-10--174 config]# vim server.properties

...

...

...as follows:

server.properties under config/:

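broker.id=10 -- every broker in a Kafka cluster must have a different id

port=9092 -- the default Kafka port; if several Kafka instances run on one machine, each needs its own port (in Kafka 1.x `port` is deprecated in favor of `listeners`, e.g. listeners=PLAINTEXT://:9092)

log.dirs=/var/log/kafka/kafka-logs -- change this; it is where Kafka stores the topic data (the partition logs)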

zookeeper.connect=10.2.10.174:2181,10.2.10.175:2181,10.2.10.176:2181/kafkagroup

[root@emm-kafka01-10--174 config]# grep '^[a-zA-Z]' server.properties

broker.id=174 // different on every instance

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/var/log/kafka/kafka-logs

num.partitions=1 // default number of partitions per topic is 1

num.recovery.threads.per.data.dir=1

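offsets.topic.replication.factor=1 // value shipped in the sample file; for anything beyond testing, a value above 1 (such as 3) is recommended, and the same goes for the transaction.state.log settings below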

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.2.10.174:2181,10.2.10.175:2181,10.2.10.176:2181/kafkagroup

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

Note:

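Kafka relies on ZooKeeper for coordination, so zookeeper.connect is the connection to a standalone ZooKeeper server or a ZooKeeper ensemble; the value above connects to an ensemble. You could drop the /kafkagroup suffix, but then Kafka's znodes are created directly under the ZooKeeper root and the tree gets cluttered, so adding a chroot path is recommended.

With the path in place, a kafkagroup node appears in ZooKeeper's znode tree.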
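The chroot is written once, at the very end of the connection string, not after every host. A small sketch that assembles the value, assuming every ZooKeeper node listens on the default client port 2181; zk_connect is a hypothetical helper name:

```shell
# Hypothetical helper: build a zookeeper.connect value.
# $1 is the chroot path (may be empty), the remaining args are ZooKeeper hosts.
# Assumption: all nodes use client port 2181.
zk_connect() {
    chroot=$1; shift
    hosts=""
    for h in "$@"; do
        # Add a comma separator only after the first host.
        hosts="${hosts:+$hosts,}${h}:2181"
    done
    # The chroot is appended once, after the last host:port pair.
    printf '%s%s\n' "$hosts" "$chroot"
}

zk_connect /kafkagroup 10.2.10.174 10.2.10.175 10.2.10.176
```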

On the other two hosts, change broker.id in server.properties to 175 and 176 respectively.

Everything else stays the same.

[root@emm-kafka01-10--174 config]# scp server.properties root@10.2.10.175:/usr/local/kafka/config/

server.properties 100% 6911 2.5MB/s 00:00

[root@emm-kafka01-10--174 config]# scp server.properties root@10.2.10.176:/usr/local/kafka/config/

server.properties 100% 6911 2.8MB/s 00:0
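Instead of opening each copied file in vim, the broker.id line can be rewritten with sed. A sketch assuming the convention used here (broker id = last octet of the host's IP) and GNU sed; set_broker_id is a hypothetical name:

```shell
# Hypothetical helper: rewrite broker.id in a server.properties file.
# Assumption: broker id = last octet of the host IP (10.2.10.175 -> 175).
set_broker_id() {
    file=$1 ip=$2
    id=${ip##*.}   # strip everything up to and including the last dot
    sed -i "s/^broker\.id=.*/broker.id=${id}/" "$file"
}

# Usage on 10.2.10.175 after the scp above:
# set_broker_id /usr/local/kafka/config/server.properties 10.2.10.175
```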

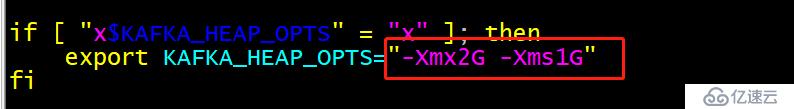

Modify the Kafka start script (to adjust the heap size it starts with)

[root@emm-kafka01-10--174 bin]# vim /usr/local/kafka/bin/kafka-server-start.sh

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

fi

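Change it as follows.

Our machines have 8 GB of RAM, so we use:

export KAFKA_HEAP_OPTS="-Xmx4G -Xms1G"

That is, a 4 GB maximum heap and a 1 GB initial heap.

On a machine with 4 GB of RAM, use:

export KAFKA_HEAP_OPTS="-Xmx2G -Xms1G"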
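The rule of thumb above (maximum heap = half of RAM, initial heap = 1 GB) can be written as a small function; kafka_heap_opts is a hypothetical name. Because the start script only applies its default when KAFKA_HEAP_OPTS is empty (the if test shown above), exporting the variable before starting Kafka is an alternative to editing the script.

```shell
# Hypothetical helper encoding the rule used above:
# max heap = half of physical RAM (in GB, rounded down, at least 1 GB),
# initial heap = 1 GB.
kafka_heap_opts() {
    mem_gb=$1
    xmx=$(( mem_gb / 2 ))
    if [ "$xmx" -lt 1 ]; then
        xmx=1
    fi
    printf '%s\n' "-Xmx${xmx}G -Xms1G"
}

# kafka-server-start.sh keeps a non-empty KAFKA_HEAP_OPTS, so:
# export KAFKA_HEAP_OPTS="$(kafka_heap_opts 8)"
# kafka-server-start.sh /usr/local/kafka/config/server.properties
```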

[root@emm-kafka01-10--174 bin]# scp kafka-server-start.sh root@10.2.10.175:/usr/local/kafka/bin/

kafka-server-start.sh 100% 1376 1.5MB/s 00:00

[root@emm-kafka01-10--174 bin]# scp kafka-server-start.sh root@10.2.10.176:/usr/local/kafka/bin/

kafka-server-start.sh 100% 1376 1.2MB/s 00:00

4. Start Kafka (in the background)

Before starting it, set up the environment variables:

[root@emm-kafka01-10--174 bin]# vim /etc/profile

export PATH=/usr/local/kafka/bin:/usr/local/zookeeper/bin:$PATH

[root@emm-kafka01-10--174 bin]# source /etc/profile

[root@emm-kafka01-10--174 bin]# which kafka-server-start.sh

/usr/local/kafka/bin/kafka-server-start.sh

[root@emm-kafka01-10--174 bin]# which zkServer.sh

/usr/local/zookeeper/bin/zkServer.sh

Do the same on the other two hosts.
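A quick way to confirm on each host that both start scripts resolve after sourcing /etc/profile, using the POSIX command -v builtin; check_on_path is a hypothetical helper.

```shell
# Hypothetical helper: print every given command that is not on PATH and
# return non-zero if at least one is missing.
check_on_path() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}

# On each host, after: source /etc/profile
# check_on_path kafka-server-start.sh zkServer.sh
```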

Start Kafka in the background

[root@emm-kafka01-10--174 bin]# cd ~

[root@emm-kafka01-10--174 ~]# nohup kafka-server-start.sh /usr/local/kafka/config/server.properties 1>/dev/null 2>&1 &

[1] 26314

[root@emm-kafka01-10--174 ~]# jps

14290 QuorumPeerMain

26643 Jps

26314 Kafka

[root@emm-kafka01-10--174 ~]# ps -ef|grep kafka

root 26314 24915 35 10:12 pts/0 00:00:11 java -Xmx2G -Xms1G -server

[root@emm-kafka01-10--174 ~]# sh zkCli.sh

[zk: localhost:2181(CONNECTED) 0] ls /

[kafkagroup, zookeeper]

[zk: localhost:2181(CONNECTED) 1] ls /kafkagroup

[cluster, controller, controller_epoch, brokers, admin, isr_change_notification, consumers, log_dir_event_notification, latest_producer_id_block, config]

The installation succeeded.
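The check above only looks at the tree. To confirm that all three brokers registered, run `ls /kafkagroup/brokers/ids` in the same zkCli.sh session; each live broker appears there under its broker.id. The sketch below, with the hypothetical name missing_brokers, compares that printed list (for example `[174, 175, 176]`) with the ids you expect:

```shell
# Hypothetical helper: $1 is the list printed by zkCli's
# "ls /kafkagroup/brokers/ids" (e.g. "[174, 175, 176]"), the remaining
# args are the expected broker ids. Prints each missing id and returns
# non-zero if any is missing.
missing_brokers() {
    # "[174, 175, 176]" -> one id per line
    registered=$(printf '%s\n' "$1" | sed 's/[][ ]//g' | tr ',' '\n')
    shift
    rc=0
    for id in "$@"; do
        if ! printf '%s\n' "$registered" | grep -qx "$id"; then
            echo "broker $id not registered"
            rc=1
        fi
    done
    return "$rc"
}

# missing_brokers "[174, 175, 176]" 174 175 176 && echo "all brokers registered"
```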

Next, test Kafka.

[root@emm-kafka01-10--174 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --create --topic majihui --partitions 2 --replication-factor 2

Created topic "majihui".

[root@emm-kafka01-10--174 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --describe --topic majihui

Topic:majihui PartitionCount:2 ReplicationFactor:2 Configs:

Topic: majihui Partition: 0 Leader: 174 Replicas: 174,175 Isr: 174,175

Topic: majihui Partition: 1 Leader: 175 Replicas: 175,176 Isr: 175,176
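In this output a partition is healthy when its Isr list contains every replica. A sketch that flags partitions whose ISR is shorter than the replica list, reading the describe output on stdin; the function name under_replicated is hypothetical, and kafka-topics.sh --describe also accepts --under-replicated-partitions for the same purpose.

```shell
# Hypothetical helper: read "kafka-topics.sh --describe" output on stdin and
# print partitions whose ISR has fewer members than the replica list.
# The ISR is always a subset of the replicas, so comparing counts is enough.
under_replicated() {
    awk '/Partition:/ {
        part = ""; reps = ""; isr = ""
        for (i = 1; i < NF; i++) {
            if ($i == "Partition:") part = $(i + 1)
            if ($i == "Replicas:")  reps = $(i + 1)
            if ($i == "Isr:")       isr  = $(i + 1)
        }
        if (split(isr, a, ",") < split(reps, b, ","))
            print "partition " part ": replicas=" reps " isr=" isr
    }'
}

# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --describe --topic majihui | under_replicated
```

No output means every partition currently has its full ISR.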

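Listing topics without the /kafkagroup chroot returns nothing, because the topics are registered under /kafkagroup:

[root@emm-kafka02-10--175 ~]# kafka-topics.sh --zookeeper 10.2.10.174 --list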

[root@emm-kafka02-10--175 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --list

__consumer_offsets

majihui

Start a producer

[root@emm-kafka02-10--175 ~]# kafka-console-producer.sh --zookeeper 10.2.10.174:2181 --topic majihui

zookeeper is not a recognized option

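This command no longer works: in this version the console producer does not accept --zookeeper and connects to the brokers directly with --broker-list: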

[root@emm-kafka02-10--175 ~]# kafka-console-producer.sh --broker-list 10.2.10.174:9092 --topic majihui

>hello

Start a consumer

[root@emm-kafka01-10--174 ~]# kafka-console-consumer.sh --bootstrap-server 10.2.10.174:9092 --topic majihui

hello

Delete the topic majihui

[root@emm-kafka02-10--175 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --delete --topic majihui

Topic majihui is marked for deletion.

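Note: This will have no impact if delete.topic.enable is not set to true.

Since Kafka 1.0, delete.topic.enable defaults to true, so the topic really is removed, as the listing below shows.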

[root@emm-kafka02-10--175 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --list

__consumer_offsets

[root@emm-kafka01-10--174 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --delete --topic __consumer_offsets

Error while executing topic command : Topic __consumer_offsets is a kafka internal topic and is not allowed to be marked for deletion.

// That is, __consumer_offsets is an internal Kafka topic (it stores consumer group offsets) and cannot be marked for deletion.

[2019-03-14 11:24:17,407] ERROR kafka.admin.AdminOperationException: Topic __consumer_offsets is a kafka internal topic and is not allowed to be marked for deletion.

at kafka.admin.TopicCommand$.$anonfun$deleteTopic$1(TopicCommand.scala:188)

at kafka.admin.TopicCommand$.$anonfun$deleteTopic$1$adapted(TopicCommand.scala:185)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:52)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at kafka.admin.TopicCommand$.deleteTopic(TopicCommand.scala:185)

at kafka.admin.TopicCommand$.main(TopicCommand.scala:71)

at kafka.admin.TopicCommand.main(TopicCommand.scala)

(kafka.admin.TopicCommand$)

[root@emm-kafka01-10--174 ~]# kafka-topics.sh --zookeeper 10.2.10.174/kafkagroup --list

__consumer_offsets

Because the command-line tools changed between versions, some older commands no longer work.

Keep this in mind.

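Kafka tuning (this adds vm.swappiness=1 and repeats the dirty-page settings from the start of this article; if you already appended those two lines earlier, don't append them again):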

[root@emm-kafka01-10--174 ~]# echo "vm.swappiness=1" >> /etc/sysctl.conf

[root@emm-kafka01-10--174 ~]# echo "vm.dirty_background_ratio=5" >> /etc/sysctl.conf

[root@emm-kafka01-10--174 ~]# echo "vm.dirty_ratio=80" >> /etc/sysctl.conf

[root@emm-kafka01-10--174 ~]# sysctl -p

vm.swappiness = 1

vm.dirty_background_ratio = 5

vm.dirty_ratio = 80


[root@emm-kafka01-10--174 ~]# scp /etc/sysctl.conf root@10.2.10.175:/etc/sysctl.conf

sysctl.conf 100% 511 529.6KB/s 00:00

[root@emm-kafka01-10--174 ~]# scp /etc/sysctl.conf root@10.2.10.176:/etc/sysctl.conf

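sysctl.conf 100% 511 497.6KB/s 00:00

Run sysctl -p on 10.2.10.175 and 10.2.10.176 as well; copying the file alone does not apply the settings there until the next reboot.

Kafka start script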

[root@emm-kafka01-10--174 scripts]# pwd

/opt/scripts

[root@emm-kafka01-10--174 scripts]# ll

total 16

-rwxr-xr-x 1 root root 6704 Mar 13 15:10 initialization.sh

-rw-r--r-- 1 root root 122 May 10 11:05 kafkastart.sh

-rw-r--r-- 1 root root 88 May 10 10:26 zkstart.sh

[root@emm-kafka01-10--174 scripts]# cat kafkastart.sh

#!/bin/bash

source /etc/profile

nohup /usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties 1>/dev/null 2>&1 &
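A possible variant of kafkastart.sh, sketched as a function with the hypothetical name kafka_start: it skips the start when a broker process (main class kafka.Kafka) is already running, and uses the -daemon option of kafka-server-start.sh, which backgrounds the broker by itself, instead of nohup. KAFKA_HOME is an assumed override that defaults to the /usr/local/kafka symlink.

```shell
# Hypothetical sketch in the spirit of kafkastart.sh (not the author's script).
kafka_start() {
    kafka_home=${KAFKA_HOME:-/usr/local/kafka}
    # The broker's main class is kafka.Kafka; do nothing if one is already up.
    if pgrep -f 'kafka\.Kafka' >/dev/null 2>&1; then
        echo "Kafka is already running" >&2
        return 0
    fi
    # -daemon detaches the broker, so nohup and the redirections are not needed.
    "$kafka_home/bin/kafka-server-start.sh" -daemon \
        "$kafka_home/config/server.properties"
}

# In kafkastart.sh: source /etc/profile, then call kafka_start
```

In daemon mode the console output goes to a kafkaServer.out file in the Kafka logs directory instead of /dev/null, which helps when a broker fails to start.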

[root@emm-kafka01-10--174 scripts]# scp kafkastart.sh root@10.2.10.175:/opt/scripts/

kafkastart.sh 100% 122 70.8KB/s 00:00

[root@emm-kafka01-10--174 scripts]# scp kafkastart.sh root@10.2.10.176:/opt/scripts/

kafkastart.sh 100% 122 61.6KB/s 00:00
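server.properties, kafka-server-start.sh, sysctl.conf and kafkastart.sh are each copied to the two peers with one scp per host. A small loop keeps that in one place; push_to_peers and its DRY_RUN switch are hypothetical, and root SSH access to the peers is assumed, as in the transcripts above.

```shell
# Hypothetical helper: copy one file to the same path on each peer host.
# Usage: push_to_peers SRC DEST HOST...
# With DRY_RUN=1 the scp commands are printed instead of executed.
push_to_peers() {
    src=$1 dest=$2; shift 2
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp $src root@$host:$dest"
        else
            scp "$src" "root@$host:$dest" || return 1
        fi
    done
}

# push_to_peers /opt/scripts/kafkastart.sh /opt/scripts/ 10.2.10.175 10.2.10.176
```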

[root@emm-kafka01-10--174 scripts]# sh kafkastart.sh

[root@emm-kafka01-10--174 scripts]# jps

11185 Jps

7974 QuorumPeerMain

10856 Kafka

[root@emm-kafka01-10--174 scripts]# netstat -lntup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1379/master

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1110/sshd

tcp6 0 0 ::1:25 :::* LISTEN 1379/master

tcp6 0 0 :::39549 :::* LISTEN 10856/java

tcp6 0 0 :::9092 :::* LISTEN 10856/java

tcp6 0 0 :::2181 :::* LISTEN 7974/java

tcp6 0 0 10.2.10.174:3888 :::* LISTEN 7974/java

tcp6 0 0 :::33264 :::* LISTEN 7974/java

tcp6 0 0 :::22 :::* LISTEN 1110/sshd

Add the start scripts to boot-time startup

[root@emm-kafka03-10--176 scripts]# echo "/usr/bin/sh /opt/scripts/kafkastart.sh" >> /etc/rc.d/rc.local

[root@emm-kafka03-10--176 scripts]# cat /etc/rc.d/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

ulimit -SHn 65535

echo 'never' >/sys/kernel/mm/transparent_hugepage/enabled

echo 'never' >/sys/kernel/mm/transparent_hugepage/defrag

/usr/bin/sh /opt/scripts/zkstart.sh

/usr/bin/sh /opt/scripts/kafkastart.sh
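The comment in rc.local itself recommends a systemd service on CentOS 7. A sketch that writes a minimal unit for the broker; the unit name kafka.service and the helper name write_kafka_unit are assumptions, and LimitNOFILE mirrors the ulimit -SHn 65535 line above. Unlike rc.local, systemd does not read /etc/profile, so this assumes java is reachable on systemd's default PATH (the JDK rpm normally provides /usr/bin/java) or that you add an Environment=JAVA_HOME=... line; ZooKeeper would need a unit of its own.

```shell
# Hypothetical helper: write kafka.service into a unit directory.
# $1 = unit directory, $2 = Kafka install dir (defaults shown below).
write_kafka_unit() {
    dir=${1:-/etc/systemd/system}
    kafka_home=${2:-/usr/local/kafka}
    cat > "$dir/kafka.service" <<EOF
[Unit]
Description=Apache Kafka broker
After=network.target

[Service]
Type=simple
LimitNOFILE=65535
ExecStart=$kafka_home/bin/kafka-server-start.sh $kafka_home/config/server.properties
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# As root (CentOS 7's systemd 219 predates "enable --now"):
# write_kafka_unit && systemctl daemon-reload && systemctl enable kafka && systemctl start kafka
```

If you switch to the unit, remove the kafkastart.sh line from rc.local so the broker is not started twice.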

[root@emm-kafka03-10--176 scripts]# chmod +x /etc/rc.d/rc.local

Kafka tuning reference: https://www.cnblogs.com/yinzhengjie/p/9994207.html
