
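Today I'll summarize some of the problems I ran into with MySQL 5.7 and how to handle them; they are fairly routine. I won't go over the setup process again: yesterday's article shows a basic approach that is easy to simulate in a test environment. Does it work the same way across multiple physical machines? Yes, it does; I have tried each case myself.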

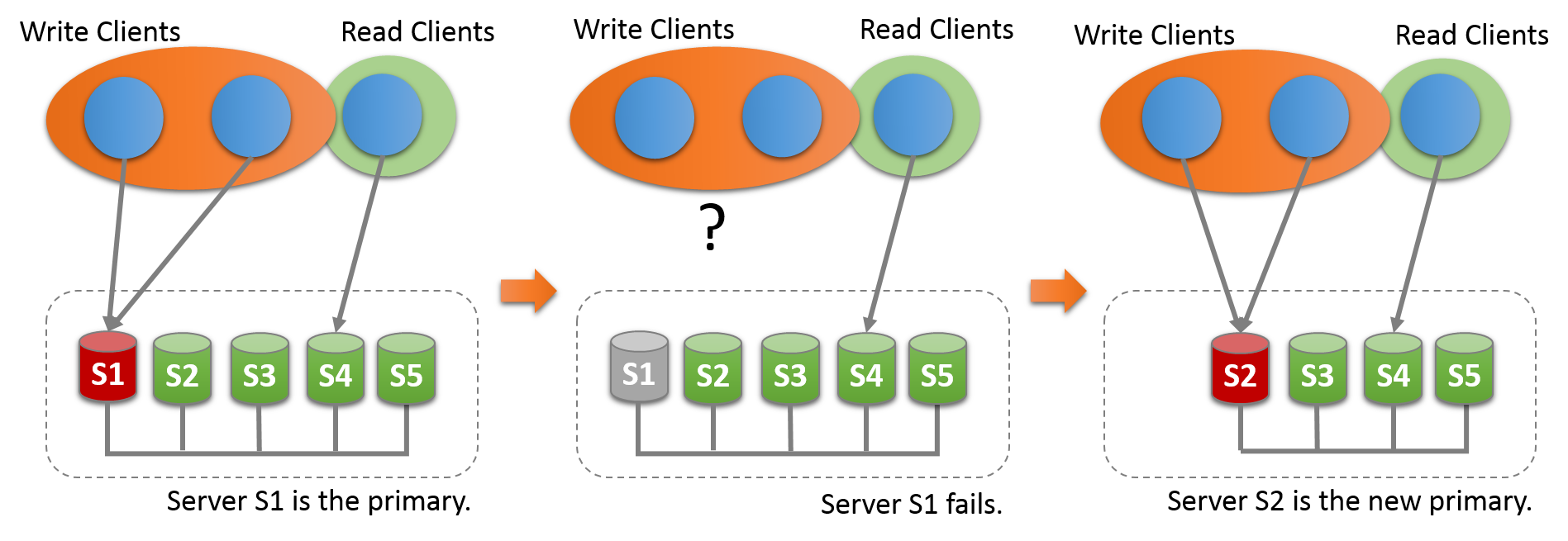

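The officially recommended setup is single_primary mode: one primary takes the writes and the other members serve reads, which gives you read/write splitting. multi_primary is supported and in theory works as well, but it still has a few small problems, so the examples here use single_primary.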

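Problem 1:

When a read node joins the group, start group_replication fails with the errors below. If you have reached this error, you are usually not far from a working setup.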

2017-02-20T07:56:30.064556Z 0 [ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: 89328c79-f730-11e6-ab63-782bcb377193:1-2 > Group transactions: 7c744904-f730-11e6-a72d-782bcb377193:1-4'

2017-02-20T07:56:30.064580Z 0 [ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member will now exit the group.'

2017-02-20T07:56:30.064587Z 0 [Note] Plugin group_replication reported: 'To force this member into the group you can use the group_replication_allow_local_disjoint_gtids_join option'

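The log already says what to do: set the option that lets the member join the group despite its extra local GTIDs. After setting group_replication_allow_local_disjoint_gtids_join, run start group_replication again.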
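As a rough sketch of what that looks like on the joining member, using the GTID sets from the log above: GTID_SUBSET() is a built-in MySQL function that makes the mismatch visible, and the SET GLOBAL line applies the option the log suggests. Keep in mind that this option skips a safety check, so transactions that exist only on this member are not applied to the rest of the group. As far as I know it was deprecated in later 5.7 releases and no longer exists in MySQL 8.0, so treat this as specific to the 5.7 setup described here.

```sql
-- Returns 0: the local GTID set is not contained in the group's set,
-- which is what the error message is complaining about.
SELECT GTID_SUBSET('89328c79-f730-11e6-ab63-782bcb377193:1-2',
                   '7c744904-f730-11e6-a72d-782bcb377193:1-4') AS local_is_subset;

-- On the joining member only: allow the join despite the extra local GTIDs,
-- then retry.
SET GLOBAL group_replication_allow_local_disjoint_gtids_join = ON;
START GROUP_REPLICATION;
```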

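Problem 2:

If you hit this error, there is no need to worry too much either. The log shows that it is caused by incompatible settings, for example the primary (writer) configured as multi-primary while a read node is configured as single-primary. Make the setting consistent across the nodes and the error goes away.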

2017-02-21T10:20:56.324890+08:00 0 [ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: 87b9c8fe-f352-11e6-bb33-0026b935eb76:1-5, b79d42f4-f351-11e6-9891-0026b935eb76:1, f7c7b9f8-f352-11e6-b1de-a4badb1b524e:1 > Group transactions: 87b9c8fe-f352-11e6-bb33-0026b935eb76:1-5, b79d42f4-f351-11e6-9891-0026b935eb76:1'

2017-02-21T10:20:56.324971+08:00 0 [ERROR] Plugin group_replication reported: 'The member configuration is not compatible with the group configuration. Variables such as single_primary_mode or enforce_update_everywhere_checks must have the same value on every server in the group. (member configuration option: [], group configuration option: [group_replication_single_primary_mode]).'

2017-02-21T10:20:56.325052+08:00 19 [Note] Plugin group_replication reported: 'Going to wait for view modification'

2017-02-21T10:20:56.325594+08:00 0 [Note] Plugin group_replication reported: 'getstart group_id 53d187f2'
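To find the node that is out of line, one option is to compare the two variables named in the error on every member. The sketch below assumes the group is meant to run in single-primary mode, which is what the "group configuration option" part of the log above suggests; swap the values if your group is multi-primary. Settings changed with SET GLOBAL are lost on restart, so the same values would also need to go into the configuration file.

```sql
-- Run on every member and compare the results.
SELECT @@global.group_replication_single_primary_mode AS single_primary_mode,
       @@global.group_replication_enforce_update_everywhere_checks AS enforce_checks;

-- On the member that differs. Both variables can only be changed while
-- Group Replication is stopped on that member, so stop it first if it is
-- still running.
STOP GROUP_REPLICATION;
-- enforce_update_everywhere_checks must be OFF before single-primary mode
-- can be switched ON.
SET GLOBAL group_replication_enforce_update_everywhere_checks = OFF;
SET GLOBAL group_replication_single_primary_mode = ON;
START GROUP_REPLICATION;
```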

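Problem 3:

This one troubled me for a long time. At its core it is a node configuration issue: the group_name setting must not be set to each node's own uuid. For nodes 1, 2 and 3, for example, group_replication_group_name has to be identical on all of them. After every failure I had been carefully copying each node's uuid into it, which turned out to be exactly the wrong thing to do.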

2017-02-22T14:46:35.819072Z 0 [Warning] Plugin group_replication reported: 'read failed'

2017-02-22T14:46:35.851829Z 0 [ERROR] Plugin group_replication reported: '[GCS] The member was unable to join the group. Local port: 24902'

2017-02-22T14:47:05.814080Z 30 [ERROR] Plugin group_replication reported: 'Timeout on wait for view after joining group'

2017-02-22T14:47:05.814183Z 30 [Note] Plugin group_replication reported: 'Requesting to leave the group despite of not being a member'

2017-02-22T14:47:05.814213Z 30 [ERROR] Plugin group_replication reported: '[GCS] The member is leaving a group without being on one.'

2017-02-22T14:47:05.814567Z 30 [Note] Plugin group_replication reported: 'auto_increment_increment is reset to 1'

2017-02-22T14:47:05.814583Z 30 [Note] Plugin group_replication reported: 'auto_increment_offset is reset to 1'

2017-02-22T14:47:05.814859Z 36 [Note] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

2017-02-22T14:47:05.815720Z 33 [Note] Plugin group_replication reported: 'The group replication applier thread was killed'

Once the group name is the same on every node, startup is actually quick.

mysql> start group_replication;

Query OK, 0 rows affected (1.52 sec)
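To check for the mismatch described in this problem, something like the following can be run on each node. The UUID in the SET statement is a made-up placeholder, not a value from my environment; any valid UUID should do, provided every node uses the same one and it is not the server_uuid of any node.

```sql
-- Run on each node. server_uuid differs per node; group_name must not.
SELECT @@global.server_uuid AS server_uuid,
       @@global.group_replication_group_name AS group_name;

-- Hypothetical group name, to be set identically on every node
-- while Group Replication is stopped on that node.
SET GLOBAL group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa';
```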

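Those are basically the kinds of problems that come up during setup. There are also hostname-related problems, an area that still has a few small bugs; if you need a specific host setting, you can specify report_host.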

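Problem 4:

With the environment up, let's create an ordinary table. This is exactly the kind of situation where good habits and conventions matter.

Create the table test_tab:

create table test_tab (id int,name varchar(30));

Then insert a row. This looks like a perfectly normal operation, but in MGR it fails, because a basic requirement is that every table has a primary key.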

mysql> insert into test_tab values(1,'a');

ERROR 3098 (HY000): The table does not comply with the requirements by an external plugin.

The fix is to add a primary key:

mysql> alter table test_tab add primary key(id);

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0
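Because the error only shows up at write time, it can help to look for tables without a primary key before loading data. The query below is a sketch against information_schema; the list of excluded schemas is my own assumption about what counts as a system schema, so adjust it to your environment.

```sql
-- List user tables that have no PRIMARY KEY constraint.
SELECT t.table_schema, t.table_name
FROM information_schema.tables AS t
LEFT JOIN information_schema.table_constraints AS c
       ON c.table_schema = t.table_schema
      AND c.table_name = t.table_name
      AND c.constraint_type = 'PRIMARY KEY'
WHERE t.table_type = 'BASE TABLE'
  AND t.table_schema NOT IN ('mysql', 'information_schema',
                             'performance_schema', 'sys')
  AND c.constraint_name IS NULL;
```

On the environment above this should list test_tab before the alter table and return nothing for it afterwards.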

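Problem 5 (simulating a disaster):

The group we have built runs in single-primary mode. What happens to the group if the primary fails? It promotes the second node, S2, to primary.

This is easy to test. We kill the service on node 1 directly and see where the primary role moves.

First, the state of the group: there are currently 5 nodes, and we kill node 1, the one on port 24801.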

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 52d26194-f90a-11e6-a247-782bcb377193 | grtest | 24801 | ONLINE |

| group_replication_applier | 5abaaf89-f90a-11e6-b4de-782bcb377193 | grtest | 24802 | ONLINE |

| group_replication_applier | 655248b9-f90a-11e6-86b4-782bcb377193 | grtest | 24803 | ONLINE |

| group_replication_applier | 6defc92c-f90a-11e6-990c-782bcb377193 | grtest | 24804 | ONLINE |

| group_replication_applier | 76bc07a1-f90a-11e6-ab0a-782bcb377193 | grtest | 24805 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+
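The table above is the content of performance_schema.replication_group_members. A minimal sketch of a query that lists the same members, sorted by member_id, follows. As far as I understand the 5.7 election rules, when no member weight is configured the group picks the online member with the lowest server_uuid, so in this ordering the row right after the current primary is the likely successor. Treat that as my reading of the behavior, not a guarantee.

```sql
-- Members of the group, ordered by their server_uuid (member_id).
SELECT member_id, member_host, member_port, member_state
FROM performance_schema.replication_group_members
ORDER BY member_id;
```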

Node 2 writes the following log entries, which means it has officially taken over as primary.

2017-02-22T14:59:45.157989Z 0 [Note] Plugin group_replication reported: 'getstart group_id 98e4de29'

2017-02-22T14:59:45.434062Z 0 [Note] Plugin group_replication reported: 'Unsetting super_read_only.'

2017-02-22T14:59:45.434130Z 40 [Note] Plugin group_replication reported: 'A new primary was elected, enabled conflict detection until the new primary applies all relay logs'

The group membership then looks like this. As expected, the first node has been removed.

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 5abaaf89-f90a-11e6-b4de-782bcb377193 | grtest | 24802 | ONLINE |

| group_replication_applier | 655248b9-f90a-11e6-86b4-782bcb377193 | grtest | 24803 | ONLINE |

| group_replication_applier | 6defc92c-f90a-11e6-990c-782bcb377193 | grtest | 24804 | ONLINE |

| group_replication_applier | 76bc07a1-f90a-11e6-ab0a-782bcb377193 | grtest | 24805 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

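From the log we can see that the second node was promoted to primary. That raises a question.

Problem 6:

How do you tell which member of a replication group is the primary? You shouldn't have to guess or dig through the logs.

The following statement filters it out.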

mysql> select * from performance_schema.replication_group_members
    -> where member_id = (select variable_value from performance_schema.global_status
    ->                    where variable_name = 'group_replication_primary_member');

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 5abaaf89-f90a-11e6-b4de-782bcb377193 | grtest | 24802 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

1 row in set (0.00 sec)
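A variation on the same idea, as a sketch: list every member and label its role in one result set, instead of filtering down to the primary. The member_role column name is my own alias, computed here from the same group_replication_primary_member status variable. I believe MySQL 8.0 exposes a MEMBER_ROLE column on this table directly, but on the 5.7 setup used in this article it has to be derived like this.

```sql
-- Label each member as PRIMARY or SECONDARY (single-primary mode, MySQL 5.7).
SELECT m.member_host,
       m.member_port,
       m.member_state,
       IF(m.member_id = s.variable_value, 'PRIMARY', 'SECONDARY') AS member_role
FROM performance_schema.replication_group_members AS m
CROSS JOIN performance_schema.global_status AS s
WHERE s.variable_name = 'group_replication_primary_member';
```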
