
System: CentOS 7

192.168.1.55: MySQL port 3306, Percona-XtraDB-Cluster, primary node

192.168.1.56: MySQL port 3307, Percona-XtraDB-Cluster + haproxy + keepalived

192.168.1.57: MySQL port 3307, Percona-XtraDB-Cluster + haproxy + keepalived

192.168.4.58/21: virtual IP (VIP)

Software versions: Percona-XtraDB-Cluster-5.7.16-27.19, percona-xtrabackup-2.4.5, haproxy-1.7.0, keepalived-1.3.2

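I. Linux system configuration

1. Turn off the system firewall

Stop the firewall:

systemctl stop firewalld.service

Disable it at boot:

systemctl disable firewalld.service

2. Turn off SELinux

Set SELinux to disabled in the configuration file (this takes effect after a reboot):

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

Switch SELinux to permissive mode immediately, without rebooting:

setenforce 0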

II. Installation conventions

Software compiled from source is installed to /usr/local/<software name>

Data directory: /apps/data

III. Download the source packages

Download Percona-XtraDB-Cluster-5.7.16-27.19

wget -P /usr/local/src https://www.percona.com/downloads/Percona-XtraDB-Cluster-57/Percona-XtraDB-Cluster-5.7.16-27.19/rel-5.7.16/source/tarball/Percona-XtraDB-Cluster-5.7.16-27.19.tar.gz

Download percona-xtrabackup-2.4.5

wget -P /usr/local/src https://www.percona.com/downloads/XtraBackup/Percona-XtraBackup-2.4.5/source/tarball/percona-xtrabackup-2.4.5.tar.gz

Download boost_1_59_0

mkdir -p /usr/local/boost

wget -P /usr/local/boost https://nchc.dl.sourceforge.net/project/boost/boost/1.59.0/boost_1_59_0.tar.gz

Download haproxy-1.7.0

wget -P /usr/local/src http://www.haproxy.org/download/1.7/src/haproxy-1.7.0.tar.gz

Download keepalived-1.3.2

wget -P /usr/local/src http://www.keepalived.org/software/keepalived-1.3.2.tar.gz

Download automake-1.15

wget -P /usr/local/src ftp://ftp.gnu.org/gnu/automake/automake-1.15.tar.gz

IV. Install the build environment and build dependencies

1. Install the EPEL repository

yum -y install epel-release

2. Remove any old MySQL or MariaDB packages

yum -y remove mariadb* mysql*

3. Install the build environment

yum -y install libtool ncurses-devel libgcrypt-devel libev-devel \
  git scons gcc gcc-c++ openssl check cmake bison boost-devel \
  asio-devel libaio-devel ncurses-devel readline-devel pam-devel socat \
  libaio automake autoconf vim redhat-lsb check-devel curl curl-devel xinetd

4. Install the keepalived dependencies (on 192.168.1.56 and 192.168.1.57)

yum -y install libnl-devel openssl-devel libnfnetlink-devel ipvsadm \
  popt-devel libnfnetlink kernel-devel popt-static iptraf

V. Compile and install Percona-XtraDB-Cluster-5.7.16-27.19 and percona-xtrabackup-2.4.5

1. Unpack percona-xtrabackup-2.4.5.tar.gz

tar -xvf /usr/local/src/percona-xtrabackup-2.4.5.tar.gz -C /usr/local/src/

2. Unpack Percona-XtraDB-Cluster-5.7.16-27.19.tar.gz

tar -xvf /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19.tar.gz -C /usr/local/src/

3. Compile percona-xtrabackup-2.4.5

cd /usr/local/src/percona-xtrabackup-2.4.5/

cmake ./ -DBUILD_CONFIG=xtrabackup_release \
  -DWITH_MAN_PAGES=OFF \
  -DDOWNLOAD_BOOST=1 \
  -DWITH_BOOST="/usr/local/boost"

make -j4 && make install

ln -sf /usr/local/xtrabackup/bin/* /usr/sbin/

4. Compile and install Percona-XtraDB-Cluster-5.7.16-27.19

4.1 Create the mysql user and directories

mkdir -p /apps/{run,log}/mysqld

useradd mysql -s /sbin/nologin -M

chown -R mysql:mysql /apps

4.2 Compile garbd and libgalera_smm.so

cd /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19

cd "percona-xtradb-cluster-galera"

Get the revno value:

cat GALERA-REVISION

scons -j4 psi=1 --config=force revno="b98f92f" boost_pool=0 libgalera_smm.so

scons -j4 --config=force revno="b98f92f" garb/garbd

Create the PXC installation directory:

mkdir -p /usr/local/Percona-XtraDB-Cluster/{bin,lib}

Copy the build results into the PXC installation directory:

cp garb/garbd /usr/local/Percona-XtraDB-Cluster/bin

cp libgalera_smm.so /usr/local/Percona-XtraDB-Cluster/lib

4.3 Compile Percona-XtraDB-Cluster-5.7.16-27.19

Obtain the value for -DMYSQL_SERVER_SUFFIX:

cd /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19

WSREP_VERSION="$(grep WSREP_INTERFACE_VERSION wsrep/wsrep_api.h | cut -d '"' -f2).$(grep 'SET(WSREP_PATCH_VERSION' "cmake/wsrep.cmake" | cut -d '"' -f2)"

echo $WSREP_VERSION

Obtain the value for -DCOMPILATION_COMMENT:

cd /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19

source VERSION

MYSQL_VERSION="$MYSQL_VERSION_MAJOR.$MYSQL_VERSION_MINOR.$MYSQL_VERSION_PATCH"

echo $MYSQL_VERSION

REVISION="$(grep '^short: ' Docs/INFO_SRC | sed -e 's/short: //')"

The -DCOMPILATION_COMMENT value has the form:

Percona XtraDB Cluster binary (GPL) $MYSQL_VERSION-$WSREP_VERSION, Revision $REVISION

Compile Percona-XtraDB-Cluster-5.7.16-27.19:

cd /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19

cmake ./ -DBUILD_CONFIG=mysql_release \
  -DCMAKE_BUILD_TYPE=RelWithDebInfo \
  -DWITH_EMBEDDED_SERVER=OFF \
  -DFEATURE_SET=community \
  -DENABLE_DTRACE=OFF \
  -DWITH_SSL=system -DWITH_ZLIB=system \
  -DCMAKE_INSTALL_PREFIX="/usr/local/Percona-XtraDB-Cluster" \
  -DMYSQL_DATADIR="/apps/data" \
  -DMYSQL_SERVER_SUFFIX="27.19" \
  -DWITH_INNODB_DISALLOW_WRITES=ON \
  -DWITH_WSREP=ON \
  -DWITH_UNIT_TESTS=0 \
  -DWITH_READLINE=system \
  -DWITHOUT_TOKUDB=ON \
  -DCOMPILATION_COMMENT="Percona XtraDB Cluster binary (GPL) 5.7.16-27.19, Revision bec0879" \
  -DWITH_PAM=ON \
  -DWITH_INNODB_MEMCACHED=ON \
  -DDOWNLOAD_BOOST=1 \
  -DWITH_BOOST="/usr/local/boost" \
  -DWITH_SCALABILITY_METRICS=ON

make -j4 && make install

Copy the test files into the installation directory:

cp -R /usr/local/src/Percona-XtraDB-Cluster-5.7.16-27.19/percona-xtradb-cluster-tests /usr/local/Percona-XtraDB-Cluster/

Create symbolic links so that the MySQL binaries are on the system path:

ln -sf /usr/local/Percona-XtraDB-Cluster/bin/* /usr/sbin/

Copy the startup script to the system init directory:

cp -R /usr/local/Percona-XtraDB-Cluster/support-files/mysql.server /etc/init.d/mysqld

Copy the health-check file to the xinetd configuration directory:

cp -R /usr/local/Percona-XtraDB-Cluster/xinetd.d/mysqlchk /etc/xinetd.d/

Register the library directory with the dynamic linker:

echo "/usr/local/Percona-XtraDB-Cluster/lib" >> /etc/ld.so.conf

ldconfig
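The -DCOMPILATION_COMMENT string is built from three parts: the MySQL version, the wsrep version, and the source revision. As a minimal sketch, the assembly can be wrapped in a small function so the three values are passed in explicitly. The name build_comment is hypothetical and not part of the PXC source tree; the sample values are the ones used in the cmake command in this article.

```shell
# build_comment is a hypothetical helper, not part of the PXC source tree.
# Arguments: MySQL version, wsrep version, short source revision.
build_comment() {
    local mysql_version="$1" wsrep_version="$2" revision="$3"
    printf 'Percona XtraDB Cluster binary (GPL) %s-%s, Revision %s\n' \
        "$mysql_version" "$wsrep_version" "$revision"
}

# Example with the values that appear in this article's cmake command.
build_comment "5.7.16" "27.19" "bec0879"
```

In a real build, the three arguments would come from the MYSQL_VERSION, WSREP_VERSION and REVISION variables computed in the source directory.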

4.4 Configure the MySQL health check

vim /etc/xinetd.d/mysqlchk

Change

server = /usr/bin/clustercheck

to

server = /usr/sbin/clustercheck

Alternatively, leave the line unchanged and copy /usr/local/Percona-XtraDB-Cluster/bin/clustercheck to /usr/bin/.

vim /etc/services

Add:

mysqlchk 9200/tcp # mysqlchk

4.5 my.cnf configuration

On 192.168.1.55:

vim /etc/my.cnf

# Template my.cnf for PXC
# Edit to your requirements.
[client]
socket=/apps/data/mysql.sock
port=3306
[mysqld]
user=mysql
server-id=10055
datadir=/apps/data
socket=/apps/data/mysql.sock
log-error=/apps/log/mysqld/mysqld.log
pid-file=/apps/run/mysqld/mysqld.pid
log-bin
log_slave_updates
expire_logs_days=7
port=3306
character_set_server = utf8
# Disabling symbolic-links is recommended to prevent assorted security risks
symbolic-links=0
# Path to Galera library
wsrep_provider=/usr/local/Percona-XtraDB-Cluster/lib/libgalera_smm.so
# Cluster connection URL contains IPs of nodes
# If no IP is found, this implies that a new cluster needs to be created,
# in order to do that you need to bootstrap this node
wsrep_cluster_address='gcomm://192.168.1.55,192.168.1.56,192.168.1.57'
#wsrep_cluster_address=gcomm://
# In order for Galera to work correctly binlog format should be ROW
binlog_format=ROW
# MyISAM storage engine has only experimental support
default_storage_engine=InnoDB
wsrep_sst_receive_address=192.168.1.55:6010
wsrep_node_incoming_address=192.168.1.55
wsrep_provider_options = "gmcast.listen_addr=tcp://192.168.1.55;ist.recv_addr=192.168.1.55:6020;"
# Slave thread to use
wsrep_slave_threads= 8
wsrep_log_conflicts
# This changes how InnoDB autoincrement locks are managed and is a requirement for Galera
innodb_autoinc_lock_mode=2
# Node IP address
wsrep_node_address=192.168.1.55
# Cluster name
wsrep_cluster_name=pxc-cluster
# If wsrep_node_name is not specified, then system hostname will be used
wsrep_node_name=pxc-cluster-node-1
# pxc_strict_mode allowed values: DISABLED,PERMISSIVE,ENFORCING,MASTER
pxc_strict_mode=ENFORCING
# SST method
wsrep_sst_method=xtrabackup-v2
#wsrep_sst_method=rsync
# Authentication for SST method
# Change this to the account and password you created
wsrep_sst_auth="sstuser:456789"

On 192.168.1.56:

vim /etc/my.cnf

# Template my.cnf for PXC
# Edit to your requirements.
[client]
socket=/apps/data/mysql.sock
port=3307
[mysqld]
user=mysql
server-id=10056
datadir=/apps/data
socket=/apps/data/mysql.sock
log-error=/apps/log/mysqld/mysqld.log
pid-file=/apps/run/mysqld/mysqld.pid
log-bin
log_slave_updates
expire_logs_days=7
port=3307
character_set_server = utf8
# Disabling symbolic-links is recommended to prevent assorted security risks
symbolic-links=0
# Path to Galera library
wsrep_provider=/usr/local/Percona-XtraDB-Cluster/lib/libgalera_smm.so
# Cluster connection URL contains IPs of nodes
# If no IP is found, this implies that a new cluster needs to be created,
# in order to do that you need to bootstrap this node
wsrep_cluster_address='gcomm://192.168.1.55,192.168.1.56,192.168.1.57'
#wsrep_cluster_address=gcomm://
# In order for Galera to work correctly binlog format should be ROW
binlog_format=ROW
# MyISAM storage engine has only experimental support
default_storage_engine=InnoDB
wsrep_sst_receive_address=192.168.1.56:6010
wsrep_node_incoming_address=192.168.1.56
wsrep_provider_options = "gmcast.listen_addr=tcp://192.168.1.56;ist.recv_addr=192.168.1.56:6020;"
# Slave thread to use
wsrep_slave_threads= 8
wsrep_log_conflicts
# This changes how InnoDB autoincrement locks are managed and is a requirement for Galera
innodb_autoinc_lock_mode=2
# Node IP address
wsrep_node_address=192.168.1.56
# Cluster name
wsrep_cluster_name=pxc-cluster
# If wsrep_node_name is not specified, then system hostname will be used
wsrep_node_name=pxc-cluster-node-2
# pxc_strict_mode allowed values: DISABLED,PERMISSIVE,ENFORCING,MASTER
pxc_strict_mode=ENFORCING
# SST method
wsrep_sst_method=xtrabackup-v2
#wsrep_sst_method=rsync
# Authentication for SST method
# Change this to the account and password you created
wsrep_sst_auth="sstuser:456789"

On 192.168.1.57:

vim /etc/my.cnf

# Template my.cnf for PXC
# Edit to your requirements.
[client]
socket=/apps/data/mysql.sock
port=3307
[mysqld]
user=mysql
server-id=10057
datadir=/apps/data
socket=/apps/data/mysql.sock
log-error=/apps/log/mysqld/mysqld.log
pid-file=/apps/run/mysqld/mysqld.pid
log-bin
log_slave_updates
expire_logs_days=7
port=3307
character_set_server = utf8
# Disabling symbolic-links is recommended to prevent assorted security risks
symbolic-links=0
# Path to Galera library
wsrep_provider=/usr/local/Percona-XtraDB-Cluster/lib/libgalera_smm.so
# Cluster connection URL contains IPs of nodes
# If no IP is found, this implies that a new cluster needs to be created,
# in order to do that you need to bootstrap this node
wsrep_cluster_address='gcomm://192.168.1.55,192.168.1.56,192.168.1.57'
#wsrep_cluster_address=gcomm://
# In order for Galera to work correctly binlog format should be ROW
binlog_format=ROW
# MyISAM storage engine has only experimental support
default_storage_engine=InnoDB
wsrep_sst_receive_address=192.168.1.57:6010
wsrep_node_incoming_address=192.168.1.57
wsrep_provider_options = "gmcast.listen_addr=tcp://192.168.1.57;ist.recv_addr=192.168.1.57:6020;"
# Slave thread to use
wsrep_slave_threads= 8
wsrep_log_conflicts
# This changes how InnoDB autoincrement locks are managed and is a requirement for Galera
innodb_autoinc_lock_mode=2
# Node IP address
wsrep_node_address=192.168.1.57
# Cluster name
wsrep_cluster_name=pxc-cluster
# If wsrep_node_name is not specified, then system hostname will be used
wsrep_node_name=pxc-cluster-node-3
# pxc_strict_mode allowed values: DISABLED,PERMISSIVE,ENFORCING,MASTER
pxc_strict_mode=ENFORCING
# SST method
wsrep_sst_method=xtrabackup-v2
#wsrep_sst_method=rsync
# Authentication for SST method
# Change this to the account and password you created
wsrep_sst_auth="sstuser:456789"
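The three my.cnf files differ only in the node's IP address, its port, its server-id and its node name. A rough sketch of a helper that prints just those per-node [mysqld] lines might look like the following. The function name node_settings is hypothetical, and the server-id rule (100 followed by the last octet of the IP) is inferred from the values 10055, 10056 and 10057 used above. The port in the [client] section has to be changed to match as well.

```shell
# node_settings is a hypothetical helper, not part of PXC.
# Arguments: node IP, MySQL port, node index (1, 2, 3).
node_settings() {
    local ip="$1" port="$2" index="$3"
    # Assumed rule, inferred from this article: server-id is "100" plus the last octet.
    local last_octet="${ip##*.}"
    cat <<EOF
server-id=100${last_octet}
port=${port}
wsrep_sst_receive_address=${ip}:6010
wsrep_node_incoming_address=${ip}
wsrep_provider_options = "gmcast.listen_addr=tcp://${ip};ist.recv_addr=${ip}:6020;"
wsrep_node_address=${ip}
wsrep_node_name=pxc-cluster-node-${index}
EOF
}

# Print the per-node lines for the second node.
node_settings 192.168.1.56 3307 2
```

Comparing this output against each node's /etc/my.cnf is a quick way to catch a copy-and-paste mistake such as a leftover IP address from another node.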

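4.6 Initialize the database

On 192.168.1.55:

Initialize the data directory:

mysqld --initialize --user=mysql --datadir="/apps/data"

Start the database:

/etc/init.d/mysqld start --wsrep-cluster-address="gcomm://"

Stop the database. From MySQL 5.7 onward a password is required to log in, so the root password has to be reset first:

/etc/init.d/mysqld stop

Start MySQL in safe mode, skipping the grant tables, to change the password:

mysqld_safe --wsrep-cluster-address=gcomm:// --user=mysql --skip-grant-tables --skip-networking &

Connect with the mysql client and run the following statements:

mysql

use mysql;

update user set authentication_string=Password('123456') where user='root';

flush privileges;

shutdown;

exit

Check whether any MySQL processes remain:

ps -ef | grep mysql

Wait until MySQL has shut down completely, then start it again:

/etc/init.d/mysqld start --wsrep-cluster-address="gcomm://"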

Log in to MySQL to create the replication account and set up root access:

mysql -u root -p123456

Create the account and password used for state transfer (SST):

GRANT ALL PRIVILEGES ON *.* TO sstuser@'%' IDENTIFIED BY '456789'; FLUSH PRIVILEGES;

GRANT ALL PRIVILEGES ON *.* TO sstuser@'localhost' IDENTIFIED BY '456789'; FLUSH PRIVILEGES;

Allow root to connect remotely:

GRANT ALL PRIVILEGES ON *.* TO root@'%' IDENTIFIED BY '123456'; FLUSH PRIVILEGES;

Create the account and password for the health check:

GRANT PROCESS ON *.* TO 'clustercheckuser'@'localhost' IDENTIFIED BY 'clustercheckpassword!';

If you use your own account and password, change the corresponding values in the script:

vim /usr/sbin/clustercheck

On the other nodes, 192.168.1.56 and 192.168.1.57, start MySQL directly:

service mysqld start

Restart the first node, 192.168.1.55:

service mysqld restart

If this reports an error, start the node with:

/etc/init.d/mysqld bootstrap-pxc

Once it has started, log in to MySQL:

mysql -u root -p123456

Check the number of nodes in the cluster:

mysql> show global status like 'wsrep_cluster_size';
+--------------------+-------+
| Variable_name      | Value |
+--------------------+-------+
| wsrep_cluster_size | 3     |
+--------------------+-------+
1 row in set (0.00 sec)

mysql> show global status like 'wsrep_incoming_addresses';
+--------------------------+-------------------------------------------------------+
| Variable_name            | Value                                                 |
+--------------------------+-------------------------------------------------------+
| wsrep_incoming_addresses | 192.168.1.57:3307,192.168.1.56:3307,192.168.1.55:3306 |
+--------------------------+-------------------------------------------------------+
1 row in set (0.00 sec)
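The cluster-size check can also be scripted. The sketch below assumes the status line arrives as a tab-separated name and value, which is the format the mysql client prints in batch mode with column names suppressed (mysql -N -B). The function name check_cluster_size is hypothetical, and the sample input stands in for real client output.

```shell
# check_cluster_size is a hypothetical helper. It reads one "name<TAB>value" line
# from stdin and succeeds only if the value equals the expected node count.
check_cluster_size() {
    local expected="$1" name value
    read -r name value
    [ "$name" = "wsrep_cluster_size" ] && [ "$value" = "$expected" ]
}

# Sample input standing in for real mysql output.
if printf 'wsrep_cluster_size\t3\n' | check_cluster_size 3; then
    echo "cluster has all 3 nodes"
else
    echo "cluster size mismatch"
fi
```

Against a live node, the input would come from something like mysql -N -B -u root -p -e "show global status like 'wsrep_cluster_size'" piped into the function.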

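Create test data and check that it is replicated:

mysql> create database test;
Query OK, 1 row affected (0.01 sec)

4.7 Start the database health check

systemctl start xinetd.service

Check that port 9200 is open:

netstat -anlp | grep 9200
tcp6 0 0 :::9200 :::* LISTEN 73323/xinetd

Test that the port responds correctly:

telnet 192.168.1.55 9200
Escape character is '^]'.
HTTP/1.1 200 OK
Content-Type: text/plain
Connection: close
Content-Length: 40

Percona XtraDB Cluster Node is synced.
Connection closed by foreign host.

If the check returns 503, first run /usr/sbin/clustercheck directly and see whether it returns 200. If it does not, check the database account and password that the script connects with. If it does return 200 when run directly, edit /etc/xinetd.d/mysqlchk and set the user to root:

vim /etc/xinetd.d/mysqlchk

user = root

Then restart xinetd:

systemctl restart xinetd.service

Test again. If the check now returns 200, everything is working; if it still fails, look in the system log for the cause.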
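The distinction between a 200 and a 503 response is what haproxy relies on later, so it can be useful to test it from a script. The following is a minimal sketch that extracts the status code from the first line of an HTTP response. The function names http_status and node_is_synced are hypothetical, and the sample responses stand in for real output from port 9200.

```shell
# http_status is a hypothetical helper: it reads an HTTP response on stdin and
# prints the status code from the first line ("HTTP/1.1 200 OK" gives 200).
http_status() {
    local proto code rest
    read -r proto code rest
    printf '%s\n' "$code"
}

# node_is_synced succeeds only for status 200, the code clustercheck returns
# for a synced node in the telnet output shown earlier.
node_is_synced() {
    [ "$(http_status)" = "200" ]
}

# Sample responses standing in for real output from port 9200.
printf 'HTTP/1.1 200 OK\r\nContent-Type: text/plain\r\n\r\n' | node_is_synced && echo "synced"
printf 'HTTP/1.1 503 Service Unavailable\r\n\r\n' | node_is_synced || echo "not synced"
```

With a running node, the response could be fetched with curl -si http://192.168.1.55:9200/ and piped into node_is_synced (curl is among the packages installed earlier).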

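VI. Compile and install haproxy (on 192.168.1.56 and 192.168.1.57)

Install logging support for haproxy:

yum -y install rsyslog

Unpack haproxy-1.7.0.tar.gz:

tar -xvf /usr/local/src/haproxy-1.7.0.tar.gz -C /usr/local/src/

cd /usr/local/src/haproxy-1.7.0

Build with the linux2628 target, which is the haproxy 1.7 build target for kernels 2.6.28 and later and therefore covers the 3.10 kernel in CentOS 7:

make TARGET=linux2628 PREFIX=/usr/local/haproxy

make install PREFIX=/usr/local/haproxy

Create the haproxy directories:

mkdir -pv /usr/local/haproxy/{conf,run,log}

Create the haproxy startup script:

vim /etc/init.d/haproxy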

#! /bin/bash

# chkconfig: - 85 15

# description: HAProxy is a TCP/HTTP load balancer.

PROGDIR=/usr/local/haproxy

PROGNAME=haproxy

DAEMON=$PROGDIR/sbin/$PROGNAME

CONFIG=$PROGDIR/conf/$PROGNAME.conf

PIDFILE=$PROGDIR/run/$PROGNAME.pid

DESC="HAProxy daemon"

SCRIPTNAME=/usr/local/haproxy/init.d/$PROGNAME

# Gracefully exit if the package has been removed.

test -x $DAEMON || exit 0

start()

{

echo -n "Starting $DESC: $PROGNAME"

$DAEMON -f $CONFIG

echo "."

}

stop()

{ echo -n "Stopping $DESC: $PROGNAME"

cat $PIDFILE | xargs kill

echo "."

}

reload()

{ echo -n "reloading $DESC: $PROGNAME"

$DAEMON -f $CONFIG -p $PIDFILE -sf $(cat $PIDFILE)

}

case "$1" in

start)

start

;;

stop)

stop

;;

restart)

stop

start

;;

reload)

reload

;;

*)

echo "Usage: $SCRIPTNAME {start|stop|restart|reload}" >&2

exit 1

;;

esac

exit 0

Make the script executable:

chmod +x /etc/init.d/haproxy

Create haproxy.conf:

vim /usr/local/haproxy/conf/haproxy.conf

global
    log 127.0.0.1 local0
    log 127.0.0.1 local1 notice
    #log loghost local0 info
    maxconn 50000
    chroot /usr/local/haproxy
    uid 99
    gid 99
    daemon
    nbproc 2
    pidfile /usr/local/haproxy/run/haproxy.pid
    #debug
    #quiet

defaults
    log global
    mode tcp
    option tcplog
    option dontlognull
    option forwardfor
    option redispatch
    retries 3
    timeout connect 3000
    timeout client 50000
    timeout server 50000

frontend admin_stat
    bind *:8888
    mode http
    default_backend stats-back

frontend pxc-front
    bind *:3306
    mode tcp
    default_backend pxc-back

frontend pxc-onenode-front
    bind *:3308
    mode tcp
    default_backend pxc-onenode

backend pxc-back
    mode tcp
    balance roundrobin
    option httpchk
    server node1 192.168.1.55:3306 check port 9200 inter 12000 rise 3 fall 3
    server node2 192.168.1.56:3307 check port 9200 inter 12000 rise 3 fall 3
    server node3 192.168.1.57:3307 check port 9200 inter 12000 rise 3 fall 3

backend pxc-onenode
    mode tcp
    balance roundrobin
    option httpchk
    server node1 192.168.1.55:3306 check port 9200 inter 12000 rise 3 fall 3
    server node2 192.168.1.56:3307 check port 9200 inter 12000 rise 3 fall 3 backup
    server node3 192.168.1.57:3307 check port 9200 inter 12000 rise 3 fall 3 backup

backend stats-back
    mode http
    balance roundrobin
    stats uri /admin?stats
    stats auth admin:admin
    stats realm Haproxy\ Statistics

Configure rsyslog logging for haproxy:

vim /etc/rsyslog.conf

Uncomment the following two lines, changing

#$ModLoad imudp
#$UDPServerRun 514

to

$ModLoad imudp
$UDPServerRun 514

Add the haproxy log path:

local0.* /usr/local/haproxy/log/haproxy.log

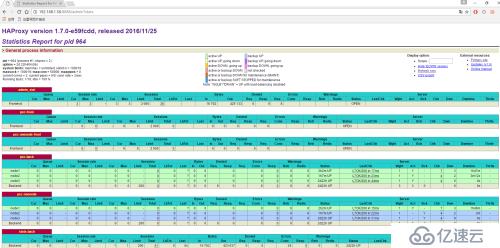

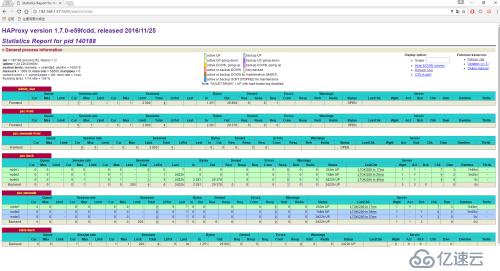

Restart rsyslog:

systemctl restart rsyslog.service

Start haproxy:

service haproxy start

Enable it at boot:

chkconfig haproxy on

Check that haproxy is running:

ps -ef | grep haproxy

The haproxy statistics page is served on port 8888; the account and password are admin:admin:

http://192.168.1.56:8888/admin?stats

http://192.168.1.57:8888/admin?stats

VII. Compile and install keepalived

Install automake-1.15:

tar -xvf /usr/local/src/automake-1.15.tar.gz -C /usr/local/src

cd /usr/local/src/automake-1.15

./configure && make && make install

Compile keepalived:

tar -xvf /usr/local/src/keepalived-1.3.2.tar.gz -C /usr/local/src

cd /usr/local/src/keepalived-1.3.2

./configure --prefix=/usr/local/keepalived

When building on a VMware virtual machine, edit ./lib/config.h and change HAVE_DECL_RTA_ENCAP 1 to HAVE_DECL_RTA_ENCAP 0; otherwise the build fails.

make && make install

Copy the keepalived executable to /usr/sbin/:

cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

Create the keepalived startup script:

vim /etc/rc.d/init.d/keepalived

#!/bin/sh

#

# Startup script for the Keepalived daemon

#

# processname: keepalived

# pidfile: /var/run/keepalived.pid

# config: /etc/keepalived/keepalived.conf

# chkconfig: - 21 79

# description: Start and stop Keepalived

# Source function library

. /etc/rc.d/init.d/functions

# Source configuration file (we set KEEPALIVED_OPTIONS there)

. /etc/sysconfig/keepalived

RETVAL=0

prog="keepalived"

start() {

echo -n $"Starting $prog: "

daemon keepalived ${KEEPALIVED_OPTIONS}

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/$prog

}

stop() {

echo -n $"Stopping $prog: "

killproc keepalived

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/$prog

}

reload() {

echo -n $"Reloading $prog: "

killproc keepalived -1

RETVAL=$?

echo

}

# See how we were called.

case "$1" in

start)

start

;;

stop)

stop

;;

reload)

reload

;;

restart)

stop

start

;;

condrestart)

if [ -f /var/lock/subsys/$prog ]; then

stop

start

fi

;;

status)

status keepalived

RETVAL=$?

;;

*)

echo "Usage: $0 {start|stop|reload|restart|condrestart|status}"

RETVAL=1

esac

exit $RETVAL

Make the script executable:

chmod +x /etc/rc.d/init.d/keepalived

Configure keepalived

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

Create the /etc/keepalived directory:

mkdir -p /etc/keepalived

Create /etc/keepalived/keepalived.conf (on 192.168.1.56)

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    notification_email {
        admin@test.com    # address that receives failure notifications
    }
    notification_email_from admin@test.com
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}
vrrp_script chk_haproxy {    # custom monitoring script
    script "/etc/keepalived/chk_haproxy.sh"
    interval 2
    weight 2
}
vrrp_instance VI_1 {
    state BACKUP    # both nodes are BACKUP so the VIP is not taken back
    nopreempt
    interface eth0    # interface to listen on
    virtual_router_id 51
    priority 100    # priority; the other node must use a lower value
    advert_int 1    # advertisement interval
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {    # custom monitoring script
        chk_haproxy
    }
    virtual_ipaddress {    # VIP address; more than one can be listed
        192.168.4.58/21
    }
    notify_backup "/etc/init.d/haproxy restart"
    notify_fault "/etc/init.d/haproxy stop"
}

Create /etc/keepalived/keepalived.conf (on 192.168.1.57)

! Configuration File for keepalived
global_defs {
    notification_email {
        admin@test.com    # address that receives failure notifications
    }
    notification_email_from admin@test.com
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
}
vrrp_script chk_haproxy {    # custom monitoring script
    script "/etc/keepalived/chk_haproxy.sh"
    interval 2
    weight 2
}
vrrp_instance VI_1 {
    state BACKUP    # both nodes are BACKUP so the VIP is not taken back
    nopreempt
    interface eth0    # interface to listen on
    virtual_router_id 51
    priority 99    # priority; lower than the 100 set on 192.168.1.56
    advert_int 1    # advertisement interval
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {    # custom monitoring script
        chk_haproxy
    }
    virtual_ipaddress {    # VIP address; more than one can be listed
        192.168.4.58/21
    }
    notify_backup "/etc/init.d/haproxy restart"
    notify_fault "/etc/init.d/haproxy stop"
}

Create /etc/keepalived/chk_haproxy.sh

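vim /etc/keepalived/chk_haproxy.sh

#!/bin/bash
# If haproxy is not running, try to start it.
if [ $(ps -C haproxy --no-header | wc -l) -eq 0 ]; then
    /etc/init.d/haproxy start
fi
sleep 2
# If haproxy is still not running, stop keepalived so the VIP moves to the other node.
if [ $(ps -C haproxy --no-header | wc -l) -eq 0 ]; then
    /etc/init.d/keepalived stop
fi

Make the script executable:

chmod +x /etc/keepalived/chk_haproxy.sh

Start keepalived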

On 192.168.1.56:

service keepalived start

ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:1b:50:a3 brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.56/21 brd 192.168.7.255 scope global dynamic eth0
       valid_lft 44444sec preferred_lft 44444sec
    inet 192.168.4.58/21 scope global secondary eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe1b:50a3/64 scope link
       valid_lft forever preferred_lft forever

On 192.168.1.57:

service keepalived start

ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:63:ae:85 brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.57/21 brd 192.168.7.255 scope global dynamic eth0
       valid_lft 58142sec preferred_lft 58142sec
    inet6 fe80::20c:29ff:fe63:ae85/64 scope link
       valid_lft forever preferred_lft forever

Stop the keepalived process on 192.168.1.56:

service keepalived stop

[root@56~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:1b:50:a3 brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.56/21 brd 192.168.7.255 scope global dynamic eth0
       valid_lft 44258sec preferred_lft 44258sec
    inet6 fe80::20c:29ff:fe1b:50a3/64 scope link
       valid_lft forever preferred_lft forever

Check 192.168.1.57, which now holds the VIP:

[root@57~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:63:ae:85 brd ff:ff:ff:ff:ff:ff
    inet 192.168.1.57/21 brd 192.168.7.255 scope global dynamic eth0
       valid_lft 57869sec preferred_lft 57869sec
    inet 192.168.4.58/21 scope global secondary eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe63:ae85/64 scope link
       valid_lft forever preferred_lft forever

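Other notes

Both keepalived instances are configured as BACKUP, so when the first node recovers from a failure it does not take the VIP back from the node that is currently serving it, and the service is not interrupted.

haproxy exposes two ports. Port 3306 balances connections across all three servers. Port 3308 sends traffic to a single server and switches to the second only when the first goes down.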
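The behaviour of port 3308 follows from the backup keyword in the pxc-onenode backend: haproxy uses backup servers only when no non-backup server is healthy, and by default only the first healthy backup. The sketch below is a toy model of that selection rule, not haproxy code. The function name pick_onenode and the name:role:state argument format are made up for illustration.

```shell
# pick_onenode is a hypothetical model of the pxc-onenode backend, not haproxy code.
# Arguments are "name:role:state" triples in configuration order, where role is
# "active" or "backup" and state is "up" or "down". It prints the server that
# would receive connections: a healthy active server if there is one, otherwise
# the first healthy backup.
pick_onenode() {
    local entry name rest role state first_backup=""
    for entry in "$@"; do
        name="${entry%%:*}"
        rest="${entry#*:}"
        role="${rest%%:*}"
        state="${rest#*:}"
        [ "$state" = "up" ] || continue
        if [ "$role" = "active" ]; then
            echo "$name"
            return 0
        fi
        [ -n "$first_backup" ] || first_backup="$name"
    done
    [ -n "$first_backup" ] && echo "$first_backup"
}

pick_onenode node1:active:up   node2:backup:up node3:backup:up   # prints node1
pick_onenode node1:active:down node2:backup:up node3:backup:up   # prints node2
```

Sending all writes through port 3308 therefore keeps them on one node at a time, while port 3306 spreads connections over the whole cluster.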
