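This article shows how to build a lookup against China's national list of dishonest judgment debtors (失信被执行人名单) with a Python crawler. It works through a practical example, and the approach is simple and quick to apply.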

I. Requirements

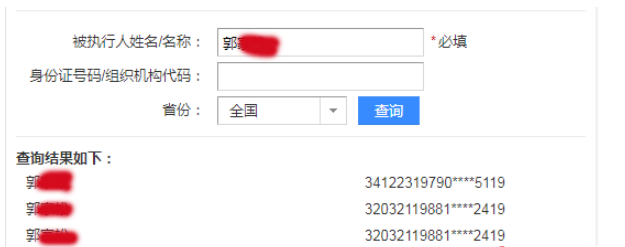

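The goal is to use Baidu's interface to query the national list of dishonest judgment debtors: enter a name and check whether it appears on the list.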
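As a rough illustration of the request behind this lookup, the query can be written as a set of URL parameters. The Python 3 sketch below assumes the endpoint and parameter names that appear in this article's script (`resource_id=6899`, `iname`, `pn`, `rn`); the helper name `build_query_url` is hypothetical, and whether the service still answers in this form is not guaranteed.

```python
from urllib.parse import urlencode

# Endpoint and parameter names are taken from the article's script; whether
# the service still responds in this form is an assumption.
BASE_URL = "https://sp0.baidu.com/8aQDcjqpAAV3otqbppnN2DJv/api.php"


def build_query_url(name, page=0, page_size=10):
    """Return the lookup URL for one page of results (hypothetical helper)."""
    params = {
        "resource_id": "6899",
        "query": "失信被执行人名单",  # urlencode percent-encodes this as UTF-8
        "cardNum": "",
        "iname": name,
        "areaName": "",
        "pn": page * page_size,  # offset of the first record on the page
        "rn": page_size,         # number of records per page
        "ie": "utf-8",
        "oe": "utf-8",
        "format": "json",
    }
    return BASE_URL + "?" + urlencode(params)


if __name__ == "__main__":
    print(build_query_url("郭**", page=2))
```

Building the query with `urlencode` avoids hand-concatenating the string and takes care of percent-encoding non-ASCII names.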

II. Python implementation

Version 1:

    # -*- coding: utf-8 -*-
    # Python 2.7 script: look up a name in the list of dishonest judgment debtors.
    import sys
    import time
    import json

    import requests
    import pandas as pd

    # Python 2 workaround so implicit str/unicode conversions use UTF-8.
    reload(sys)
    sys.setdefaultencoding('utf-8')

    time1 = time.time()

    iname = []  # names returned by the interface
    icard = []  # masked ID card numbers returned by the interface


    def person_executed(name):
        # Request 30 pages of 10 records each.
        for i in range(0, 30):
            try:
                url = ("https://sp0.baidu.com/8aQDcjqpAAV3otqbppnN2DJv/api.php?resource_id=6899"
                       "&query=%E5%A4%B1%E4%BF%A1%E8%A2%AB%E6%89%A7%E8%A1%8C%E4%BA%BA%E5%90%8D%E5%8D%95"
                       "&cardNum=&"
                       "iname=" + str(name) +
                       "&areaName="
                       "&pn=" + str(i * 10) +
                       "&rn=10"
                       "&ie=utf-8&oe=utf-8&format=json")
                html = requests.get(url).content
                html_json = json.loads(html)
                html_data = html_json['data']
                for block in html_data:
                    for record in block['result']:
                        print record['iname'], record['cardNum']
                        iname.append(record['iname'])
                        icard.append(record['cardNum'])
            except Exception:
                # Skip pages that fail to download or do not have the expected
                # structure (the original used a bare except here).
                continue


    if __name__ == '__main__':
        name = "郭**"
        person_executed(name)
        print len(iname)

        # Organize the results into a DataFrame.
        data = pd.DataFrame({"name": iname, "IDCard": icard})

        # Drop duplicate rows.
        data1 = data.drop_duplicates()
        print data1
        print len(data1)

        # Write the data to an Excel file.
        data1.to_excel("F:\\iname_icard_query.xlsx", header=True, encoding='gbk', index=False)

        time2 = time.time()
        print u'ok,爬虫结束!'
        print u'总共耗时:' + str(time2 - time1) + 's'

III. Results

"D:\Program Files\Python27\python.exe" D:/PycharmProjects/learn2017/全国失信被执行人查询.py

    郭** 34122319790****5119
    郭** 32032119881****2419
    郭** 32032119881****2419
    3
                    IDCard name
    0  34122319790****5119  郭**
    1  32032119881****2419  郭**
    2
    ok,爬虫结束!
    总共耗时:7.72000002861s

    Process finished with exit code 0
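The counts in this run (3 records collected, 2 left after deduplication) come from flattening the nested response and then dropping repeated rows. The Python 3 sketch below reproduces that step without pandas. The payload is a hypothetical sample assembled from the masked values printed above, shaped like the `data` -> `result` structure the script reads; the function names are illustrative only.

```python
import json

# Hypothetical payload shaped like the response the article's script reads:
# a "data" list whose entries each hold a "result" list of records.
SAMPLE_RESPONSE = json.dumps({
    "data": [{
        "result": [
            {"iname": "郭**", "cardNum": "34122319790****5119"},
            {"iname": "郭**", "cardNum": "32032119881****2419"},
            {"iname": "郭**", "cardNum": "32032119881****2419"},
        ]
    }]
})


def extract_records(raw_json):
    """Flatten data -> result -> record into (name, card number) tuples."""
    payload = json.loads(raw_json)
    records = []
    for block in payload.get("data", []):
        for item in block.get("result", []):
            records.append((item["iname"], item["cardNum"]))
    return records


def drop_duplicate_records(records):
    """Remove repeated tuples while keeping first-seen order."""
    seen = set()
    unique = []
    for record in records:
        if record not in seen:
            seen.add(record)
            unique.append(record)
    return unique


if __name__ == "__main__":
    rows = extract_records(SAMPLE_RESPONSE)
    unique_rows = drop_duplicate_records(rows)
    print(len(rows), len(unique_rows))
```

Keeping the first occurrence of each record matches the default behavior of `drop_duplicates` in the script, which is why the surviving rows keep index 0 and 1.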

Version 2:

    # -*- coding: utf-8 -*-
    # Python 2.7 script: same lookup as version 1, but keeps every field of each record.
    import sys
    import time
    import json

    import requests
    import pandas as pd

    # Python 2 workaround so implicit str/unicode conversions use UTF-8.
    reload(sys)
    sys.setdefaultencoding('utf-8')

    time1 = time.time()

    # One list per field returned by the interface.
    iname = []
    icard = []
    courtName = []
    areaName = []
    caseCode = []
    duty = []
    performance = []
    disruptTypeName = []
    publishDate = []


    def person_executed(name):
        # Request 30 pages of 10 records each.
        for i in range(0, 30):
            try:
                url = ("https://sp0.baidu.com/8aQDcjqpAAV3otqbppnN2DJv/api.php?resource_id=6899"
                       "&query=%E5%A4%B1%E4%BF%A1%E8%A2%AB%E6%89%A7%E8%A1%8C%E4%BA%BA%E5%90%8D%E5%8D%95"
                       "&cardNum=&"
                       "iname=" + str(name) +
                       "&areaName="
                       "&pn=" + str(i * 10) +
                       "&rn=10"
                       "&ie=utf-8&oe=utf-8&format=json")
                html = requests.get(url).content
                html_json = json.loads(html)
                html_data = html_json['data']
                for block in html_data:
                    for record in block['result']:
                        print record['iname'], record['cardNum'], record['courtName'], \
                            record['areaName'], record['caseCode'], record['duty'], \
                            record['performance'], record['disruptTypeName'], record['publishDate']
                        iname.append(record['iname'])
                        icard.append(record['cardNum'])
                        courtName.append(record['courtName'])
                        areaName.append(record['areaName'])
                        caseCode.append(record['caseCode'])
                        duty.append(record['duty'])
                        performance.append(record['performance'])
                        disruptTypeName.append(record['disruptTypeName'])
                        publishDate.append(record['publishDate'])
            except Exception:
                # Skip pages that fail to download or do not have the expected
                # structure (the original used a bare except here).
                continue


    if __name__ == '__main__':
        name = "郭**"
        person_executed(name)
        print len(iname)

        # Organize the results into a DataFrame.
        detail_data = pd.DataFrame({
            "name": iname, "IDCard": icard, "courtName": courtName,
            "areaName": areaName, "caseCode": caseCode, "duty": duty,
            "performance": performance, "disruptTypeName": disruptTypeName,
            "publishDate": publishDate,
        })

        # Drop duplicate rows.
        detail_data1 = detail_data.drop_duplicates()

        # Write the data to an Excel file.
        detail_data1.to_excel("F:\\iname_icard_query.xlsx", header=True, encoding='gbk', index=False)

        time2 = time.time()
        print u'ok,爬虫结束!'
        print u'总共耗时:' + str(time2 - time1) + 's'

That concludes this walkthrough of implementing a lookup against the national list of dishonest judgment debtors with a Python crawler. Thanks for reading.