您好,登录后才能下订单哦!

下载地址

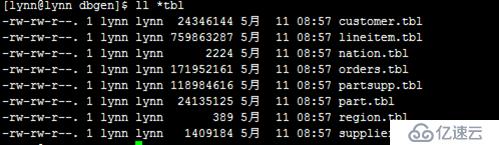

/opt/tpc-h-v2.17.0/dbgen

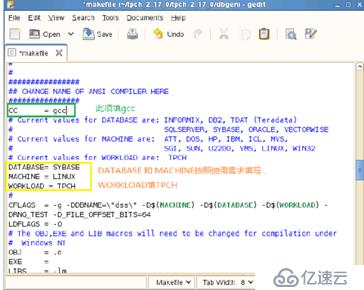

cp makefile.suite makefile修改makefile

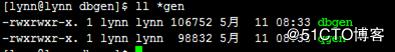

make -f makefile

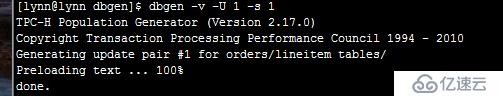

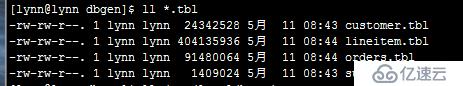

dbgen -v -U 1 -s 1

./dbgen

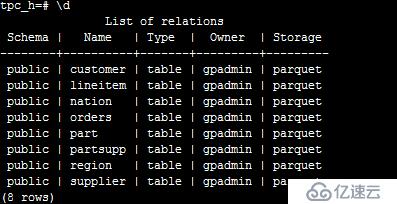

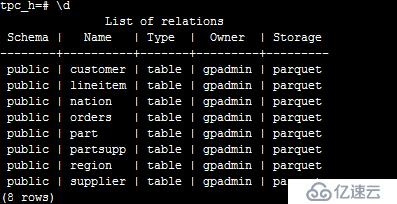

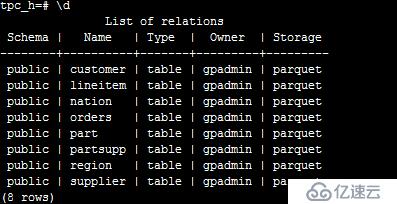

--create database tpch;

\c tpch;

--1. region

drop table if exists region;

create table region(

r_regionkey integer,

r_name char(25),

r_comment varchar(152),

r_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by(r_regionkey);

--2. nation

drop table if exists nation;

create table nation(

n_nationkey integer,

n_name char(25),

n_regionkey integer,

n_comment varchar(152),

n_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by(n_nationkey);

--3.part

drop table if exists part;

create table part(

p_partkey bigint,

p_name varchar(55),

p_mfgr char(25),

p_brand char(10),

p_type varchar(25),

p_size integer,

p_container varchar(10),

p_retailprice decimal,

p_comment varchar(117),

p_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by (p_partkey);

--4.supplier

drop table if exists supplier;

create table supplier(

s_suppkey bigint,

s_name char(25),

s_address varchar(40),

s_nationkey int,

s_phone char(15),

s_acctbal decimal,

s_comment varchar(101),

r_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by (s_suppkey);

--5.partsupp

drop table if exists partsupp;

create table partsupp(

ps_partkey bigint,

ps_suppkey bigint,

ps_availqty integer,

ps_supplycost decimal,

ps_comment varchar(199),

ps_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by(ps_partkey);

#6.customer

drop table if exists customer;

create table customer(

c_custkey bigint,

c_name char(25),

c_address char(40),

c_nationkey integer,

c_phone char(15),

c_acctbal decimal,

c_mktsegment char(10),

c_comment varchar(117),

c_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by (c_custkey);

--7.orders

drop table if exists orders;

create table orders(

o_orderkey bigint,

o_custkey bigint,

o_orderstatus char(1),

o_totalprice decimal,

o_orderdate date,

o_orderpriority char(15),

o_clerk char(15),

o_shippriority integer,

o_comment varchar(117),

o_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

distributed by (o_orderkey );

--8.lineitem

drop table if exists lineitem;

create table lineitem(

l_orderkey bigint,

l_partkey bigint,

l_suppkey bigint,

l_linenumber integer,

l_quantity decimal,

l_extendedprice decimal,

l_discount decimal,

l_tax decimal,

l_returnflag char(1),

l_linestatus char(1),

l_shipdate date,

l_commitdate date,

l_receiptdate date,

l_shipinstruct char(25),

l_shipmode char(10),

l_comment varchar(117),

l_extra char(1)

)with(appendonly=true,orientation=parquet,compresstype=snappy)

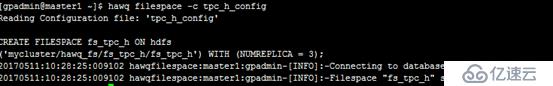

distributed by (l_linenumber);$hawq filespace -o tpc_h_config文件内容如下:

filespace:fs_tpc_h

fsreplica:3

dfs_url::mycluster/hawq_fs/fs_tpc_h$hdfs dfs -mkdir /hawq_fs

$hdfs dfs -chown gpadmin:gpadmin /hawq_fs

$hdfs dfs -ls /

$hawq filespace -c tpc_h_config

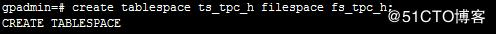

create tablespace ts_tpc_h filespace fs_tpc_h;

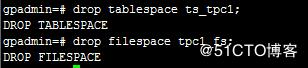

表空间拥有者可以删除,且不能有其他数据对象(如数据库,数据表)使用当前表空间,不能有表空间使用当前文件空间,才可以删除。

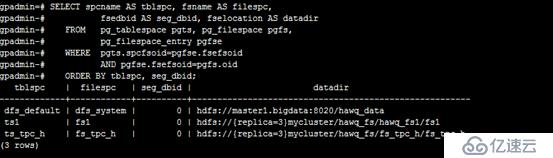

SELECT spcname AS tblspc, fsname AS filespc,

fsedbid AS seg_dbid, fselocation AS datadir

FROM pg_tablespace pgts, pg_filespace pgfs,

pg_filespace_entry pgfse

WHERE pgts.spcfsoid=pgfse.fsefsoid

AND pgfse.fsefsoid=pgfs.oid

ORDER BY tblspc, seg_dbid;

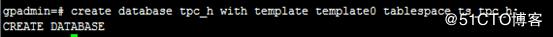

create database tpc_h with template template0 tablespace ts_tpc_h;

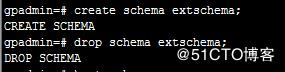

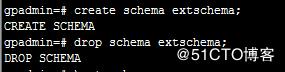

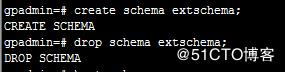

\c tpc_h;create schema extschema;

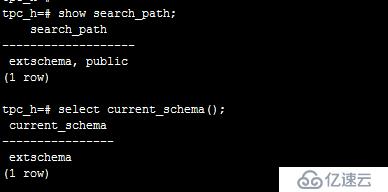

select current_schema();

show search_path;

create external table extschema.region(

r_regionkey integer,

r_name char(25),

r_comment varchar(152),

r_extra char(1)

)location('gpfdist://10.110.17.104:8081/region.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

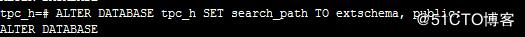

ALTER DATABASE tpc_h SET search_path TO extschema, public;

退出,重启进入psql客户端

执行第一章中的脚本。

drop view if exists v_order_customer ;

create view v_order_customer

as select o.o_orderkey, o.o_custkey, c.c_name,o.o_orderdate

from orders o join customer c on o.o_custkey=c.c_custkey

where o.o_orderdate>'1997-01-01';

select * from v_order_customer limit 10;

select * from v_order_customer where o_orderdate<='1997-01-01';

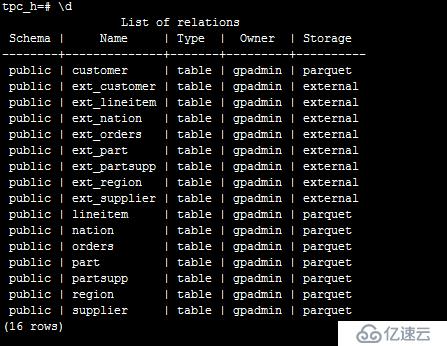

yum install -y libevent libyaml aprgpfdist -d /tmp/data -p 8081 -l ~/log &--1. region

drop external table if exists ext_region;

create external table ext_region(

r_regionkey integer,

r_name char(25),

r_comment varchar(152),

r_extra char(1)

)location('gpfdist://10.110.17.104:8081/region.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--2. nation

drop external table if exists ext_nation;

create external table ext_nation(

n_nationkey integer,

n_name char(25),

n_regionkey integer,

n_comment varchar(152),

n_extra char(1)

)location('gpfdist://10.110.17.104:8081/nation.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--3.part

drop external table if exists ext_part;

create external table ext_part(

p_partkey bigint,

p_name varchar(55),

p_mfgr char(25),

p_brand char(10),

p_type varchar(25),

p_size integer,

p_container varchar(10),

p_retailprice decimal,

p_comment varchar(117),

p_extra char(1)

)location('gpfdist://10.110.17.104:8081/part.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--4.supplier

drop external table if exists ext_supplier;

create external table ext_supplier(

s_suppkey bigint,

s_name char(25),

s_address varchar(40),

s_nationkey int,

s_phone char(15),

s_acctbal decimal,

s_comment varchar(101),

s_extra char(1)

)location('gpfdist://10.110.17.104:8081/supplier.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--5.partsupp

drop external table if exists ext_partsupp;

create external table ext_partsupp(

ps_partkey bigint,

ps_suppkey bigint,

ps_availqty integer,

ps_supplycost decimal,

ps_comment varchar(199),

ps_extra char(1)

)location('gpfdist://10.110.17.104:8081/partsupp.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

#6.customer

drop external table if exists ext_customer;

create external table ext_customer(

c_custkey bigint,

c_name char(25),

c_address char(40),

c_nationkey integer,

c_phone char(15),

c_acctbal decimal,

c_mktsegment char(10),

c_comment varchar(117),

c_extra char(1)

)location('gpfdist://10.110.17.104:8081/customer.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--7.orders

drop external table if exists ext_orders;

create external table ext_orders(

o_orderkey bigint,

o_custkey bigint,

o_orderstatus char(1),

o_totalprice decimal,

o_orderdate date,

o_orderpriority char(15),

o_clerk char(15),

o_shippriority integer,

o_comment varchar(117),

o_extra char(1)

)location('gpfdist://10.110.17.104:8081/orders.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

--8.lineitem

drop external table if exists ext_lineitem;

create external table ext_lineitem(

l_orderkey bigint,

l_partkey bigint,

l_suppkey bigint,

l_linenumber integer,

l_quantity decimal,

l_extendedprice decimal,

l_discount decimal,

l_tax decimal,

l_returnflag char(1),

l_linestatus char(1),

l_shipdate date,

l_commitdate date,

l_receiptdate date,

l_shipinstruct char(25),

l_shipmode char(10),

l_comment varchar(117),

l_extra char(1)

)location('gpfdist://10.110.17.104:8081/lineitem.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8';

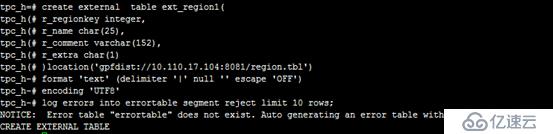

handling errors:

drop external table if exists ext_region1;

create external table ext_region1(

r_regionkey integer,

r_name char(25),

r_comment varchar(152),

r_extra char(1)

)location('gpfdist://10.110.17.104:8081/region.tbl')

format 'text' (delimiter '|' null '' escape 'OFF')

encoding 'UTF8'

log errors into errortable segment reject limit 10 rows;

测试是否可以正常读取数据:

select * from ext_region limit 10;

select * from ext_nation limit 10;

select * from ext_part limit 10;

select * from ext_supplier limit 10;

select * from ext_partsupp limit 10;

select * from ext_customer limit 10;

select * from ext_orders limit 10;

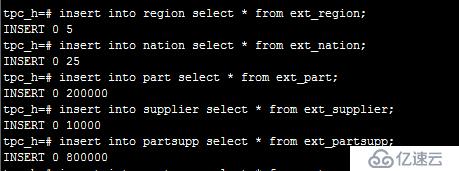

select * from ext_lineitem limit 10;insert into region select * from ext_region;

insert into nation select * from ext_nation;

insert into part select * from ext_part;

insert into supplier select * from ext_supplier;

insert into partsupp select * from ext_partsupp;

insert into customer select * from ext_customer;

insert into orders select * from ext_orders;

insert into lineitem select * from ext_lineitem;

测试是否可以正常读取数据:

select * from region limit 10;

select * from nation limit 10;

select * from part limit 10;

select * from supplier limit 10;

select * from partsupp limit 10;

select * from customer limit 10;

select * from orders limit 10;

select * from lineitem limit 10;copy region from '/tmp/data/region.tbl'

with delimiter '|';

--log errors into errtable segment reject limit 10 rows;在文件末尾增加如下内容:

source /usr/local/hawq/greenplum_path.shsudo yum install -y libevent libyaml aprcreate table audit(cmd varchar(10), t timestamp);gpfdist -p 8081 -d /tmp/data -l ~/log &---

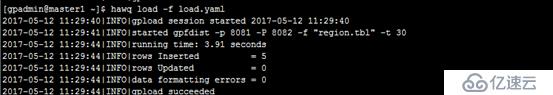

VERSION: 1.0.0.1

DATABASE: tpc_h

USER: gpadmin

HOST: master1

PORT: 5432

GPLOAD:

INPUT:

- SOURCE:

LOCAL_HOSTNAME:

- worker1

PORT: 8081

FILE:

- region.tbl

- COLUMNS:

- r_regionkey: integer

- r_name: text

- r_comment: text

- r_extra: text

- FORMAT: text

- DELIMITER: '|'

- ERROR_LIMIT: 25

- ERROR_TABLE: public.errortable

OUTPUT:

- TABLE: public.region

- MODE: INSERT

SQL:

- BEFORE: "INSERT INTO audit VALUES('start', current_timestamp)"

- AFTER: "INSERT INTO audit VALUES('end', current_timestamp)"hawq load -f load.yaml

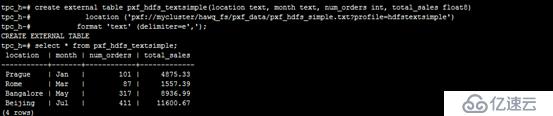

drop external table if exists pxf_hdfs_textsimple;

create external table pxf_hdfs_textsimple(location text, month text, num_orders int, total_sales float8)

location ('pxf://mycluster/hawq_fs/pxf_data/pxf_hdfs_simple.txt?profile=hdfstextsimple')

format 'text' (delimiter=e',');//namenode单点时:master1.bigdata是指namenode节点,端口是pxf的端口地址

create external table pxf_hdfs_textsimple(location text, month text, num_orders int, total_sales float8)

location ('pxf://master1.bigdata:51200/hawq_fs/pxf_data/pxf_hdfs_simple.txt?profile=hdfstextsimple')

format 'text' (delimiter=e',');自定义空值:

drop external table if exists h.pxf_inventory;

create external table h.pxf_inventory

(

inv_date_sk integer,

inv_item_sk integer,

inv_warehouse_sk integer,

inv_quantity_on_hand integer,

inv_null varchar(10)

)location ('pxf://master1.bigdata:51200/tpcdsdata/30T/inventory/data-m-*?profile=hdfstextsimple')

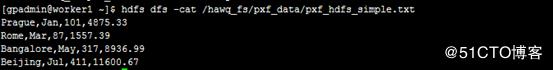

format 'TEXT' (DELIMITER '|' NULL '');echo 'Prague,Jan,101,4875.33

Rome,Mar,87,1557.39

Bangalore,May,317,8936.99

Beijing,Jul,411,11600.67' > /tmp/pxf_hdfs_simple.txthdfs dfs -put /tmp/pxf_hdfs_simple.txt /hawq_fs/pxf_data/

hdfs dfs -cat /hawq_fs/pxf_data/pxf_hdfs_simple.txt

select * from pxf_hdfs_textsimple;

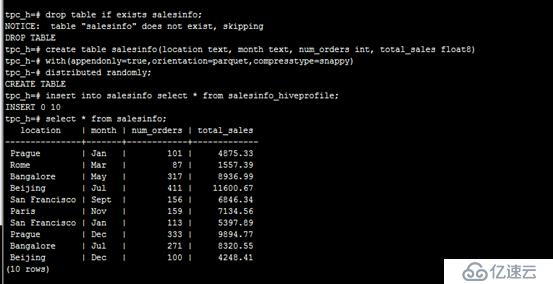

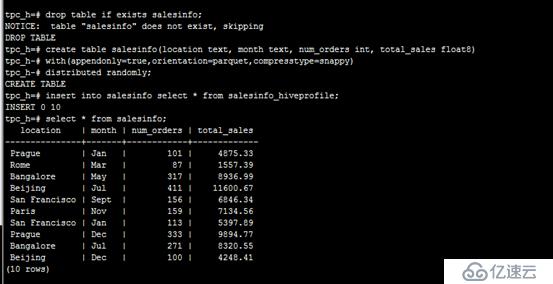

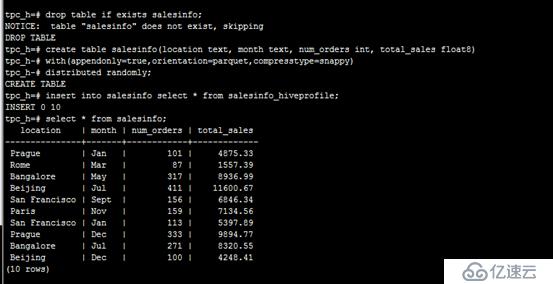

drop table if exists salesinfo;

create table salesinfo(location text, month text, num_orders int, total_sales float8)

with(appendonly=true,orientation=parquet,compresstype=snappy)

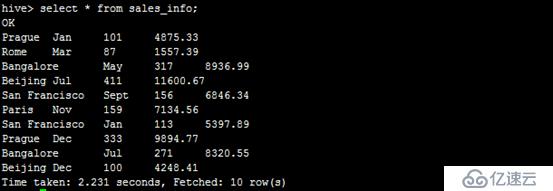

distributed randomly;/usr/hdp/current/hive-client/bin

./hivedrop table if exists sales_info;

create table sales_info (location string, month string,

number_of_orders int, total_sales double)

row format delimited fields terminated by ','

stored as textfile;Prague,Jan,101,4875.33

Rome,Mar,87,1557.39

Bangalore,May,317,8936.99

Beijing,Jul,411,11600.67

San Francisco,Sept,156,6846.34

Paris,Nov,159,7134.56

San Francisco,Jan,113,5397.89

Prague,Dec,333,9894.77

Bangalore,Jul,271,8320.55

Beijing,Dec,100,4248.41load data local inpath '/tmp/pxf_hive_datafile.txt'

into table sales_info;

psql

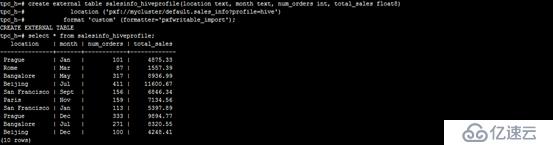

drop table if exists salesinfo_hiveprofile;

create external table salesinfo_hiveprofile(location text, month text, num_orders int, total_sales float8)

location ('pxf://mycluster/default.sales_info?profile=hive')

format 'custom' (formatter='pxfwritable_import');

insert into salesinfo select * from salesinfo_hiveprofile;

这种方式需要修改pxf-profiles.xml中关于Hive的内容,增加outputformat对象

HiveText:

<outputformat>org.apache.hawq.pxf.service.io.Text</outputformat>HiveORC:

<outputformat>org.apache.hawq.pxf.service.io.GPDBWritable</outputformat>HiveRC:

<outputformat>org.apache.hawq.pxf.service.io.Text</outputformat>Hive

<outputformat>org.apache.hawq.pxf.service.io.GPDBWritable</outputformat>格式:

SELECT * FROM hcatalog.hive-db-name.hive-table-name;如:

SELECT * FROM hcatalog.default.sales_info;

导入数据:

insert into salesinfo SELECT * FROM hcatalog.default.sales_info;

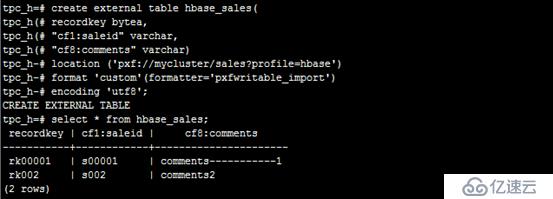

drop EXTERNAL table if exists hbase_sales;

create external table hbase_sales(

recordkey bytea,

"cf1:saleid" varchar,

"cf8:comments" varchar)

location ('pxf://mycluster/sales?profile=hbase')

format 'custom'(formatter='pxfwritable_import')

encoding 'utf8';cd /usr/hdp/current/hbase-client/bin

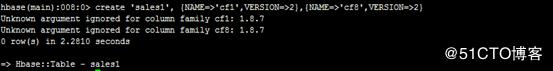

./hbase shell创建表格

create 'sales', {NAME=>'cf1',VERSION=>2},{NAME=>'cf8',VERSION=>2}

#create 'pxf_hbase_region', {NAME=>'cf1',VERSION=>2}

写入数据

put 'sales','rk001','cf1:saleid', 's001'

put 'sales','rk001','cf8:comments', 'comments1'select * from hbase_sales;

create 'pxflookup', {NAME=>'mapping',VERSION=>2}

put 'pxflookup', 'sales', 'mapping:id', 'cf1:saleid'

put 'pxflookup', 'sales', 'mapping:cmts', 'cf8:comments'

drop EXTERNAL table if exists pxf_hbase_sales ;

CREATE EXTERNAL TABLE pxf_hbase_sales (

recordkey bytea,

id varchar,

cmts varchar

)location ('pxf://mycluster/sales?profile=hbase')

format 'custom'(formatter='pxfwritable_import')

encoding 'utf8';

select * from pxf_hbase_sales;grant all privileges on *.* to "pxf"@"%" identified by 'test';

mysql> use test;

mysql> create table myclass(

id int(4) not null primary key,

name varchar(20) not null,

gender int(4) not null default '0',

degree double(16,2));insert into myclass values(1,"tom",1,90);

insert into myclass values(2,'john',0,94);

insert into myclass values(3,'simon',1,79);/etc/pxf/conf/pxf-public.classpath添加/usr/lib/pxf/mysql-connector-java-*.jar

psql:

gpadmin=#

drop external table if exists jdbc_myclass;

CREATE EXTERNAL TABLE jdbc_myclass(id integer,

name text,

gender integer,

degree float8)

LOCATION ('pxf://localhost:51200/test.myclass'

'?PROFILE=JDBC'

'&JDBC_DRIVER=com.mysql.jdbc.Driver'

'&DB_URL=jdbc:mysql://10.110.22.191:3306/test&USER=pxf&PASS=test'

)

FORMAT 'CUSTOM' (Formatter='pxfwritable_import');

select * from jdbc_myclass;slaes_info:

create table sales_info (location varchar(200), month varchar(10),

number_of_orders int, total_sales double);

insert into sales_info values("Prague","Jan",101,4875.33),

("Rome","Mar",87,1557.39),

("Bangalore","May",317,8936.99),

("Beijing","Jul",411,11600.67),

("San Francisco","Sept",156,6846.34),

("Paris","Nov",159,7134.56),

("San Francisco","Jan",113,5397.89),

("Prague","Dec",333,9894.77),

("Bangalore","Jul",271,8320.55),

("Beijing","Dec",100,4248.41);psql:

drop external table if exists jdbc_sales_info ;

CREATE EXTERNAL TABLE jdbc_sales_info (location varchar(200), month varchar(10),

number_of_orders integer, total_sales float8)

LOCATION ('pxf://localhost:51200/test.sales_info'

'?PROFILE=JDBC'

'&JDBC_DRIVER=com.mysql.jdbc.Driver'

'&DB_URL=jdbc:mysql://10.110.22.191:3306/test&USER=pxf&PASS=test'

)

FORMAT 'CUSTOM' (Formatter='pxfwritable_import');

CREATE EXTERNAL TABLE sales(id integer,

cdate date,

amt float8,

grade text)

LOCATION ('pxf://localhost:51200/sales'

'?PROFILE=JDBC'

'&JDBC_DRIVER=com.mysql.jdbc.Driver'

'&DB_URL=jdbc:mysql://192.168.200.6:3306/demodb&USER=root&PASS=root'

'&PARTITION_BY=cdate:date&RANGE=2008-01-01:2010-01-01&INTERVAL=1:year'

)

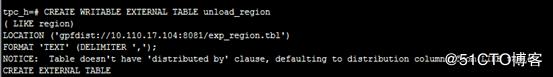

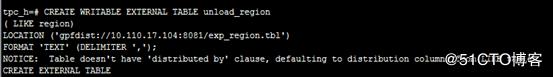

FORMAT 'CUSTOM' (Formatter='pxfwritable_import');create writable external table unload_region

(like region)

location ('gpfdist://10.110.17.104:8081/exp_region.tbl')

format 'text' (delimiter ',');

--只可写,不可读

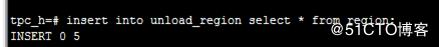

insert into unload_region select * from region;

cat exp_region.tbl

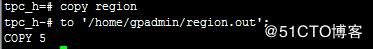

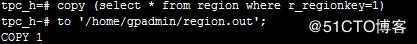

方法二 使用copy卸载数据

copy region

to '/home/gpadmin/region.out';

copy (select * from region where r_regionkey=1)

to '/home/gpadmin/region.out';

create writable external table pxf_hdfs_writabletbl_1(location text, month text, num_orders int, total_sales float8)

location ('pxf://mycluster/hawq_fs/pxf_data/pxfwritable_hdfs_textsimple1?profile=hdfstextsimple')

format 'text' (delimiter=e',');insert into pxf_hdfs_writabletbl_1 values ( 'frankfurt', 'mar', 777, 3956.98 );

insert into pxf_hdfs_writabletbl_1 values ( 'cleveland', 'oct', 3812, 96645.37 );

3.只可写不可读

免责声明:本站发布的内容(图片、视频和文字)以原创、转载和分享为主,文章观点不代表本网站立场,如果涉及侵权请联系站长邮箱:is@yisu.com进行举报,并提供相关证据,一经查实,将立刻删除涉嫌侵权内容。