
Analyzing the start-all.sh script

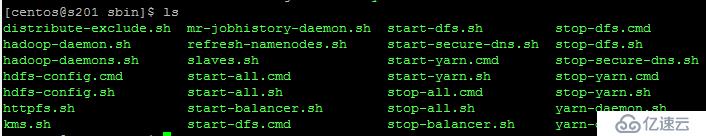

1) First, change into the /soft/Hadoop/sbin directory

2) Open the script: nano start-all.sh

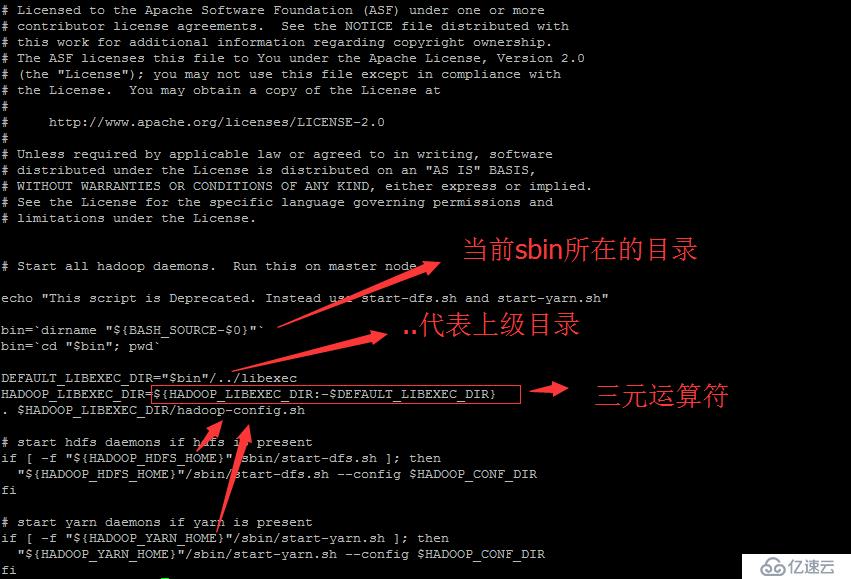

What sbin/start-all.sh mainly does:

1) libexec/hadoop-config.sh

2) start-dfs.sh

3) start-yarn.sh
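The three steps above can be sketched as a dry run that only prints what would happen. This is a simplified illustration, not the script's actual text: `plan_start_all` is a hypothetical function, and the check that each sub-script exists before it is called is an assumption about how the real script guards the calls.

```shell
# Dry-run sketch: prints the steps instead of starting any daemon.
# plan_start_all and its argument are hypothetical names for illustration.
plan_start_all() {
  hadoop_home="$1"
  # Step 1: load the shared environment settings.
  echo "source $hadoop_home/libexec/hadoop-config.sh"
  # Step 2: start the HDFS daemons if the script is present (assumed guard).
  if [ -f "$hadoop_home/sbin/start-dfs.sh" ]; then
    echo "run $hadoop_home/sbin/start-dfs.sh"
  fi
  # Step 3: start the YARN daemons if the script is present (assumed guard).
  if [ -f "$hadoop_home/sbin/start-yarn.sh" ]; then
    echo "run $hadoop_home/sbin/start-yarn.sh"
  fi
}

# Build a throwaway directory tree so the sketch runs without Hadoop installed.
demo_home=$(mktemp -d)
mkdir -p "$demo_home/sbin"
touch "$demo_home/sbin/start-dfs.sh" "$demo_home/sbin/start-yarn.sh"
plan_start_all "$demo_home"
```

Printing the plan rather than executing it keeps the ordering visible: configuration first, then HDFS, then YARN.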

3) cat libexec/hadoop-config.sh: this script sets the configuration directory (HADOOP_CONF_DIR) to etc/hadoop

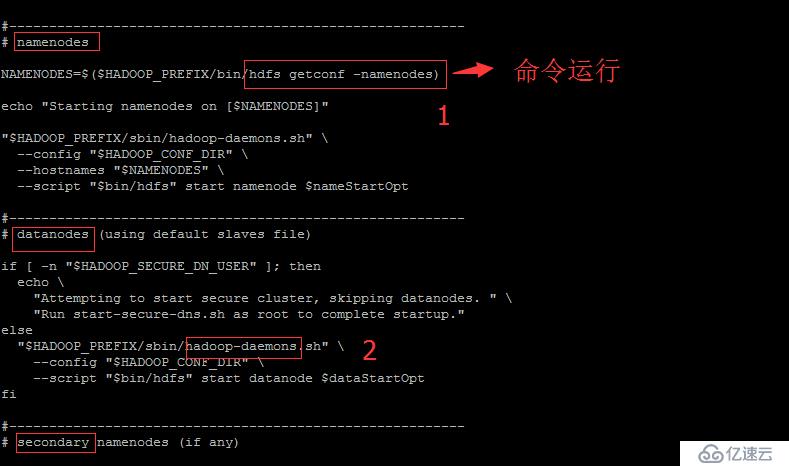
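A minimal sketch of how such a default can be resolved in shell. It assumes that etc/hadoop is only applied when HADOOP_CONF_DIR is not already set, and that the path is taken relative to the installation directory. `resolve_conf_dir` is a hypothetical helper and `/tmp/custom-conf` is a placeholder path.

```shell
# Sketch of default resolution; resolve_conf_dir is a hypothetical helper.
resolve_conf_dir() {
  hadoop_home="$1"
  # Keep an existing HADOOP_CONF_DIR, otherwise fall back to etc/hadoop
  # under the installation directory (assumed behaviour).
  echo "${HADOOP_CONF_DIR:-$hadoop_home/etc/hadoop}"
}

unset HADOOP_CONF_DIR
resolve_conf_dir /soft/Hadoop        # prints /soft/Hadoop/etc/hadoop

HADOOP_CONF_DIR=/tmp/custom-conf
resolve_conf_dir /soft/Hadoop        # prints /tmp/custom-conf
```

The `${VAR:-default}` expansion is standard shell: it yields the default only when the variable is unset or empty.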

4) cat sbin/start-dfs.sh

What sbin/start-dfs.sh does:

1) libexec/hadoop-config.sh

2) sbin/hadoop-daemons.sh --config .. --hostname .. start namenode ...

3) sbin/hadoop-daemons.sh --config .. --hostname .. start datanode ...

4) sbin/hadoop-daemons.sh --config .. --hostname .. start secondarynamenode ...

5) sbin/hadoop-daemons.sh --config .. --hostname .. start zkfc ...
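The sequence above amounts to calling the same helper once per daemon type. A rough dry-run sketch follows: `plan_start_dfs` is a hypothetical function, `etc/hadoop` is used as a sample configuration directory, and the host-selection argument is left out because the listing above elides its value.

```shell
# Dry-run sketch: prints one command line per HDFS daemon type.
# plan_start_dfs is hypothetical; host arguments are omitted on purpose.
plan_start_dfs() {
  conf_dir="$1"
  for daemon in namenode datanode secondarynamenode zkfc; do
    echo "sbin/hadoop-daemons.sh --config $conf_dir start $daemon"
  done
}

plan_start_dfs etc/hadoop
```

The loop order follows the order of the list above.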

5) cat sbin/start-yarn.sh

What sbin/start-yarn.sh does:

libexec/yarn-config.sh

sbin/yarn-daemon.sh start resourcemanager

sbin/yarn-daemons.sh start nodemanager

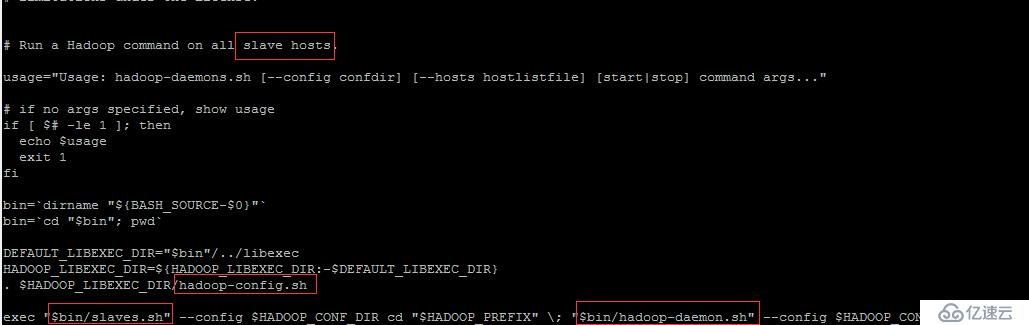
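Note the difference between yarn-daemon.sh (singular) and yarn-daemons.sh (plural): the first acts on the local machine, the second on every worker host. A dry-run sketch of that difference, where `plan_start_yarn` and the host names `worker1` and `worker2` are made up for illustration:

```shell
# Dry-run sketch; plan_start_yarn and the host names are hypothetical.
plan_start_yarn() {
  # The resource manager is started once, on the local machine.
  echo "local: sbin/yarn-daemon.sh start resourcemanager"
  # One node manager is started per worker host passed as an argument.
  for host in "$@"; do
    echo "$host: sbin/yarn-daemon.sh start nodemanager"
  done
}

plan_start_yarn worker1 worker2
```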

6) cat sbin/hadoop-daemons.sh

What sbin/hadoop-daemons.sh does:

libexec/hadoop-config.sh

slaves

hadoop-daemon.sh

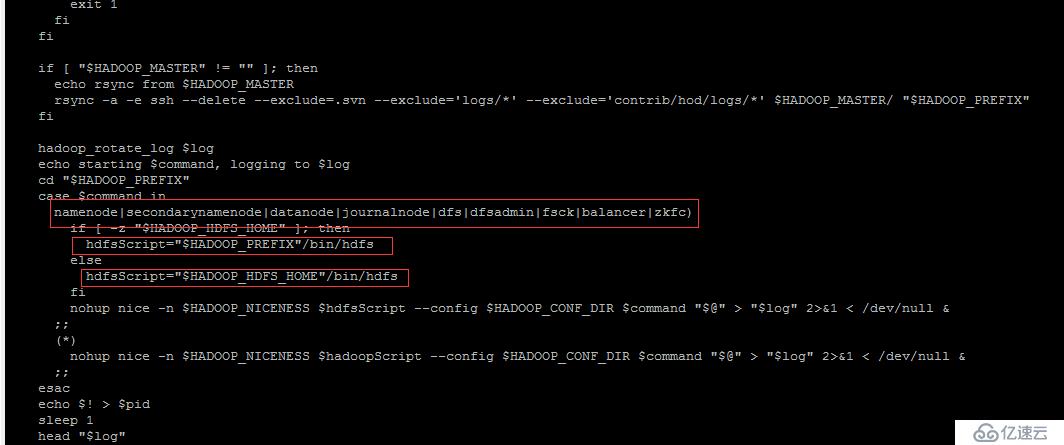

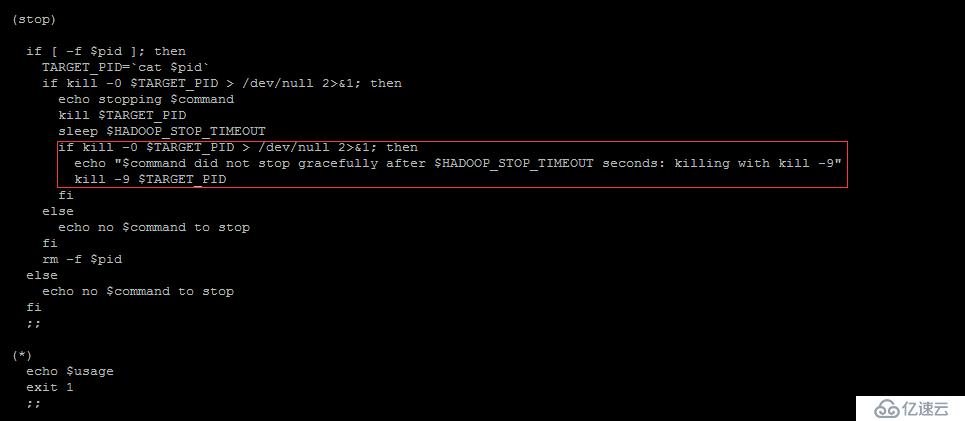
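The fan-out idea behind hadoop-daemons.sh can be sketched as follows: read a list of worker hosts, then run the single-node script on each one. This is only an illustration under assumptions. `fan_out` is a hypothetical function, the host file format (one host per line, `#` comments allowed) is assumed, and the command is printed rather than sent over ssh.

```shell
# Dry-run sketch: prints the per-host command instead of calling ssh.
# fan_out and the host file handling are illustrative assumptions.
fan_out() {
  slaves_file="$1"
  shift
  # Strip comments and blank lines, then emit one line per remaining host.
  sed -e 's/#.*$//' -e '/^[[:space:]]*$/d' "$slaves_file" |
  while read -r host; do
    echo "ssh $host sbin/hadoop-daemon.sh $*"
  done
}

# Sample host list with a comment and a blank line.
slaves_demo=$(mktemp)
printf 'worker1\n# spare node\nworker2\n\n' > "$slaves_demo"
fan_out "$slaves_demo" start datanode
```

With the sample file, only `worker1` and `worker2` produce a command line; the comment and the blank line are skipped.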

7) cat sbin/hadoop-daemon.sh

What sbin/hadoop-daemon.sh does:

libexec/hadoop-config.sh

bin/hdfs ...

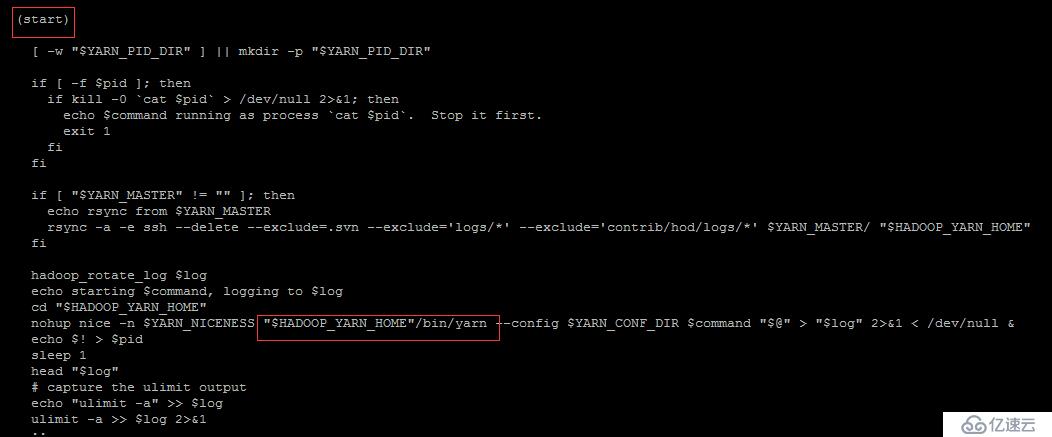

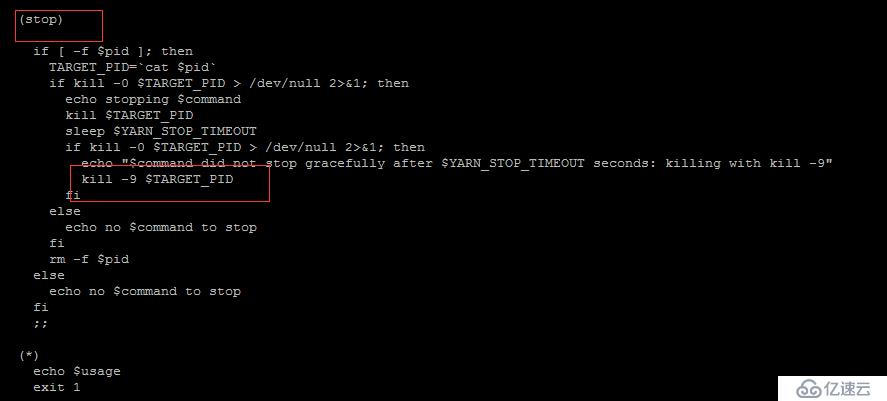
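One way to picture the hand-off to bin/hdfs is a `case` statement keyed on the daemon name. The sketch below is an assumption about the general shape, not the script's real text: `pick_launcher` is a hypothetical helper, the daemon names are the ones listed for start-dfs.sh, and the `bin/hadoop` fallback is an assumption.

```shell
# Sketch of choosing the launcher for a daemon; pick_launcher is hypothetical.
pick_launcher() {
  case "$1" in
    namenode|datanode|secondarynamenode|zkfc)
      echo "bin/hdfs" ;;      # HDFS daemons go through bin/hdfs
    *)
      echo "bin/hadoop" ;;    # assumed fallback for everything else
  esac
}

pick_launcher namenode   # prints bin/hdfs
pick_launcher version    # prints bin/hadoop
```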

8) sbin/yarn-daemon.sh

What sbin/yarn-daemon.sh does:

libexec/yarn-config.sh

bin/yarn

start-all.sh -> config.sh -> start-dfs.sh and start-yarn.sh

start-dfs.sh -> config.sh -> (namenode, secondarynamenode, datanode)

stop-dfs.sh -> config -> ...

hadoop-daemon.sh -> ...
